semantic segmentation

1.Introduction to Semantic Segmentation

The next deep learning feature we will introduce in this tutorial is semantic segmentation. Semantic segmentation is based on image recognition, except that classification occurs at the pixel level rather than the entire image. This is achieved by convolving the pre trained image recognition backbone, which converts the model into a fully convolutional network (FCN) that can be labeled by pixel. Segmentation is particularly useful for environmental perception, as it generates dense per pixel classifications of many different potential objects for each scene, including foreground and background.

SegNet accepts 2D images as input and outputs a second image with per pixel classification mask coverage. Each pixel of the mask corresponds to the classified object category. SegNet can be used from Python and C++.

Download other models

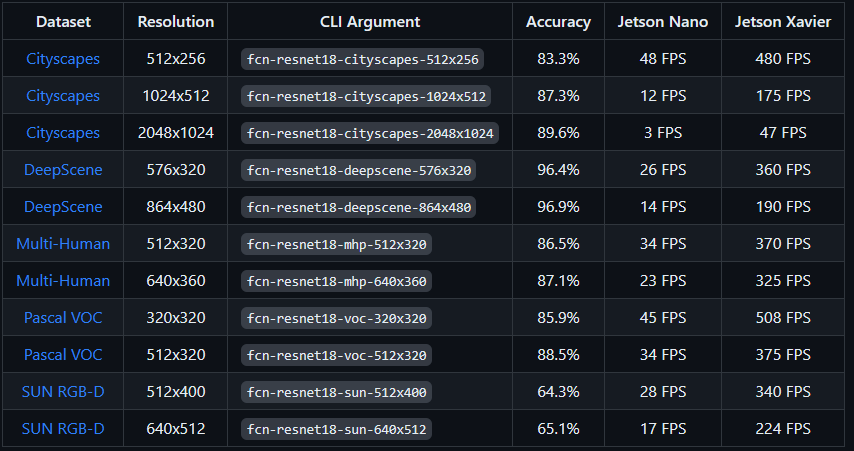

Various pre trained segmentation models for FCN-ResNet18 networks with real-time performance on Jetson. The following is a table of pre trained semantic segmentation models available for use, as well as the relevant network parameters used to load them. They are based on the 21 class FCN-ResNet18 network, trained using PyTorch on various datasets and resolutions, and exported to ONNX format to load TensorRT.

Here, you can download the model you want based on the website: https://github.com/dusty-nv/jetson-inference

2.Image semantic segmentation

The following is an example of using urban landscape models to segment urban street scenes:After building the project, please ensure that your terminal is located in the aarch64/bin directory:

cd jetson-inference/build/aarch64/bin

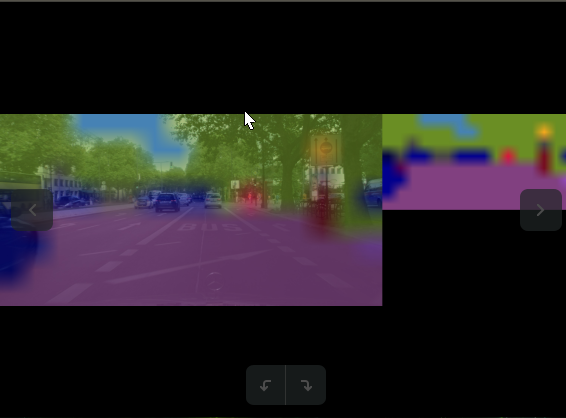

Here are some examples of using the fcn resnet18 cityscapes model:

--Network=You can put your downloaded model files in this. For example, what I have here is fcn resnet18 cityscapes# C++

x# C++$ ./segnet --network=fcn-resnet18-cityscapes images/city_0.jpg images/test/output.jpg# Python$ ./segnet.py --network=fcn-resnet18-cityscapes images/city_0.jpg images/test/output.jpg

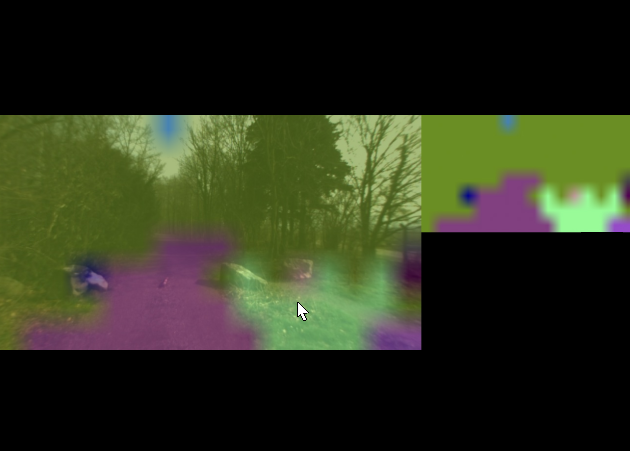

The following example is a DeepScene dataset composed of off-road forest paths and vegetation, which helps with path tracking for outdoor robots.

C++

xxxxxxxxxx$ ./segnet --network=fcn-resnet18-deepscene images/trail_0.jpg images/test/output_overlay.jpg # overlay$ ./segnet --network=fcn-resnet18-deepscene --visualize=mask images/trail_0.jpg images/test/output_mask.jpg # mask

python

xxxxxxxxxx$ ./segnet.py --network=fcn-resnet18-deepscene images/trail_0.jpg images/test/output_overlay.jpg # overlay$ ./segnet.py --network=fcn-resnet18-deepscene --visualize=mask images/trail_0.jpg images/test/output_mask.jpg # mas

Note: If you are building your own environment, you must download the model file to the network folder to run the above program. By using the image we provide, you can directly input the above program

3.Run real-time camera segmentation demonstration

The semaet.cpp/semaet.py samples we previously used can also be used for real-time camera streaming. The supported camera types include:

- MIPI CSI cameras ( csi://0 )

- V4L2 cameras ( /dev/video0 )

- RTP/RTSP streams ( rtsp://username:password@ip:port )

Here are some typical scenarios for starting the program - related to available models

C++

xxxxxxxxxx$ ./segnet --network=<model> csi://0 # MIPI CSI camera$ ./segnet --network=<model> /dev/video0 # V4L2 camera$ ./segnet --network=<model> /dev/video0 output.mp4 # save to video file

python

xxxxxxxxxx$ ./segnet.py --network=<model> csi://0 # MIPI CSI camera$ ./segnet.py --network=<model> /dev/video0 # V4L2 camera$ ./segnet.py --network=<model> /dev/video0 output.mp4 # save to video file

The model we can choose from here is fcn resnet18 mhp.The OpenGL window displays a real-time camera stream overlaid with segmented outputs, as well as a solid segmentation mask for clarity. Here are some examples to try using with different models:

xxxxxxxxxx# C++$ ./segnet --network=fcn-resnet18-deepscene csi://0# Python$ ./segnet.py --network=fcn-resnet18-deepscene csi://0