YOLOv5 + TensorRT acceleration + DeepStream


1. Precautions before use

If you are using the YAHBOOM version of the image, DeepStream is already set up and you do not need to build the environment. If you built your own image, you need to set up the DeepStream environment first. You can follow the DeepStream setup tutorial we provide, or search online (for example, on Baidu) for instructions.

2. Instructions

2.1 Model Transformation

    git clone https://github.com/marcoslucianops/DeepStream-Yolo.git
    cd DeepStream-Yolo/utils
    cp gen_wts_yoloV5.py ../../yolov5
    cd ../../yolov5
    python3 gen_wts_yoloV5.py -w ./yolov5s.pt

2.2 Deployment Model

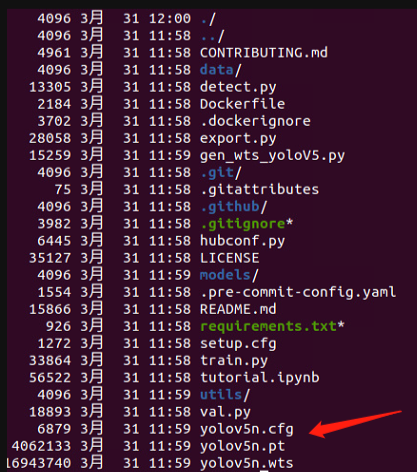

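- After the previous step runs successfully, two files appear in the yolov5 directory: yolov5n.cfg and yolov5n.wts. The output files are named after the weights file you pass with -w, so converting yolov5s.pt as in the command above gives yolov5s.cfg and yolov5s.wts instead.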

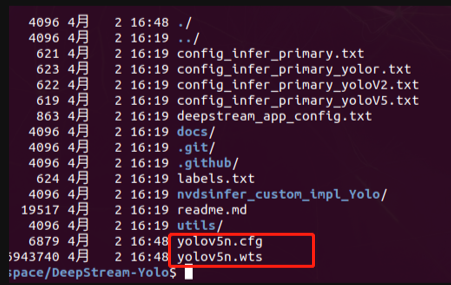

- Copy yolov5n.cfg and yolov5n.wts to the DeepStream-Yolo folder on the Jetson Orin NX
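Before copying, it can help to confirm that the conversion really produced a matching .cfg/.wts pair. The following is a minimal sketch, not part of the original tutorial; the function name `converted_models` is hypothetical, and it only checks file names, not file contents.

```python
from pathlib import Path


def converted_models(directory):
    """Return model names that have both a .cfg and a .wts file in `directory`."""
    folder = Path(directory)
    cfg_names = {p.stem for p in folder.glob("*.cfg")}
    wts_names = {p.stem for p in folder.glob("*.wts")}
    # Only names present in both sets are complete conversions.
    return sorted(cfg_names & wts_names)
```

Calling `converted_models(".")` from inside the yolov5 directory lists the models that are ready to copy; an empty list suggests the conversion step did not finish.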

2.3 Modify the deepstream configuration file (this step can be omitted for YAHBOOM version images)

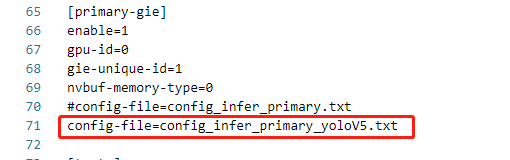

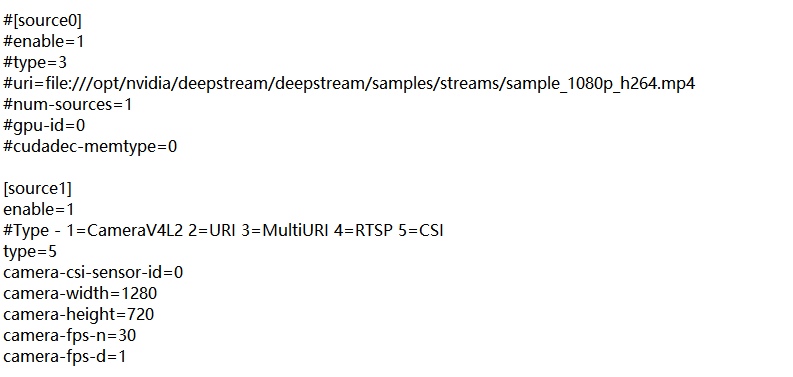

- Modify the deepstream_app_config.txt file

The modified content is as follows:

Comment out line 70 and add the following line after it:

config-file=config_infer_primary_yoloV5.txt

As shown in the figure:

- Modify the second configuration file config_infer_primary_yoloV5.txt

      [property]
      # omit ...
      # fp32 -> fp16
      model-engine-file=model_b2_gpu0_fp16.engine
      # batch-size changed to 2; the speed will be faster
      batch-size=2
      # omit ...
      # 2: force FP16 inference
      network-mode=2
      # omit ...

Note: FPS depends on parameters such as input image size, batch size, and interval, and should be tuned for your application. Here we simply change the batch size to 2, which noticeably improves the inference speed of the model.
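The three settings above have to agree with each other: the engine file name encodes the batch size and precision. As a rough sanity check, the sketch below compares them. It is an illustration only, and it assumes the engine file follows the `model_b<N>_gpu<id>_<precision>.engine` naming pattern seen above and that network-mode uses 0 for FP32, 1 for INT8, and 2 for FP16. The function names are hypothetical.

```python
import re

# Assumed nvinfer network-mode values: 0 = FP32, 1 = INT8, 2 = FP16
PRECISION_BY_MODE = {"0": "fp32", "1": "int8", "2": "fp16"}


def read_properties(text):
    """Collect key=value pairs, skipping section headers, blanks, and # comment lines."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or line.startswith("["):
            continue
        key, sep, value = line.partition("=")
        if sep:
            # Tolerate a trailing "# ..." annotation after the value.
            props[key.strip()] = value.split("#", 1)[0].strip()
    return props


def find_mismatches(text):
    """Return a list of human-readable inconsistencies (empty if none found)."""
    props = read_properties(text)
    engine = props.get("model-engine-file", "")
    match = re.search(r"_b(\d+)_gpu\d+_(\w+)\.engine$", engine)
    if not match:
        return ["engine file name does not follow model_b<N>_gpu<id>_<precision>.engine"]
    batch, precision = match.groups()
    problems = []
    if props.get("batch-size") != batch:
        problems.append(f"batch-size={props.get('batch-size')} but engine name says b{batch}")
    expected = PRECISION_BY_MODE.get(props.get("network-mode"))
    if expected != precision:
        problems.append(f"network-mode implies {expected} but engine name says {precision}")
    return problems
```

Passing the contents of config_infer_primary_yoloV5.txt to `find_mismatches` returns an empty list when the batch size and precision match the engine file name.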

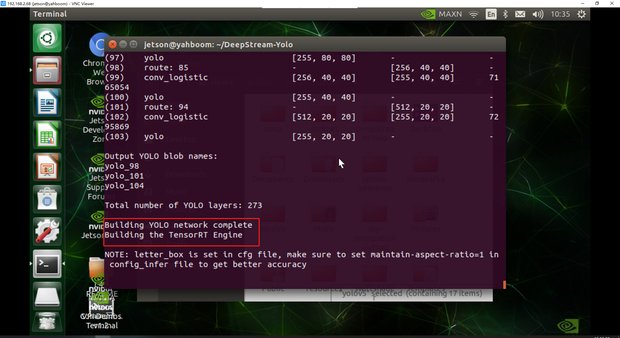

3. Compile and run

    cd nvdsinfer_custom_impl_Yolo/
    CUDA_VER=11.4 make -j4  # change 11.4 to match your CUDA version
    cd ..
    deepstream-app -c deepstream_app_config.txt

After a short wait, the CSI camera preview window opens.
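The CUDA_VER value in the build command has to match the CUDA toolkit installed on the board. One way to find it is to read the release number from `nvcc --version`. The sketch below is a hedged illustration: the helper names are hypothetical, and it assumes `nvcc` is on the PATH (on Jetson it is often under /usr/local/cuda/bin).

```python
import re
import subprocess


def parse_cuda_release(nvcc_output):
    """Extract the 'major.minor' release from `nvcc --version` text, or None."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def detect_cuda_release():
    """Run nvcc and return its release string; assumes nvcc is on PATH."""
    result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True)
    return parse_cuda_release(result.stdout)
```

If `detect_cuda_release()` returns a version string, use that value for CUDA_VER when running make.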

Note

- If you are using an image you built yourself, you need to modify deepstream_app_config.txt as shown in the figure:

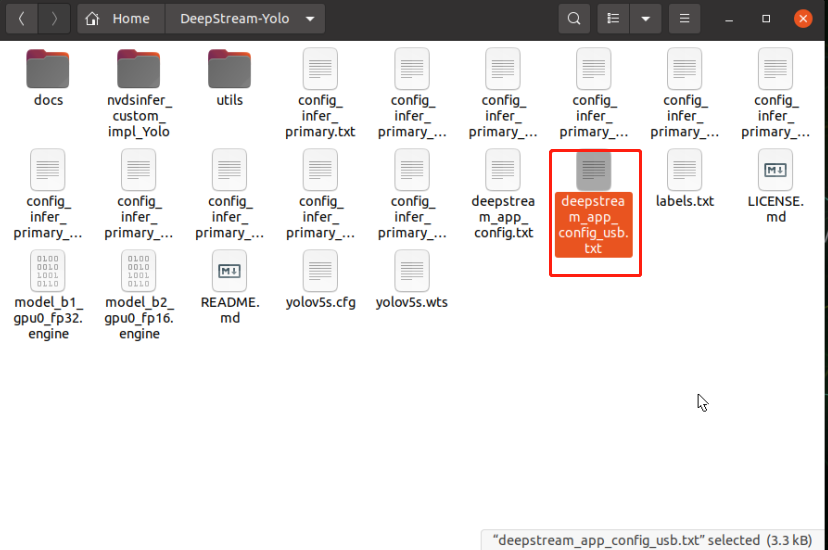

- If you are using a USB camera, upload the deepstream_app_config_usb.txt file from this document's attachments to the Jetson and place it in the same directory as deepstream_app_config.txt, as shown in the figure.

Then run:

    cd ~/DeepStream-Yolo
    deepstream-app -c deepstream_app_config_usb.txt

After a moment, detection starts.

For a description of the DeepStream configuration file parameters and related reference links, see: https://blog.csdn.net/weixin_38369492/article/details/104859567