8. ORB_SLAM2 basics

8. ORB_SLAM2 basics8.1. Introduction8.2. Official case8.2.1. Monocular test8.2.2. Binocular test8.2.3, RGBD test8.3, ORB_SLAM2_ROS camera test8.3.1. Internal parameter modification8.3.2. Monocular8.3.3. Monocular AR8.3.4, RGBD

Official website: http://webdiis.unizar.es/~raulmur/orbslam/

ASL Dataset: https://projects.asl.ethz.ch/datasets/doku.php?id=kmavvisualinertialdatasets

mono Dataset: https://vision.in.tum.de/data/datasets/rgbd-dataset/download

stereo Dataset: http://robotics.ethz.ch/~asl-datasets/ijrr_euroc_mav_dataset/machine_hall/MH_01_easy/

orb_slam2_ros: http://wiki.ros.org/orb_slam2_ros

ORB-SLAM: https://github.com/raulmur/ORB_SLAM

ORB-SLAM2: https://github.com/raulmur/ORB_SLAM2

ORB-SLAM3: https://github.com/UZ-SLAMLab/ORB_SLAM3

8.1. Introduction

ORB-SLAM is mainly used for monocular SLAM;

The ORB-SLAM2 version supports three interfaces: monocular, binocular and RGBD;

The ORB-SLAM3 version adds IMU coupling and supports fisheye cameras.

All steps of ORB-SLAM use the ORB features of the image uniformly. The ORB feature is a very fast feature extraction method that is rotation invariant and can use pyramids to build scale invariance. Using unified ORB features helps the SLAM algorithm to be consistent in steps such as feature extraction and tracking, key frame selection, three-dimensional reconstruction, and closed-loop detection. The system is also robust to severe motion and supports wide-baseline closed-loop detection and relocalization, including fully automatic initialization. Since the ORB-SLAM system is a SLAM system based on feature points, it can calculate the camera's trajectory in real time and generate sparse three-dimensional reconstruction results of the scene.

On the basis of ORB-SLAM, ORB-SLAM2 contributes:

The first open source SLAM system for monocular, binocular and RGBD cameras, including loopback, relocation and map reuse.

The results of RGBD show that more accuracy can be obtained by using BA than ICP or minimization based on photometric and depth errors.

By using the far point and near point in binoculars and monocular observation, the binocular results are more accurate than the direct binocular SLAM algorithm.

Light positioning mode can effectively reuse maps.

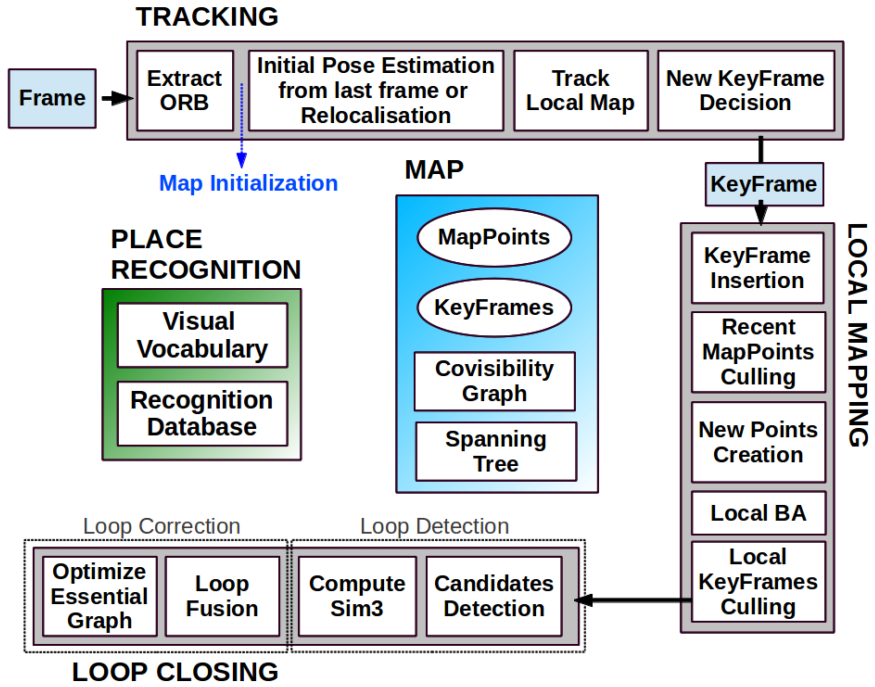

ORB-SLAM2 includes modules common to all SLAM systems: tracking, mapping, relocalization, and loop closing. . The figure below is the process of ORB-SLAM2.

8.2. Official case

Open the terminal and enter ORB_SLAM2

xxxxxxxxxx#Raspberry Pi 5 master needs to enter docker first, please perform this step#If running the script into docker fails, please refer to ROS/07, Docker tutorial~/run_docker.shxxxxxxxxxxcd ~/software/ORB_SLAM2

8.2.1. Monocular test

xxxxxxxxxx./Examples/Monocular/mono_tum Vocabulary/ORBvoc.txt Examples/Monocular/TUM3.yaml ~/software/ORB_SLAM_data/rgbd_dataset_freiburg3_long_office_household

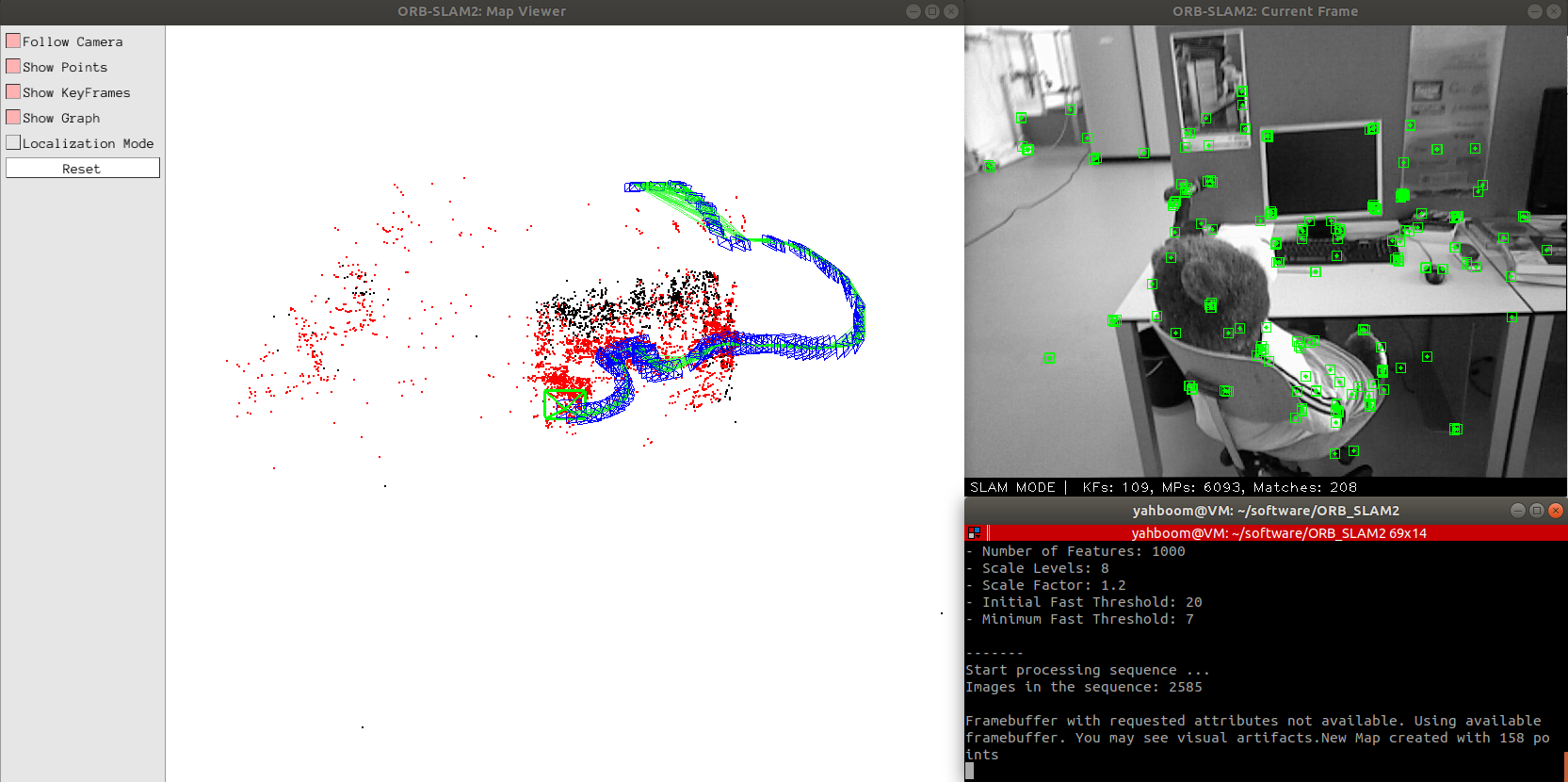

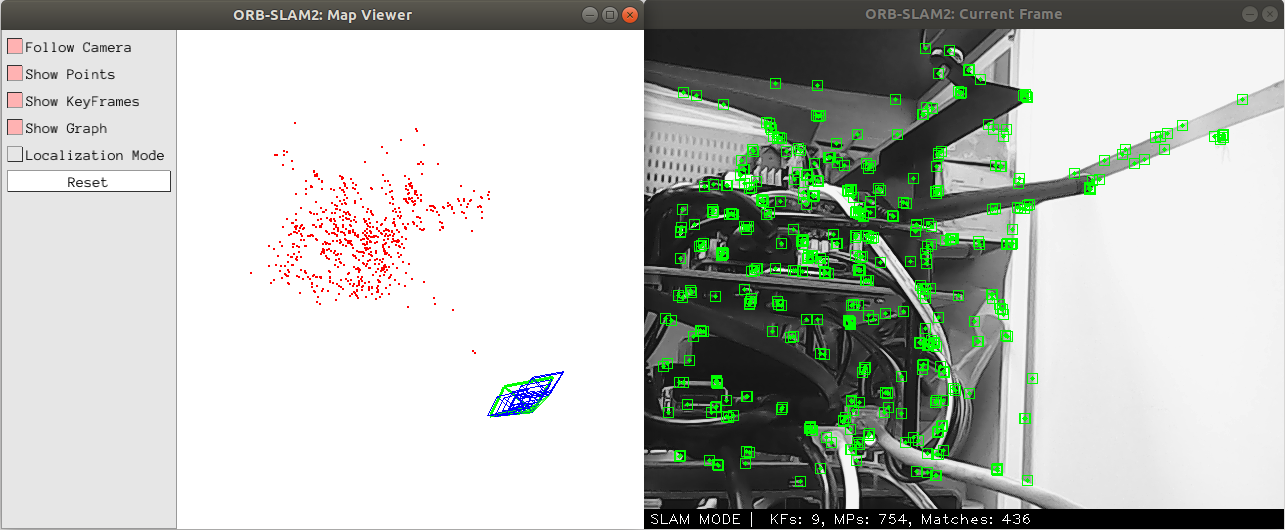

The blue frame is the key frame, the green frame is the camera orientation, the black point is the saved point, and the red point is the point currently seen by the camera.

After the test is completed, the keyframes are saved to the KeyFrameTrajectory.txt file in the current directory.

xxxxxxxxxx# Timestamp position (x y z) + attitude (x y z w)1341847980.722988 -0.0000464 0.0001060 0.0000110 -0.0000183 0.0001468 -0.0000286 1.00000008.2.2. Binocular test

xxxxxxxxxx./Examples/Stereo/stereo_euroc Vocabulary/ORBvoc.txt Examples/Stereo/EuRoC.yaml ~/software/ORB_SLAM_data/MH_01_easy/mav0/cam0/data ~/software/ORB_SLAM_data/MH_01_easy/mav0/cam1/data Examples/Stereo/EuRoC_TimeStamps /MH01.txt

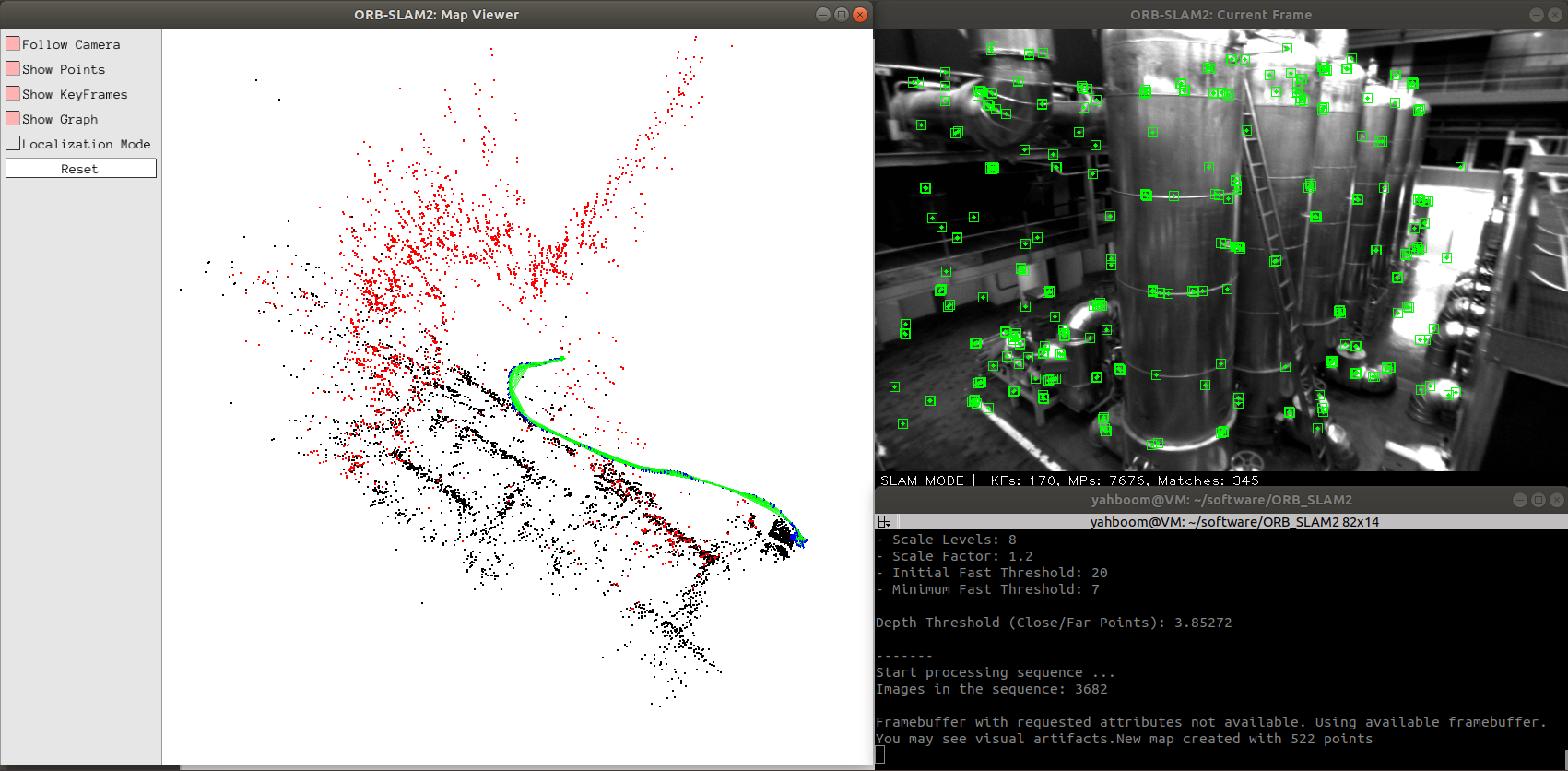

The blue frame is the key frame, the green frame is the camera orientation, the black point is the saved point, and the red point is the point currently seen by the camera.

After the test is completed, the keyframes are saved to the CameraTrajectory.txt file in the current directory.

xxxxxxxxxx# Timestamp position (x y z) + attitude (x y z w)1403636597.963556 -0.020445164 0.127641633 0.107868195 -0.136788622 -0.074876986 -0.044620439 0.9867579948.2.3, RGBD test

Merge the depth data and color image data into rgbd data and save it to the associations.txt file

xxxxxxxxxxcd ~/software/ORB_SLAM_data/rgbd_dataset_freiburg3_long_office_householdpython2 associate.py

Back to ORB_SLAM2

xxxxxxxxxxcd ~/software/ORB_SLAM2

test command

xxxxxxxxxx./Examples/RGB-D/rgbd_tum Vocabulary/ORBvoc.txt Examples/RGB-D/TUM3.yaml ~/software/ORB_SLAM_data/rgbd_dataset_freiburg3_long_office_household ~/software/ORB_SLAM_data/rgbd_dataset_freiburg3_long_office_household/associations.txt

8.3, ORB_SLAM2_ROS camera test

The internal parameters of the camera have been modified before the product leaves the factory. If you want to learn how to do this, please refer to the section [8.3.1, Modification of Internal Parameters]. It can be handheld or robot used as a mobile carrier for mobile testing.

If it is held, there is no need to execute the next command, otherwise, it will be executed. (Robot side)

xxxxxxxxxxroslaunch yahboomcar_slam camera_driver.launch

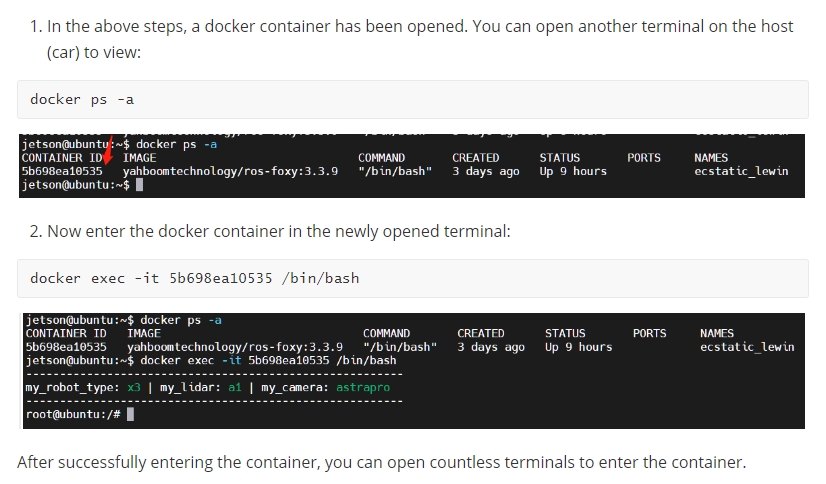

<PI5 needs to open another terminal to enter the same docker container

Start the camera ORB_SLAM2 test (Robot side or virtual machine)

xxxxxxxxxxroslaunch yahboomcar_slam camera_orb_slam.launch orb_slam_type:=mono[orb_slam_type] parameters: [mono, monoAR, rgbd], there are three types available, monocular, monocular AR, rgbd.

8.3.1. Internal parameter modification

The camera requires the internal parameters of the camera before running ORBSLAM, so the camera must be calibrated first. The specific method can be found in the lesson [02, Astra Camera Calibration].

Start monocular camera

xxxxxxxxxxroslaunch usb_cam usb_cam-test.launch

Start calibration node

xxxxxxxxxxrosrun camera_calibration cameracalibrator.py image:=/usb_cam/image_raw camera:=/usb_cam --size 9x6 --square 0.02

After calibration, move the [calibrationdata.tar.gz] file to the [home] directory.

xxxxxxxxxxsudo mv /tmp/calibrationdata.tar.gz ~

After unzipping, open [ost.yaml] in the folder and find the camera internal parameter matrix, for example: the following content.

xxxxxxxxxxcamera_matrix: rows: 3 cols: 3 data: [ 683.90304, 0. , 294.56102, 0. , 679.88513, 228.05956, 0. , 0. , 1. ]Camera internal parameter matrix

xxxxxxxxxx# fx 0 cx# 0 fy cy# 0 0 1Modify the data in data to the values corresponding to [astra.yaml] and [astra1.0.yaml] in the [param] folder under the [yahboomcar_slam] function package.

xxxxxxxxxxCamera.fx: 683.90304Camera.fy: 679.88513Camera.cx: 294.56102Camera.cy: 228.059568.3.2. Monocular

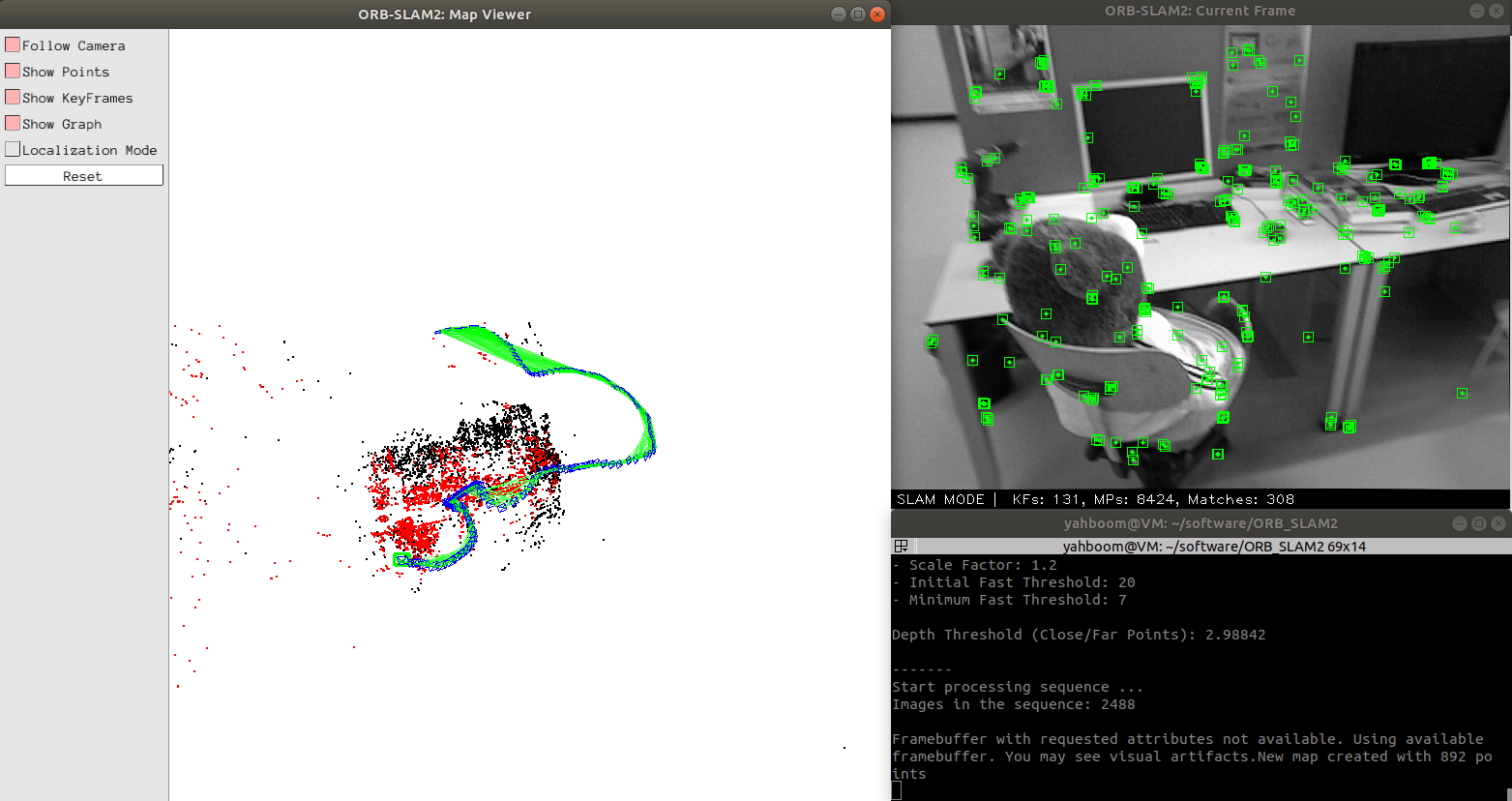

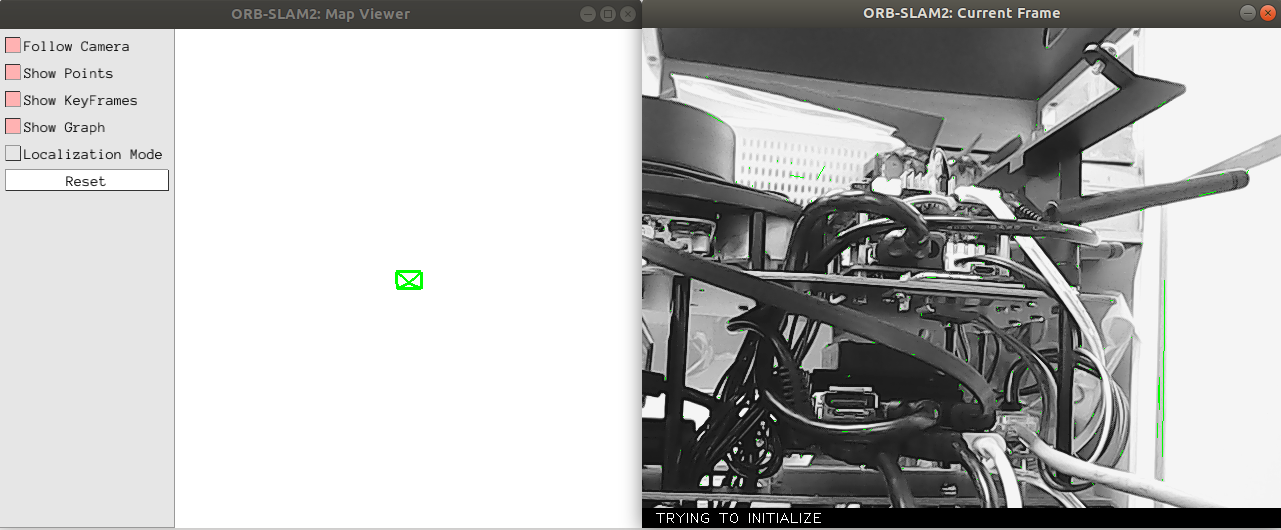

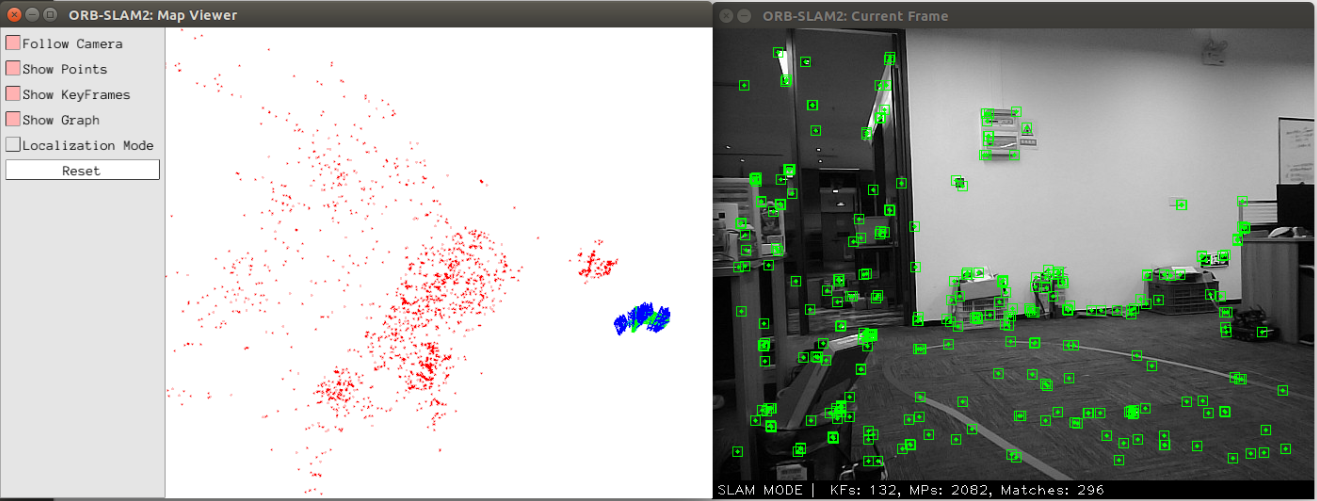

When the command is executed, there is only a green box in the [ORB_SLAM2:Map Viewer] interface, and the [ORB_SLAM2:Current Frame] interface is trying to initialize. At this time, slowly move the camera up, down, left, and right to find feature points in the screen and initialize slam. .

As shown in the picture above, when you enter the [SLAM MODE] mode, you must continuously acquire each frame of image to position the camera when running the monocular. If you select the pure positioning mode of [Localization Mode] in the upper left picture, the camera will not be able to find its own position. You have to start over to get the keyframes.

8.3.3. Monocular AR

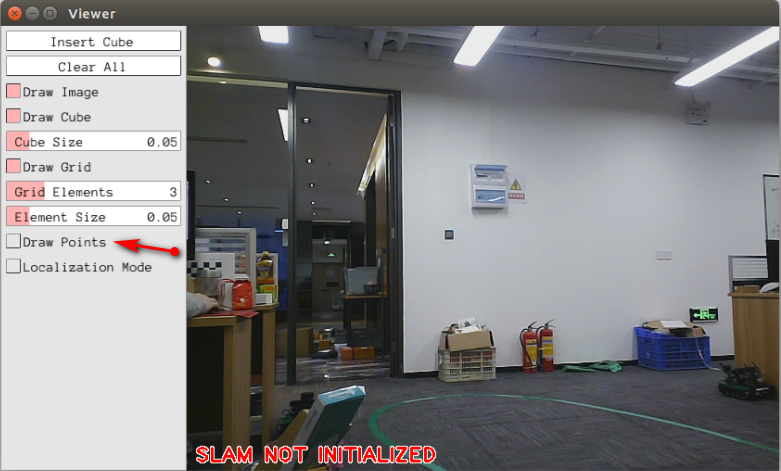

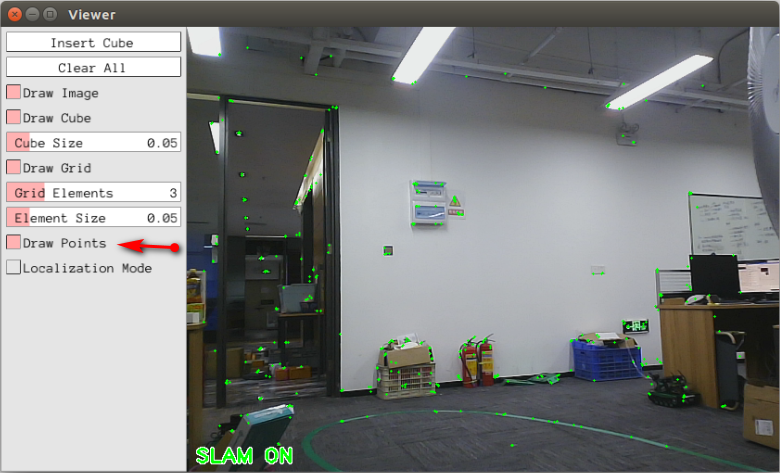

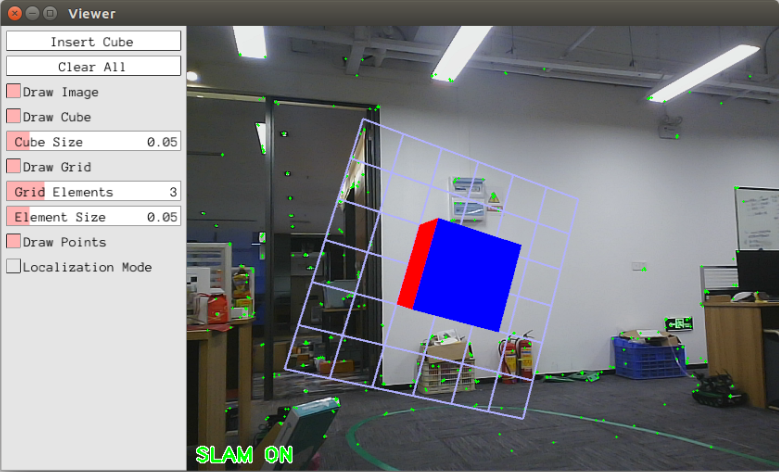

When the command is executed, there is only one interface and [slam not initialized] is displayed. slam is not initialized. Click the box to the left of [Draw Points] in the left column to display feature points. At this time, slowly move the camera up, down, left, and right to find feature points in the picture and initialize slam.

As shown in the picture above, enter [SLAM ON] mode at this time. Click [Insert Cube] on the screen to insert an AR cube where it is considered to be a plane. And the AR block will always be in a fixed position in the scene.

, not a fixed position on the camera screen. Click [Clear All] to clear.

8.3.4, RGBD

RGBD does not have to continuously acquire each frame of image like running a monocular. If you select the pure positioning mode [Localization Mode] in the upper left picture, you can position the key frame just acquired.