9. Cartographer mapping algorithm

9. Cartographer mapping algorithm9.1. Introduction9.2. Use9.2.1. Start9.2.2. Controlling the robot9.2.3. Map saving9.2.4. Node View9.2.5. View tf tree9.3. Configuration parameters

Cartographer: https://google-cartographer.readthedocs.io/en/latest/

Cartographer ROS: https://google-cartographer-ros.readthedocs.io/en/latest/

map_server: https://wiki.ros.org/map_server

9.1. Introduction

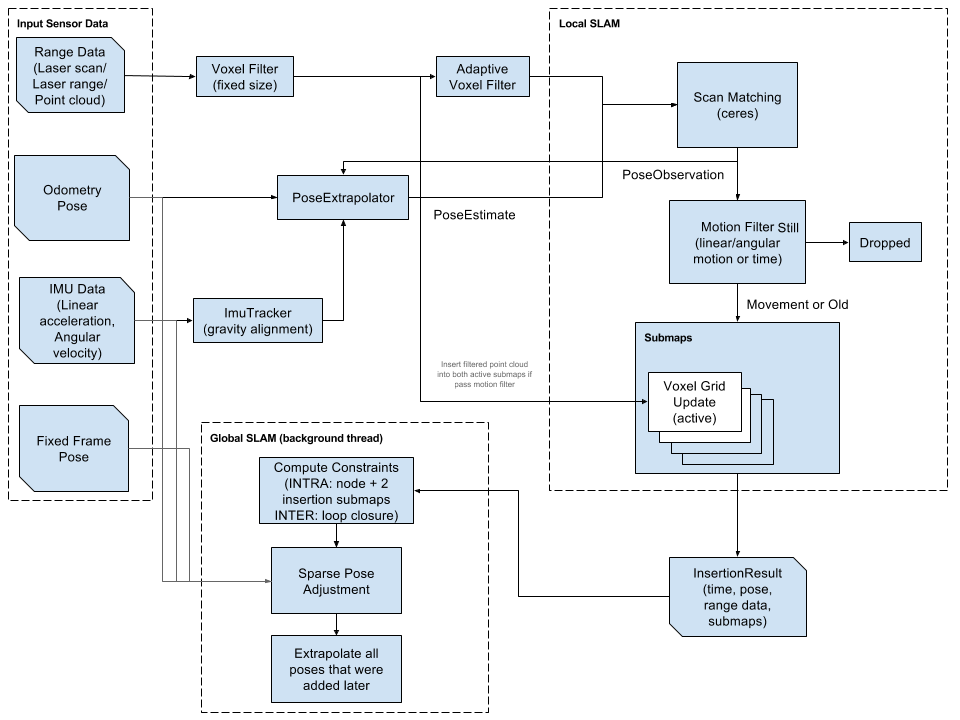

Cartographer is a 2D and 3D SLAM (simultaneous localization and mapping) library supported by Google's open source ROS system. A graph-building algorithm based on graph optimization (multi-threaded backend optimization, problem optimization of ceiling construction). Data from multiple sensors (such as LIDAR, IMU, and cameras) can be combined to simultaneously calculate the sensor's position and map the environment around the sensor.

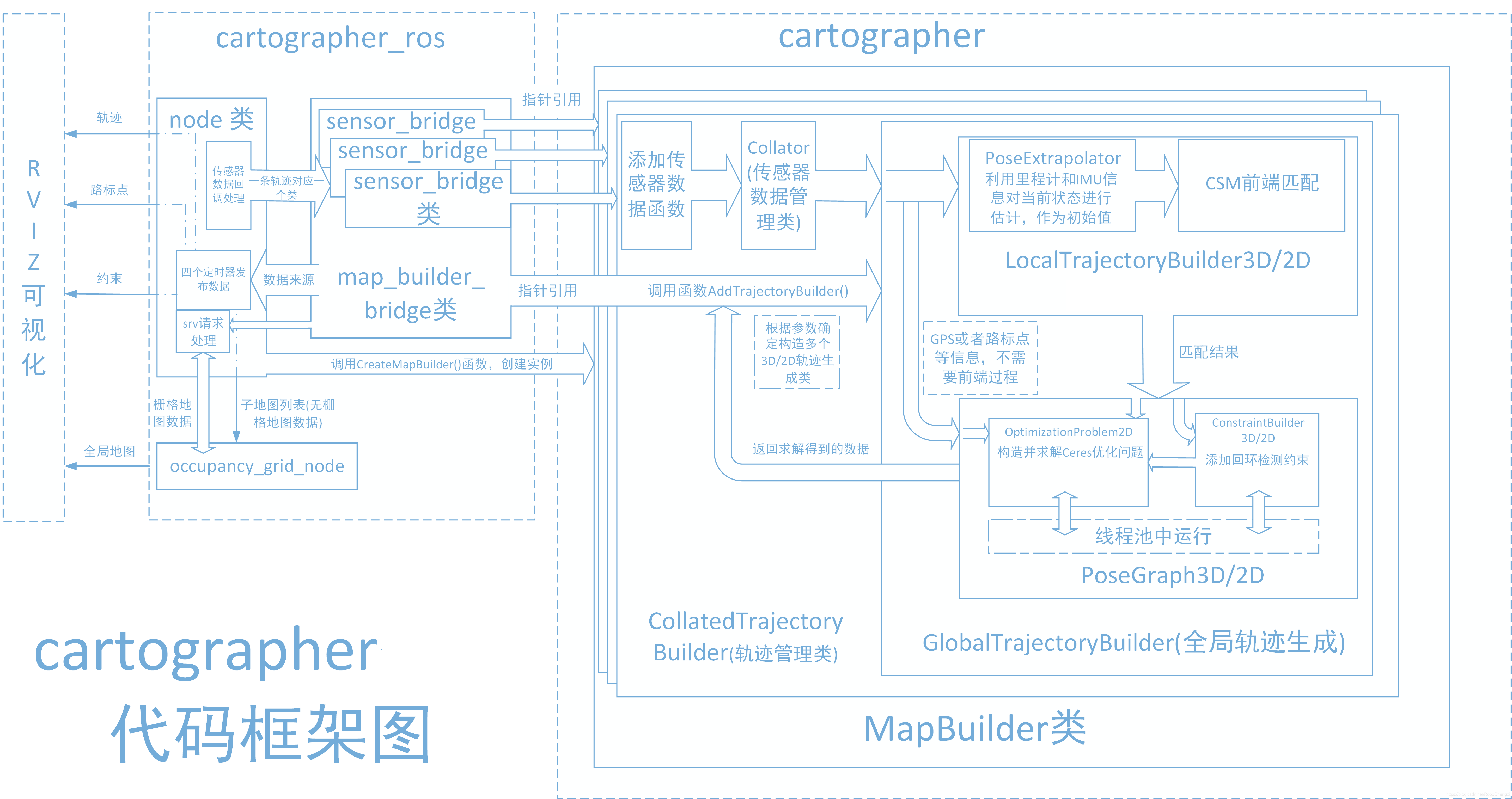

The source code of cartographer mainly includes three parts: cartographer, cartographer_ros and ceres-solver (back-end optimization).

cartographer

Cartographer uses the mainstream SLAM framework, which is a three-stage structure of feature extraction, closed-loop detection, and back-end optimization. A certain number of LaserScans form a submap, and a series of submaps constitute the global map. The cumulative error in the short-term process of using LaserScan to construct a submap is not large, but the long-term process of using a submap to construct a global map will have a large cumulative error. Therefore, closed-loop detection is needed to correct the positions of these submaps. The basic unit of closed-loop detection is submap, closed-loop detection uses the scan_match strategy. The focus of cartographer is the creation of submap subgraphs that integrate multi-sensor data (odometry, IMU, LaserScan, etc.) and the implementation of the scan_match strategy for closed-loop detection.

cartographer_ros

The cartographer_ros package runs under ROS and can accept various sensor data in the form of ROS messages. After processing, it can be published in the form of messages to facilitate debugging and visualization.

9.2. Use

Note: When building a map, the slower the speed, the better the effect (note that the rotation speed should be slower). If the speed is too fast, the effect will be poor.

According to different models, you only need to set the purchased model in [.bashrc], X1 (normal four-wheel drive) X3 (Mailun) Take X3 as an example

xxxxxxxxxx#Raspberry Pi 5 master needs to enter docker first, please perform this step#If running the script into docker fails, please refer to ROS/07, Docker tutorial~/run_docker.shOpen the [.bashrc] file

xxxxxxxxxxsudo vim .bashrc

Find the [ROBOT_TYPE] parameters and modify the corresponding car model

xxxxxxxxxxexport ROBOT_TYPE=X3 # ROBOT_TYPE: X1 X3 X3plus R2 X7To replace the configuration file, copy the launch file and lua file required by cartographer to the specified directory. It is configured before leaving the factory.

xxxxxxxxxxsudo bash ~/yahboomcar_ws/src/yahboomcar_nav/scripts/copy_carto.sh

9.2.1. Start

Start the command (robot side). For the convenience of operation, this section takes [mono + laser + yahboomcar] as an example.

xxxxxxxxxxroslaunch yahboomcar_nav laser_bringup.launch # laser + yahboomcarroslaunch yahboomcar_nav laser_usb_bringup.launch # mono + laser + yahboomcarroslaunch yahboomcar_nav laser_astrapro_bringup.launch # Astra + laser + yahboomcarMapping command (robot side)

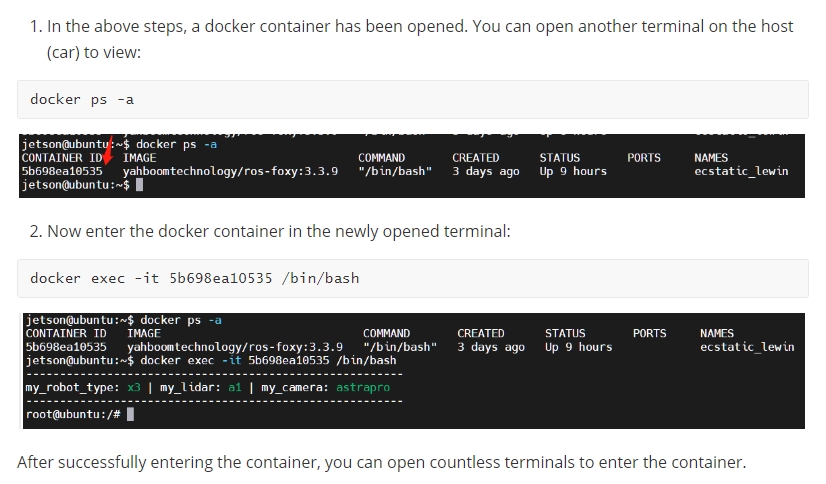

<PI5 needs to open another terminal to enter the same docker container

xxxxxxxxxxroslaunch yahboomcar_nav yahboomcar_map.launch use_rviz:=false map_type:=cartographer[use_rviz] parameter: whether to enable rviz visualization.

[map_type] parameter: Set the mapping algorithm [cartographer].

Turn on the visual interface (virtual machine side)

xxxxxxxxxxroslaunch yahboomcar_nav view_cartographer.launch

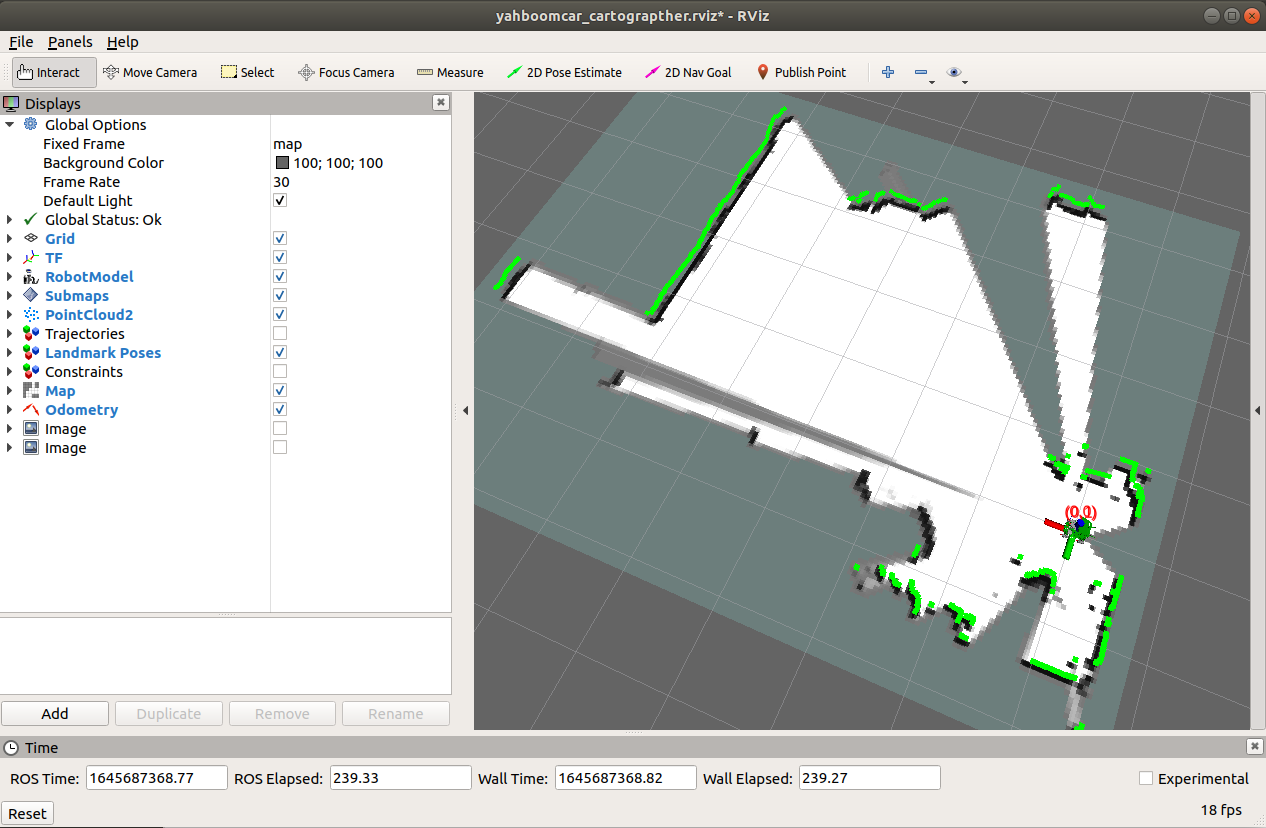

The gap at the back of the robot is due to the obstruction caused by the installation position of the display screen, so a certain range of radar data is blocked. The shielding range can be adjusted, or it can not be blocked according to the actual situation. For specific operations, see [01. Radar Basic Course].

9.2.2. Controlling the robot

Keyboard controls robot movement

xxxxxxxxxxrosrun teleop_twist_keyboard teleop_twist_keyboard.py # System integrationroslaunch yahboomcar_ctrl yahboom_keyboard.launch # CustomControl the robot movement with the handle

Make the robot cover the area to be mapped and the map should be as closed as possible.

There may be some scattered points during the mapping process. If the mapping environment is well closed, relatively regular, and the movement is slow, the scattering phenomenon will be much smaller.

9.2.3. Map saving

xxxxxxxxxxbash ~/yahboomcar_ws/src/yahboomcar_nav/maps/carto_map.shThe map will be saved to the ~/yahboomcar_ws/src/yahboomcar_nav/maps/ folder, a pgm image and a yaml file.

map.yaml

xxxxxxxxxximage: map.pgmresolution: 0.05origin: [-15.4,-12.2,0.0]Negate: 0occupied_thresh: 0.65free_thresh: 0.196Parameter analysis:

image: The path of the map file, which can be an absolute path or a relative path.

resolution: resolution of the map, meters/pixel

Origin: 2D pose (x, y, yaw) in the lower left corner of the map. The yaw here is rotated counterclockwise (yaw=0 means no rotation). Many parts of the current system ignore the yaw value.

negate: whether to reverse the meaning of white/black and free/occupied (the interpretation of the threshold is not affected)

occupied_thresh: Pixels with an occupation probability greater than this threshold will be considered fully occupied.

free_thresh: Pixels with occupancy probability less than this threshold will be considered completely free.

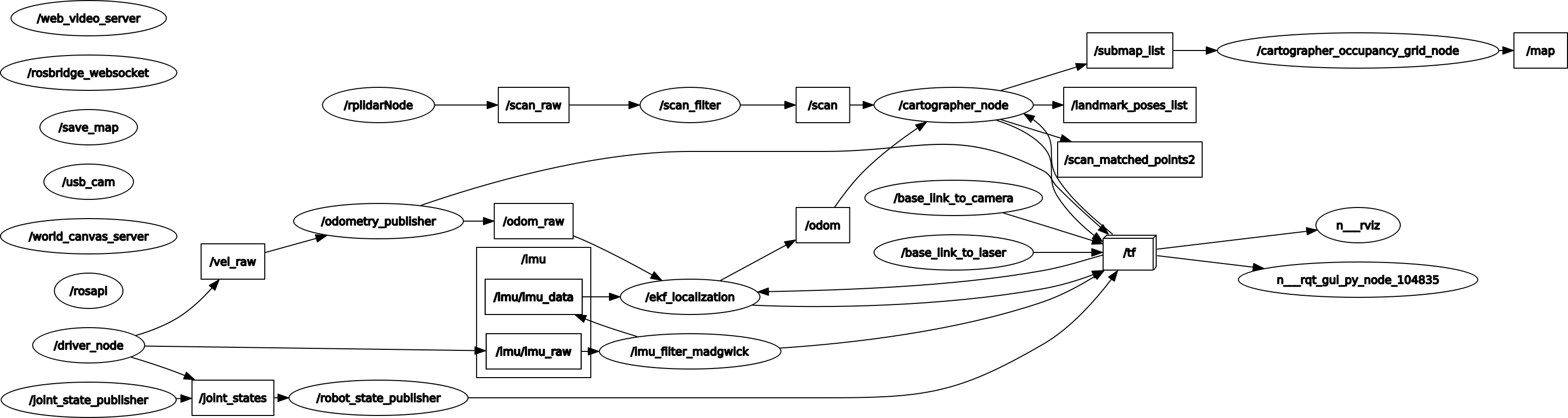

9.2.4. Node View

xxxxxxxxxxrqt_graph

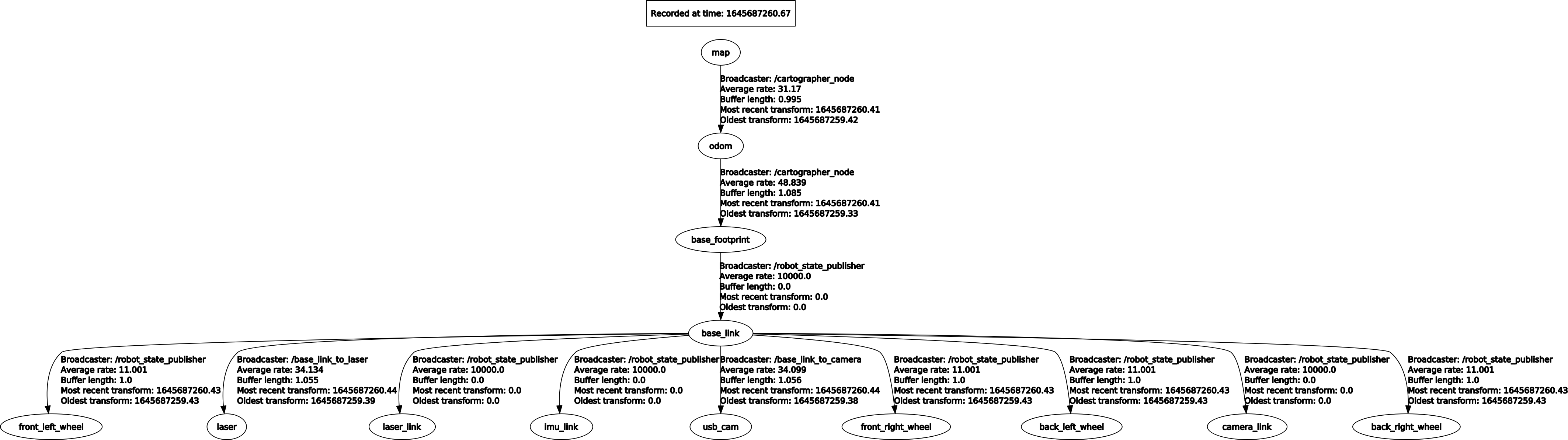

9.2.5. View tf tree

xxxxxxxxxxrosrun rqt_tf_tree rqt_tf_tree

9.3. Configuration parameters

lua file

| Parameters | Description |

|---|---|

| map_frame | map coordinate system |

| tracking_frame | Convert all sensor data to this coordinate system |

| published_frame | The coordinate system pointed to by map |

| odom_frame | Do you provide odom's tf? If it is true, the tf tree is map->odom->footprint; if it is false, the tf tree is map->footprint |

| provide_odom_frame | If true, the local, non-loop-closed, continuous pose will be published as odom_frame in map_frame? |

| publish_frame_projected_to_2d | If enabled, published poses will be restricted to pure 2D poses (no roll, pitch or z-offset) |

| use_odometry | Whether to use odometer, if required, you must have odom tf |

| use_nav_sat | Whether to use gps |

| use_landmarks | Whether to use landmarks |

| num_laser_scans | Whether to use single-line laser data |

| num_multi_echo_laser_scans | Whether to use multi_echo_laser_scans data |

| num_subdivisions_per_laser_scan | 1 frame of data is divided into several times for processing, usually 1 |

| num_point_clouds | Whether to use point cloud data |

| lookup_transform_timeout_sec | Timeout when looking up tf |

| submap_publish_period_sec | Time interval for publishing submap (seconds) |

| pose_publish_period_sec | The time interval for publishing pose, when the value is 5e-3, it is 200HZ |

| trajectory_publish_period_sec | The time interval for publishing trajectory markers (trajectory nodes), the value is 30e-3 is 30ms |

| rangefinder_sampling_ratio | Fixed sampling frequency for lidar messages |

| odometry_sampling_ratio | Fixed sampling frequency of odometry messages |

| fixed_frame_pose_sampling_ratio | Fixed sampling frequency of fixed coordinate system messages |

| imu_sampling_ratio | Fixed sampling frequency of IMU messages |

| landmarks_sampling_ratio | Fixed sampling frequency of landmark messages |