6. Voice control color tracking

6.1. Function description

By interacting with the voice recognition module on ROSMASTER, you can turn on or off ROSMASTER's red/blue/green/yellow color tracking function by voice. The R2 key on the handle can cancel/enable this function at any time.

6.2. Start

xxxxxxxxxx#Raspberry Pi 5 master needs to enter docker first, please perform this step#If running the script into docker fails, please refer to ROS/07, Docker tutorial~/run_docker.sh6.2.1. Function package path

xxxxxxxxxx~/yahboomcar_ws/src/yahboomcar_voice_ctrl/

6.2.2. Start

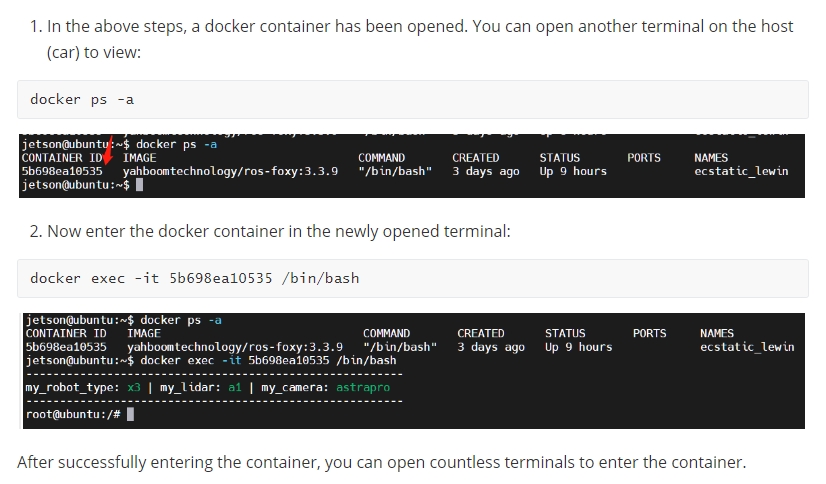

xxxxxxxxxxroslaunch yahboomcar_voice_ctrl voice_ctrl_colorTracker.launch<PI5 needs to open another terminal to enter the same docker container

xxxxxxxxxxpython ~/yahboomcar_ws/src/yahboomcar_voice_ctrl/scripts/voice_Ctrl_color_tracker.py

After the program is started, call ROSMASTER "Hello, Xiaoya" to wake up the module. When it broadcasts "on", it means waking up the module. Taking tracking red as an example, you can then say "Start tracking red" to it, and ROSMASTER will broadcast "Okay, start tracking red". Then, we release the controller's control of ROSMASTER by pressing the R2 key of the controller, and ROSMASTER begins to track red. If there is no remote control, you can also enter the following command through the terminal,

xxxxxxxxxxrostopic pub /JoyState std_msgs/Bool False

If you want to cancel the color tracking function, say "cancel tracking" to ROSMASTER, ROSMASTER stops, and the voice will broadcast "OK, cancel tracking".

6.2.3. Color calibration

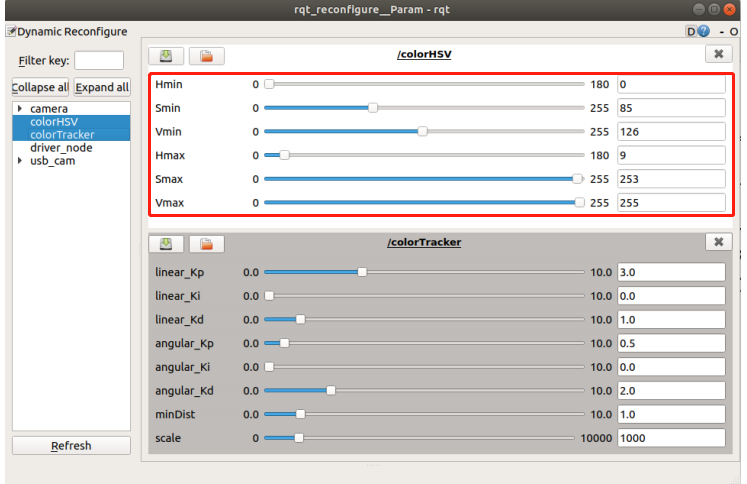

The camera is very sensitive to light, so sometimes the color recognition will be inaccurate. In this case, the red, green, yellow, and blue colors need to be recalibrated. Terminal input,

xxxxxxxxxxrosrun rqt_reconfigure rqt_reconfigure

Find the colorHSV column and drag the slider to modify the HSV value.

Open the voice_Ctrl_color_tracker.py program and find the following sections,

xxxxxxxxxxif command_result == 73 : self.model = "color_follow_line" print("tracker red") self.hsv_range = [(0, 185, 175), (180, 253, 255)]elif command_result == 74 : self.model = "color_follow_line" print("tracker green") self.hsv_range = [(54, 92, 75), (125, 255, 255)]elif command_result == 75 : self.model = "color_follow_line" print("tracker bule") self.hsv_range = [(55, 204, 177), (125, 253, 255)]elif command_result == 72 : self.model = "color_follow_line" print("tracker yellow") self.hsv_range = [(18, 128, 168), (125, 253, 255)]Modify the calibrated HSV value just recorded to the position of the corresponding color, save it, and use the calibrated value the next time you start it.

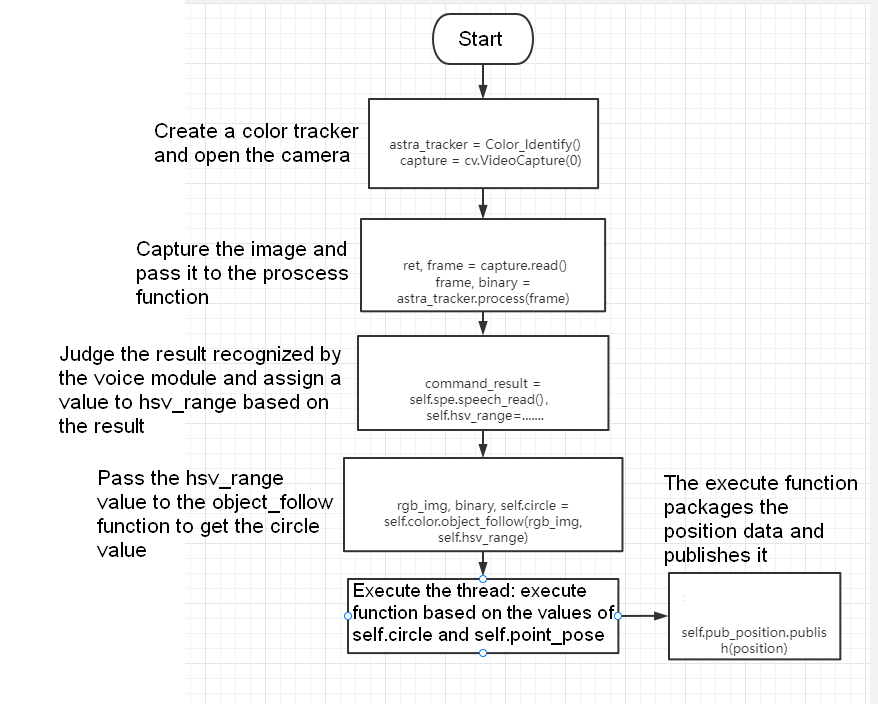

6.3. Core code analysis voice_Ctrl_color_tracker.py

xxxxxxxxxxcommand_result = self.spe.speech_read() self.spe.void_write(command_result) if command_result == 73 : self.model = "color_follow_line" print("tracker red") self.hsv_range = [(20, 215, 111), (180, 253, 255)] self.dyn_update = True elif command_result == 74 : self.model = "color_follow_line" print("tracker green") self.hsv_range = [(44, 138, 91), (84, 255, 255)] self.dyn_update = True elif command_result == 75 : self.model = "color_follow_line" print("tracker bule") self.hsv_range = [(83, 217, 196), (141, 253, 255)] self.dyn_update = True elif command_result == 72 : self.model = "color_follow_line" print("tracker yellow") self.hsv_range = [(18, 55, 187), (81, 253, 255)] self.dyn_update = True elif command_result == 76 : self.model = "Stop" #self.ros_ctrl.Joy_active == False #self.ros_ctrl.pub_cmdVel.publish(Twist()) self.command_result = 999 if self.dyn_update == True : params = {'Hmin': self.hsv_range[0][0], 'Hmax': self.hsv_range[1][0], 'Smin': self.hsv_range[0][1], 'Smax': self.hsv_range[1][1], 'Vmin': self.hsv_range[0][2], 'Vmax': self.hsv_range[1][2]} self.dyn_client.update_configuration(params) self.dyn_update = False if self.model == "color_follow_line": self.ros_ctrl.Joy_active == False #self.model == "General" rgb_img, binary, self.circle = self.color.object_follow(rgb_img, self.hsv_range) if self.ros_ctrl.Joy_active == False : if self.circle[2] != 0: threading.Thread( target=self.execute, args=(self.circle[0], self.circle[1], self.circle[2])).start() if self.point_pose[0] != 0 and self.point_pose[1] != 0: threading.Thread( target=self.execute, args=(self.point_pose[0], self.point_pose[1], self.point_pose[2])).start() #threading.Thread(target=self.execute, args=(self.circle[0], self.circle[2])).start() return rgb_img, binarydef execute(self, x, y, z):position = Position()position.angleX = xposition.angleY = yposition.distance = zself.pub_position.publish(position)6.3.1. Program flow chart

For complete code, please refer to:

xxxxxxxxxx~/yahboomcar_ws/src/yahboomcar_voice_ctrl/scripts/voice_Ctrl_color_tracker.py6.4. Function module communication table

| Function words | Speech recognition module results | Voice broadcast content |

|---|---|---|

| Start tracking yellow | 72 | OK, start tracking yellow |

| Start tracking red | 73 | OK, start tracking red |

| Start tracking green | 74 | OK, start tracking green |

| Start tracking blue | 75 | OK, start tracking blue |

| Cancel Tracking | 76 | OK, Cancel Tracking |