Gesture Recognition

1. Gesture recognition instructions

The gesture recognition in this routine is based on a service of the Baidu Intelligent Cloud Platform. The service provides 50,000 free calls per day. It is intended for learning only, not for commercial use. For long-term use, please purchase the relevant service. Our company assumes no responsibility.

Request API description. Official API reference: https://cloud.baidu.com/doc/BODY/s/ajwvxyyn0. Open the official API page in a browser and log in to your Baidu account in the upper-right corner. If you do not have a Baidu account, register one first.

After logging in, click ‘Products’ - ‘Artificial Intelligence’ - ‘Face and Human Body Recognition’ - ‘Human Body Analysis’ in the menu bar.

Click ‘Gesture Recognition’ in the product list.

Click ‘Use Now’.

You are then taken automatically to the Human Body Analysis management console. Click ‘Create Application’.

Fill in the required information and click ‘Create Now’. When creation is complete, open the application list to view the application you just created. Copy and save its AppID, API Key and Secret Key.

Note: You need to claim the free recognition quota here. When claiming it, you can select all of the functions.

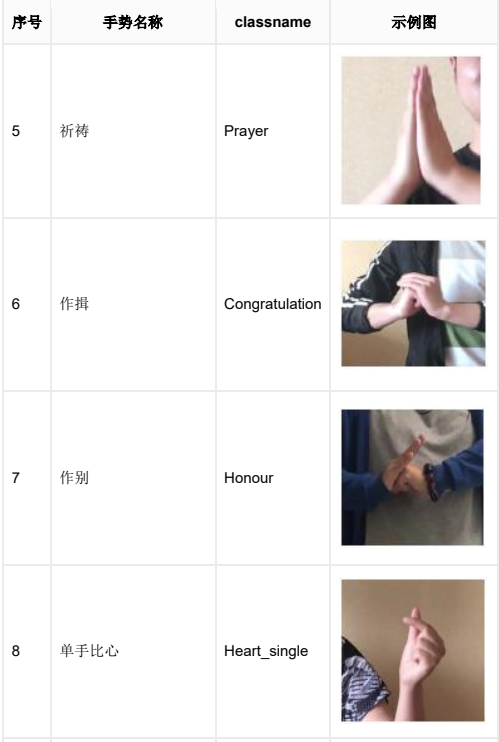

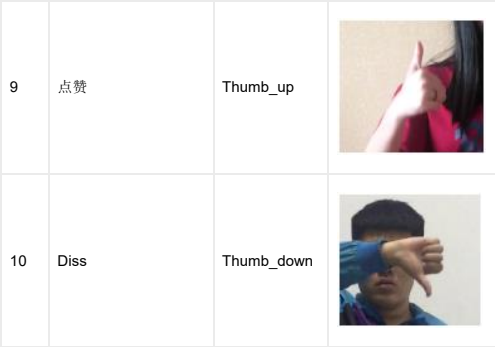

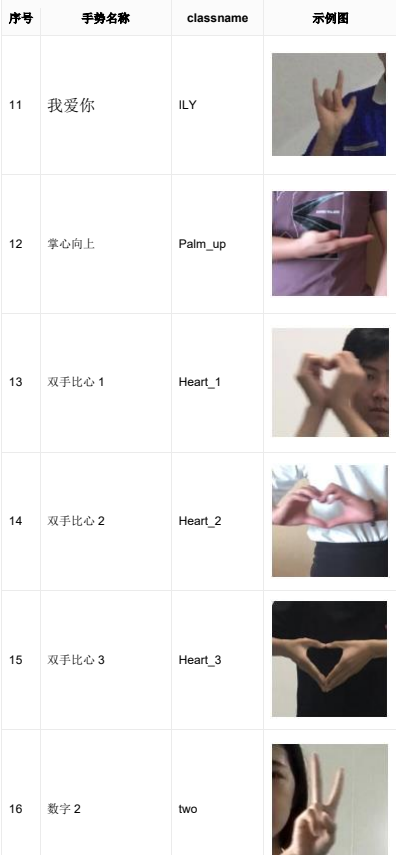

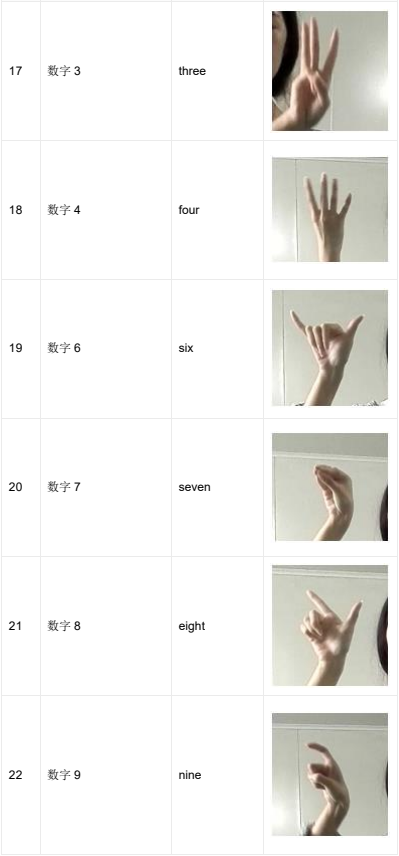

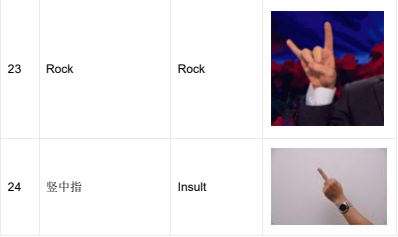
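The API Key and Secret Key copied above are what the SDK exchanges for an access token behind the scenes. The sketch below builds the underlying REST requests without sending them, so you can see the flow.

It rests on these assumptions:
- The endpoint URLs and the form-encoded, base64 `image` field follow Baidu's published REST documentation. Check them against the official API page before relying on them.
- The helper names `build_token_request` and `build_gesture_request` are hypothetical.

```python
import base64
import urllib.parse
import urllib.request

# Endpoints as described in Baidu's REST docs (assumption: verify on the official API page)
TOKEN_URL = "https://aip.baidubce.com/oauth/2.0/token"
GESTURE_URL = "https://aip.baidubce.com/rest/2.0/image-classify/v1/gesture"


def build_token_request(api_key: str, secret_key: str) -> urllib.request.Request:
    """Build (but do not send) the request that trades API Key + Secret Key for an access token."""
    query = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": api_key,
        "client_secret": secret_key,
    })
    return urllib.request.Request(f"{TOKEN_URL}?{query}", method="POST")


def build_gesture_request(access_token: str, jpeg_bytes: bytes) -> urllib.request.Request:
    """Build (but do not send) one gesture recognition request for a JPEG image."""
    url = f"{GESTURE_URL}?{urllib.parse.urlencode({'access_token': access_token})}"
    # The image travels base64-encoded inside a form-encoded body
    body = urllib.parse.urlencode({"image": base64.b64encode(jpeg_bytes).decode("ascii")})
    return urllib.request.Request(
        url,
        data=body.encode("ascii"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Inspect the token request; sending it would be urllib.request.urlopen(req)
req = build_token_request("Your Api Key", "Your Secret Key")
print(req.get_method(), req.full_url)
```

In the routine itself, `AipBodyAnalysis` performs these steps for you. The sketch is only meant to make the role of each key visible.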

Gestures supported by the gesture recognition API, with example images:

API functions

```python
from aip import AipBodyAnalysis

# Your APPID, AK (API Key) and SK (Secret Key)
APP_ID = 'Your App ID'
API_KEY = 'Your Api Key'
SECRET_KEY = 'Your Secret Key'

client = AipBodyAnalysis(APP_ID, API_KEY, SECRET_KEY)

# Read an image file as raw bytes
def get_file_content(filePath):
    with open(filePath, 'rb') as fp:
        return fp.read()

image = get_file_content('example.jpg')

# Call gesture recognition
res = client.gesture(image)
```
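The SDK call `client.gesture(image)` returns a dict. When a call fails, for example because the daily quota is used up or the keys are wrong, the Baidu AI APIs report this with `error_code` and `error_msg` fields rather than a `result` list.

Below is a minimal check that surfaces such errors instead of silently treating them as "nothing recognized". Two things are assumed here:
- Errors take this dict shape.
- The helper name `check_response` is hypothetical.

```python
def check_response(resp: dict) -> dict:
    """Return resp unchanged, or raise if it carries a Baidu API error.

    Assumption: failed calls come back as a dict containing 'error_code'
    and 'error_msg' instead of 'result'.
    """
    if "error_code" in resp:
        raise RuntimeError(f"Baidu API error {resp['error_code']}: {resp.get('error_msg', '')}")
    return resp


# Usage with the SDK client above: res = check_response(client.gesture(image))
print(check_response({"log_id": 1, "result_num": 0, "result": []}))
```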

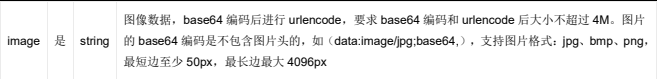

Gesture recognition response parameters:

Example gesture recognition response:

```json
{
  "log_id": 4466502370458351471,
  "result_num": 2,
  "result": [
    {
      "probability": 0.9844077229499817,
      "top": 20,
      "height": 156,
      "classname": "Face",
      "width": 116,
      "left": 173
    },
    {
      "probability": 0.4679304957389832,
      "top": 157,
      "height": 106,
      "classname": "Heart_2",
      "width": 177,
      "left": 183
    }
  ]
}
```
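In the example response above, the first entry is a detected `Face` and the actual gesture (`Heart_2`) comes second, with a lower probability. Code that only reads `result[0]` will therefore report the face whenever one is in view.

The sketch below picks the most probable detection while skipping `Face`, and returns its class name, probability and bounding box (`left`, `top`, `width`, `height`). Two things are assumptions:
- Ignoring `Face` is a choice for a gesture-only display, not something the API requires.
- The helper `best_gesture` is hypothetical.

```python
import json

# The example response above as JSON text (the Python SDK already returns a dict)
EXAMPLE = '''{"log_id": 4466502370458351471, "result_num": 2, "result": [
  {"probability": 0.9844077229499817, "top": 20, "height": 156, "classname": "Face", "width": 116, "left": 173},
  {"probability": 0.4679304957389832, "top": 157, "height": 106, "classname": "Heart_2", "width": 177, "left": 183}]}'''


def best_gesture(response: dict, ignore=("Face",)):
    """Return (classname, probability, (left, top, width, height)) of the most
    probable detection whose classname is not in `ignore`, or None if there is none."""
    candidates = [r for r in response.get("result", []) if r["classname"] not in ignore]
    if not candidates:
        return None
    top = max(candidates, key=lambda r: r["probability"])
    return top["classname"], top["probability"], (top["left"], top["top"], top["width"], top["height"])


print(best_gesture(json.loads(EXAMPLE)))
```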

2. Main code

Start Docker

After entering the Raspberry Pi 5 desktop, open a terminal and run the following command to start the container corresponding to Dofbot:

```bash
./Docker_Ros.sh
```

Open Jupyter Lab, which runs inside the Docker container, in a browser. Replace IP with the Raspberry Pi's IP address:

```
IP:9999    // Example: 192.168.1.11:9999
```

Code path: `/root/Dofbot/6.AI_Visuall/4.Gesture recognition.ipynb`

Import the Baidu API. Most importantly, replace the keys with the AppID, API Key and Secret Key you applied for.

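```python
import cv2
import time
import demjson
import pygame
from aip import AipBodyAnalysis
from aip import AipSpeech
from PIL import Image, ImageDraw, ImageFont
import numpy
import ipywidgets.widgets as widgets

# For the meaning of each gesture, see the official documentation: https://ai.baidu.com/ai-doc/BODY/4k3cpywrv
hand = {'One': 'Number 1', 'Two': 'Number 2', 'Three': 'Number 3', 'Four': 'Number 4',
        'Five': 'Number 5', 'Six': 'Number 6', 'Seven': 'Number 7', 'Eight': 'Number 8',
        'Nine': 'Number 9', 'Fist': 'Fist', 'Ok': 'OK', 'Prayer': 'Prayer',
        'Congratulation': 'Bow', 'Honour': 'Farewell', 'Heart_single': 'Single-hand heart',
        'Thumb_up': 'Like', 'Thumb_down': 'Diss', 'ILY': 'I love you', 'Palm_up': 'Palm up',
        'Heart_1': 'Hands to heart 1', 'Heart_2': 'Hands to heart 2', 'Heart_3': 'Hands to heart 3',
        'Rock': 'Rock', 'Insult': 'Middle finger', 'Face': 'Face'}

# Replace the following keys with your own (Human Body Analysis AppID, API Key, Secret Key)
APP_ID = 'Your App ID'
API_KEY = 'Your Api Key'
SECRET_KEY = 'Your Secret Key'

client = AipBodyAnalysis(APP_ID, API_KEY, SECRET_KEY)

# Helpers used by the cells below. These definitions are assumptions; the notebook may ship its own.
def bgr8_to_jpeg(value, quality=75):
    """Encode a BGR OpenCV image as JPEG bytes (for the ipywidgets Image and the API)."""
    return bytes(cv2.imencode('.jpg', value, [int(cv2.IMWRITE_JPEG_QUALITY), quality])[1])

def cv2ImgAddText(img, text, left, top, textColor=(0, 255, 0), textSize=20):
    """Draw text on a BGR image using PIL; textColor is interpreted as RGB."""
    pil_img = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
    draw = ImageDraw.Draw(pil_img)
    try:
        font = ImageFont.truetype('simhei.ttf', textSize, encoding='utf-8')  # font file is an assumption
    except OSError:
        font = ImageFont.load_default()
    draw.text((left, top), text, fill=textColor, font=font)
    return cv2.cvtColor(numpy.asarray(pil_img), cv2.COLOR_RGB2BGR)

g_camera = cv2.VideoCapture(0)
g_camera.set(cv2.CAP_PROP_FRAME_WIDTH, 640)
g_camera.set(cv2.CAP_PROP_FRAME_HEIGHT, 480)
g_camera.set(cv2.CAP_PROP_FPS, 30)  # Set frame rate
g_camera.set(cv2.CAP_PROP_FOURCC, cv2.VideoWriter.fourcc('M', 'J', 'P', 'G'))
g_camera.set(cv2.CAP_PROP_BRIGHTNESS, 40)  # Set brightness (range -64 to 64; 0.0)
g_camera.set(cv2.CAP_PROP_CONTRAST, 50)    # Set contrast (range -64 to 64; 2.0)
g_camera.set(cv2.CAP_PROP_EXPOSURE, 156)   # Set exposure (range 1.0 to 5000; 156.0)

ret, frame = g_camera.read()
```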
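The cell above hard-codes the AppID, API Key and Secret Key. One common alternative is to read them from environment variables, which keeps them out of the notebook file.

This is a minimal sketch with two assumptions:
- The variable names `BAIDU_APP_ID`, `BAIDU_API_KEY` and `BAIDU_SECRET_KEY` are hypothetical; choose any names you like.
- The helper `load_baidu_credentials` is also hypothetical.

```python
import os


def load_baidu_credentials(prefix: str = "BAIDU_") -> tuple:
    """Return (APP_ID, API_KEY, SECRET_KEY) read from environment variables.

    Raises RuntimeError listing every variable that is missing or empty.
    """
    names = ("APP_ID", "API_KEY", "SECRET_KEY")
    missing = [prefix + n for n in names if not os.environ.get(prefix + n)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return tuple(os.environ[prefix + n] for n in names)
```

In Jupyter Lab you could set the variables with the `%env` magic, for example `%env BAIDU_APP_ID=...`. Then replace the three hard-coded assignments with `APP_ID, API_KEY, SECRET_KEY = load_baidu_credentials()` before creating `AipBodyAnalysis`.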

Camera display component

```python
from IPython.display import display

image_widget = widgets.Image(format='jpeg', width=600, height=500)  # Set up the camera display component
display(image_widget)
image_widget.value = bgr8_to_jpeg(frame)
```

Main loop: capture a frame, call gesture recognition and display the result.

```python
# Gestures that are printed and drawn on the image; any other result shows the plain frame
SHOWN_GESTURES = {'Prayer', 'Thumb_up', 'Ok', 'Heart_single', 'Five',
                  'Eight', 'Rock', 'Congratulation', 'Seven', 'Thumb_down'}

try:
    while True:
        # 1. Take a photo
        ret, frame = g_camera.read()
        if not ret:
            print('Failed to read a frame from the camera')
            break
        # image = get_file_content('./image.jpg')  # alternative: recognize a still image

        # 2. Call gesture recognition on the current frame (JPEG bytes).
        #    client.gesture() already returns a dict, so no extra JSON decoding is needed.
        text = client.gesture(bgr8_to_jpeg(frame))
        try:
            res = text['result'][0]['classname']
        except (KeyError, IndexError):
            # Nothing was recognized, or the API returned an error dict
            img = frame
        else:
            if res in SHOWN_GESTURES:
                print('Recognition result: ' + hand[res])
                img = cv2ImgAddText(frame, hand[res], 250, 30, (0, 255, 0), 30)
            else:
                img = frame

        image_widget.value = bgr8_to_jpeg(img)
except KeyboardInterrupt:
    print("Program closed!")
```

After the code block runs, the recognition view appears in the display component and recognized gestures are printed. Note: if nothing is recognized, make sure the code uses the AppID, API Key and Secret Key of your own application.
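The loop above calls the API once per captured frame. Every call consumes one of the 50,000 free daily calls, and the free tier may also limit requests per second.

The sketch below does two things:
- It computes how long the daily quota lasts at a given request rate.
- It provides a small rate limiter so the API is called at most once per interval.

`RateLimiter` and `hours_of_quota` are hypothetical helpers, not part of the Baidu SDK.

```python
import time


class RateLimiter:
    """Allow an action at most once every `min_interval` seconds."""

    def __init__(self, min_interval: float, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock          # injectable clock, handy for testing
        self._last = None           # time of the last allowed action

    def ready(self) -> bool:
        """Return True (and record the time) if enough time has passed since the last allowed call."""
        now = self.clock()
        if self._last is None or now - self._last >= self.min_interval:
            self._last = now
            return True
        return False


def hours_of_quota(daily_calls: int, calls_per_second: float) -> float:
    """How many hours a daily call quota lasts at a constant request rate."""
    return daily_calls / calls_per_second / 3600


print(round(hours_of_quota(50_000, 1.0), 1))  # 13.9
print(round(hours_of_quota(50_000, 5.0), 1))  # 2.8
```

To use it in the main loop, create `limiter = RateLimiter(1.0)` before `while True:`. Then call `client.gesture(...)` only when `limiter.ready()` is true, and keep showing the previous result otherwise.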