Qwen2 model


Demonstration environment

**Development board**: Raspberry Pi 5B

SD (TF) card: 64 GB (16 GB or larger works; the larger the card, the more models you can try)

Raspberry Pi 5B (8G RAM): runs models with 8B parameters or fewer.

Raspberry Pi 5B (4G RAM): runs models with 3B parameters or fewer.

Model scale

| Model | Parameters |
|---|---|
| Qwen2 | 0.5B |
| Qwen2 | 1.5B |
| Qwen2 | 7B |
| Qwen2 | 72B |

Raspberry Pi 5B (8G RAM): tested with Qwen2 models of 7B parameters and below.

Raspberry Pi 5B (4G RAM): tested with Qwen2 models of 1.5B parameters and below.
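If you script the setup, the RAM guidance above can be turned into a small helper. The sketch below is a hypothetical Python helper, not part of Ollama: it reads `MemTotal` from `/proc/meminfo` and maps it to the largest Qwen2 tag this guide tests on each board. The 6 GiB and 3 GiB cut-offs are assumptions, chosen because the kernel reports slightly less memory than the nominal 8 GB or 4 GB.

```python
from typing import Optional


def total_ram_gib(meminfo_path: str = "/proc/meminfo") -> float:
    """Read MemTotal (reported in kB) from /proc/meminfo and convert it to GiB."""
    with open(meminfo_path) as f:
        for line in f:
            if line.startswith("MemTotal:"):
                kib = int(line.split()[1])
                return kib / (1024 * 1024)
    raise RuntimeError("MemTotal not found in " + meminfo_path)


def pick_qwen2_tag(ram_gib: float) -> Optional[str]:
    """Map total RAM to the largest Qwen2 tag this guide tests on that board.

    Assumed thresholds: MemTotal is a little below the nominal size,
    so 6 GiB stands in for "8G" and 3 GiB for "4G".
    """
    if ram_gib >= 6:
        return "qwen2:7b"    # Raspberry Pi 5B (8G RAM)
    if ram_gib >= 3:
        return "qwen2:1.5b"  # Raspberry Pi 5B (4G RAM)
    return None              # smaller boards are not covered by this guide
```

On the board itself, `pick_qwen2_tag(total_ram_gib())` returns the tag to use with the pull and run commands.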

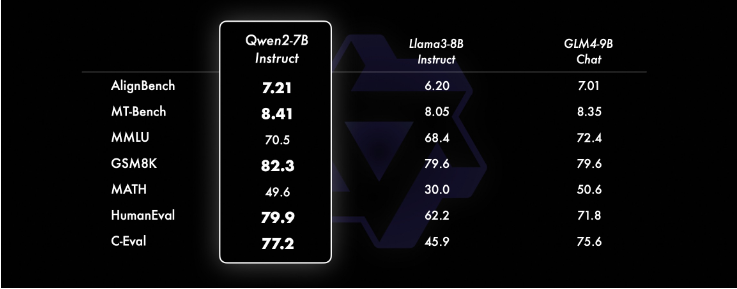

Performance

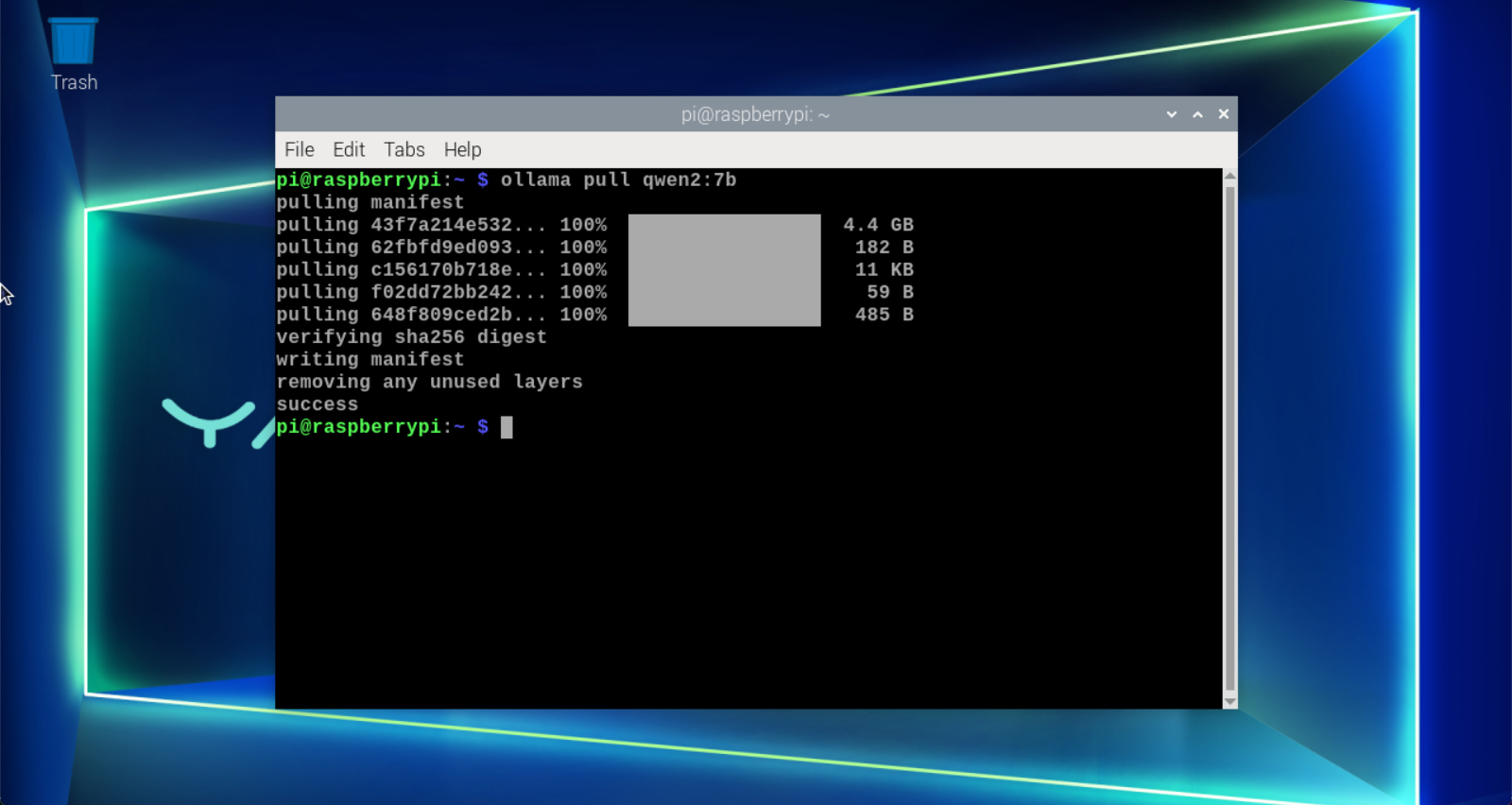

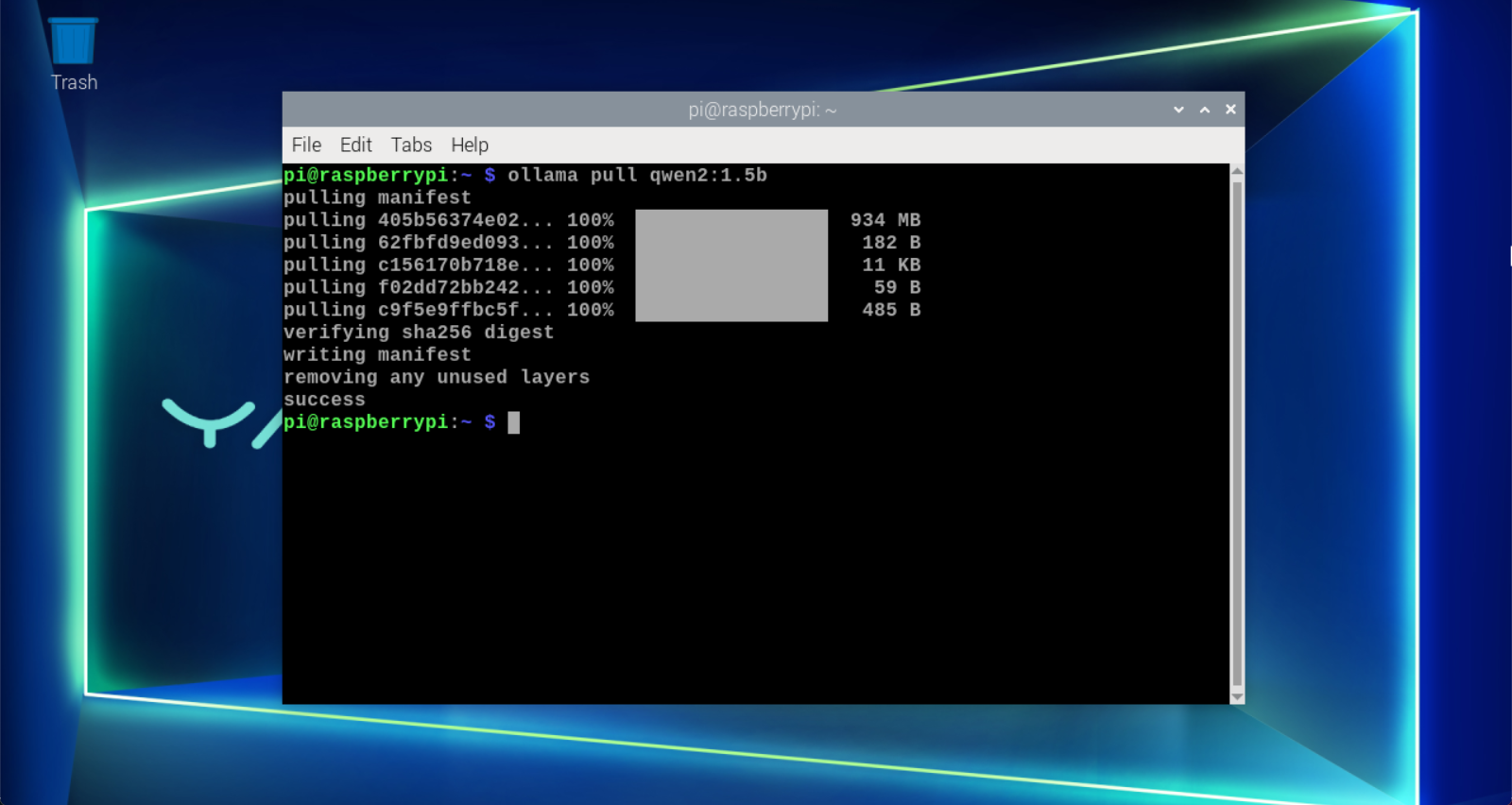

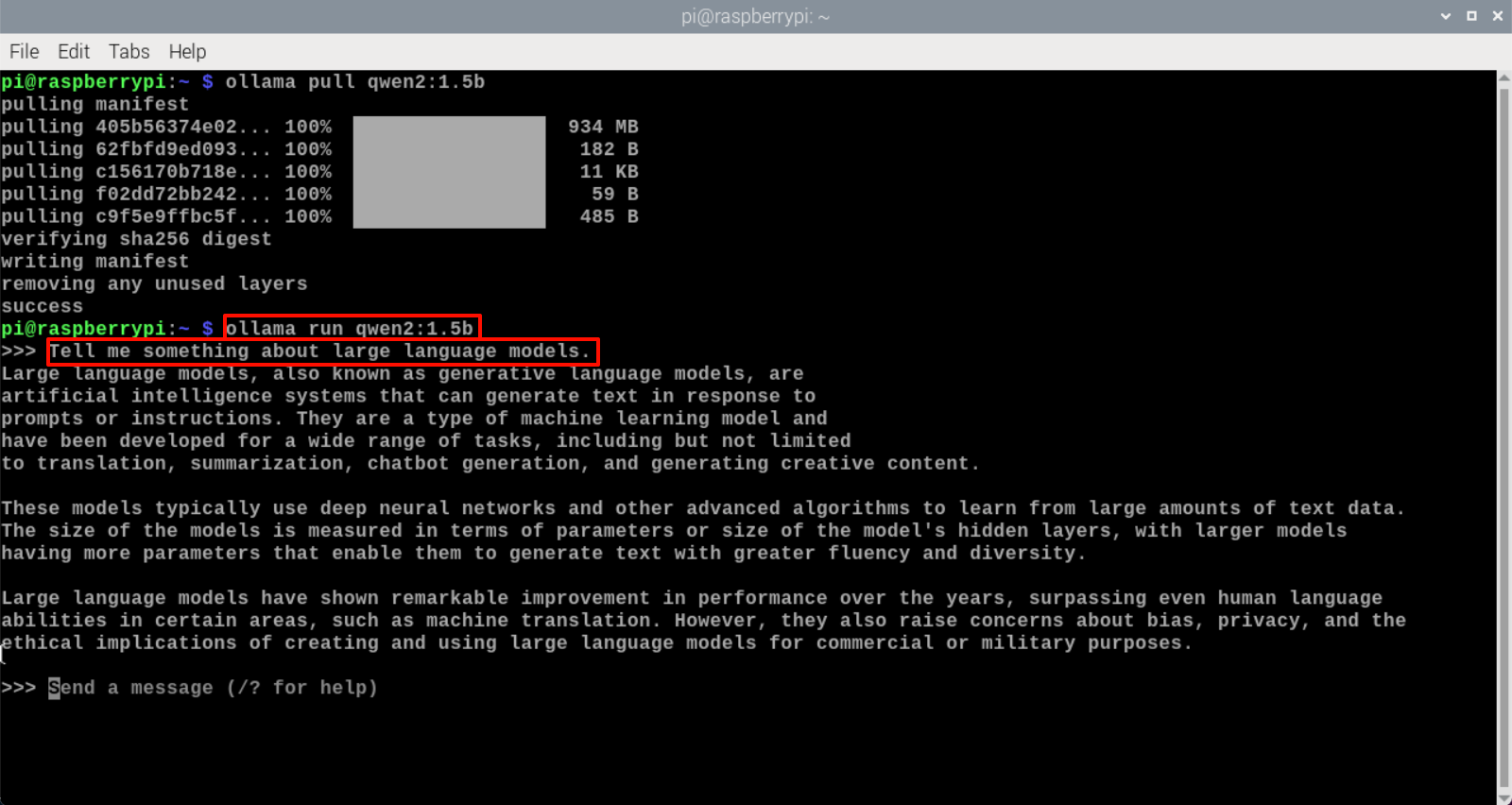
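To compare your own board against the results above, you can measure generation speed yourself. `ollama run qwen2:1.5b --verbose` prints timing statistics after each reply, and the final response of Ollama's REST API carries `eval_count` (tokens generated) and `eval_duration` (in nanoseconds). The sketch below assumes a parsed response dictionary with those fields; the function names are hypothetical.

```python
def tokens_per_second(stats: dict) -> float:
    """Generation speed from the timing fields of a final Ollama API response.

    eval_count    -- number of tokens generated in the reply
    eval_duration -- time spent generating them, in nanoseconds
    """
    # Multiply before dividing so whole-number inputs stay exact where possible.
    return stats["eval_count"] * 1e9 / stats["eval_duration"]


def seconds(stats: dict, field: str = "total_duration") -> float:
    """Convert one of Ollama's nanosecond duration fields to seconds."""
    return stats[field] / 1e9
```

Pass it the JSON of a non-streaming `/api/generate` response, or the last object of a streamed one, where these statistics appear.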

Get Qwen2

The `pull` command downloads the model from the Ollama model library.

Raspberry Pi 5B (8G RAM)

`ollama pull qwen2:7b`

Raspberry Pi 5B (4G RAM)

`ollama pull qwen2:1.5b`
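The same pull can be driven from a script, for example when preparing several boards. This is a minimal Python sketch using only the standard library; it simply shells out to the `ollama` CLI command shown above, and the helper names are hypothetical.

```python
import shutil
import subprocess
from typing import List


def pull_command(tag: str) -> List[str]:
    """Argument list for `ollama pull <tag>`, e.g. "qwen2:7b" or "qwen2:1.5b"."""
    return ["ollama", "pull", tag]


def pull(tag: str) -> None:
    """Download a model with the ollama CLI; raises if the CLI is missing or fails."""
    if shutil.which("ollama") is None:
        raise FileNotFoundError("ollama not found on PATH; install Ollama first")
    subprocess.run(pull_command(tag), check=True)  # CalledProcessError on failure
```

Calling `pull("qwen2:1.5b")` on the 4G board is equivalent to typing the command by hand.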

Use Qwen2

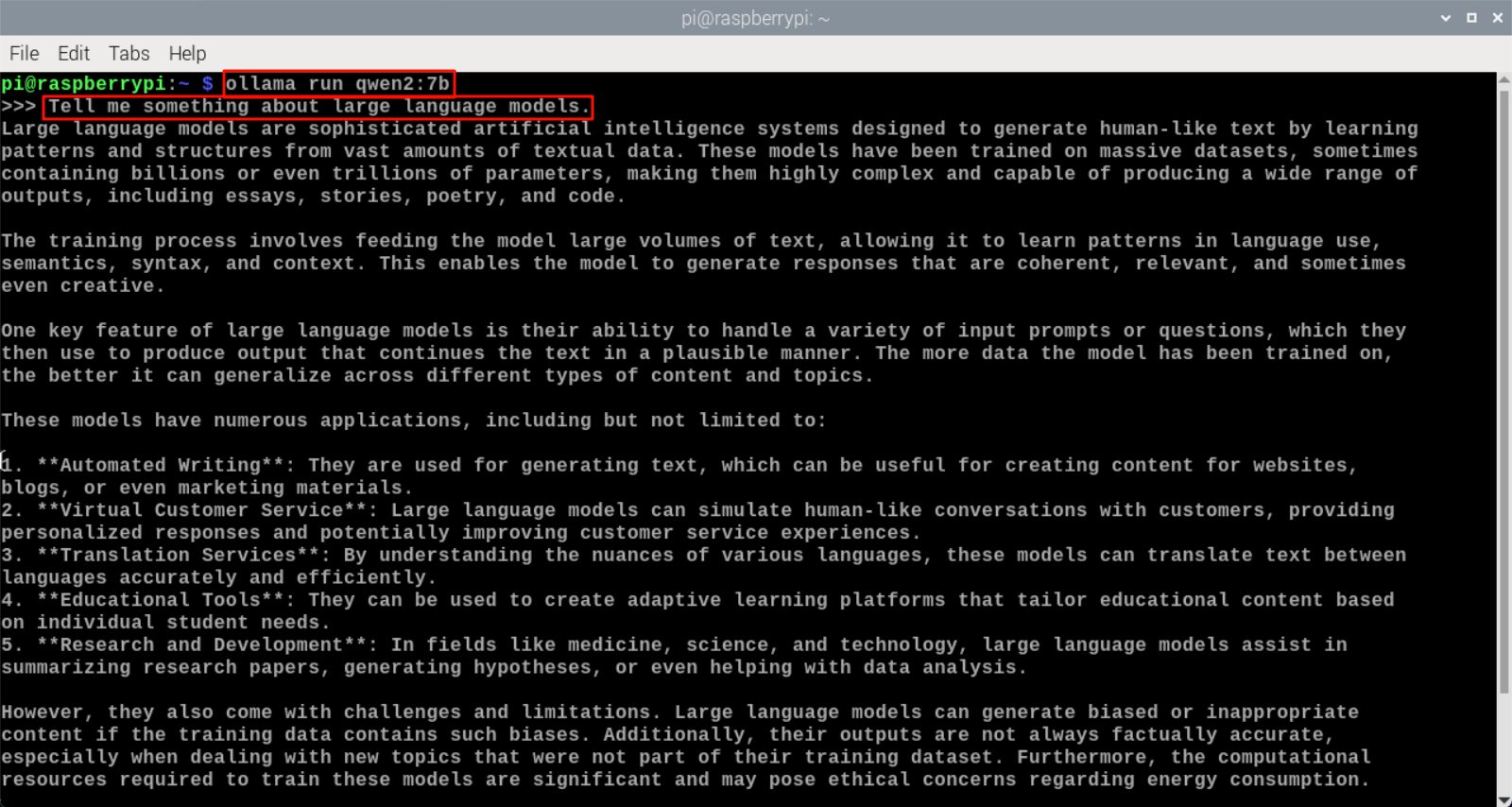

Run Qwen2

Raspberry Pi 5B (8G RAM)

If the model has not been pulled yet, this command downloads Qwen2 7B automatically and then runs it.

`ollama run qwen2:7b`

Raspberry Pi 5B (4G RAM)

If the model has not been pulled yet, this command downloads Qwen2 1.5B automatically and then runs it.

`ollama run qwen2:1.5b`

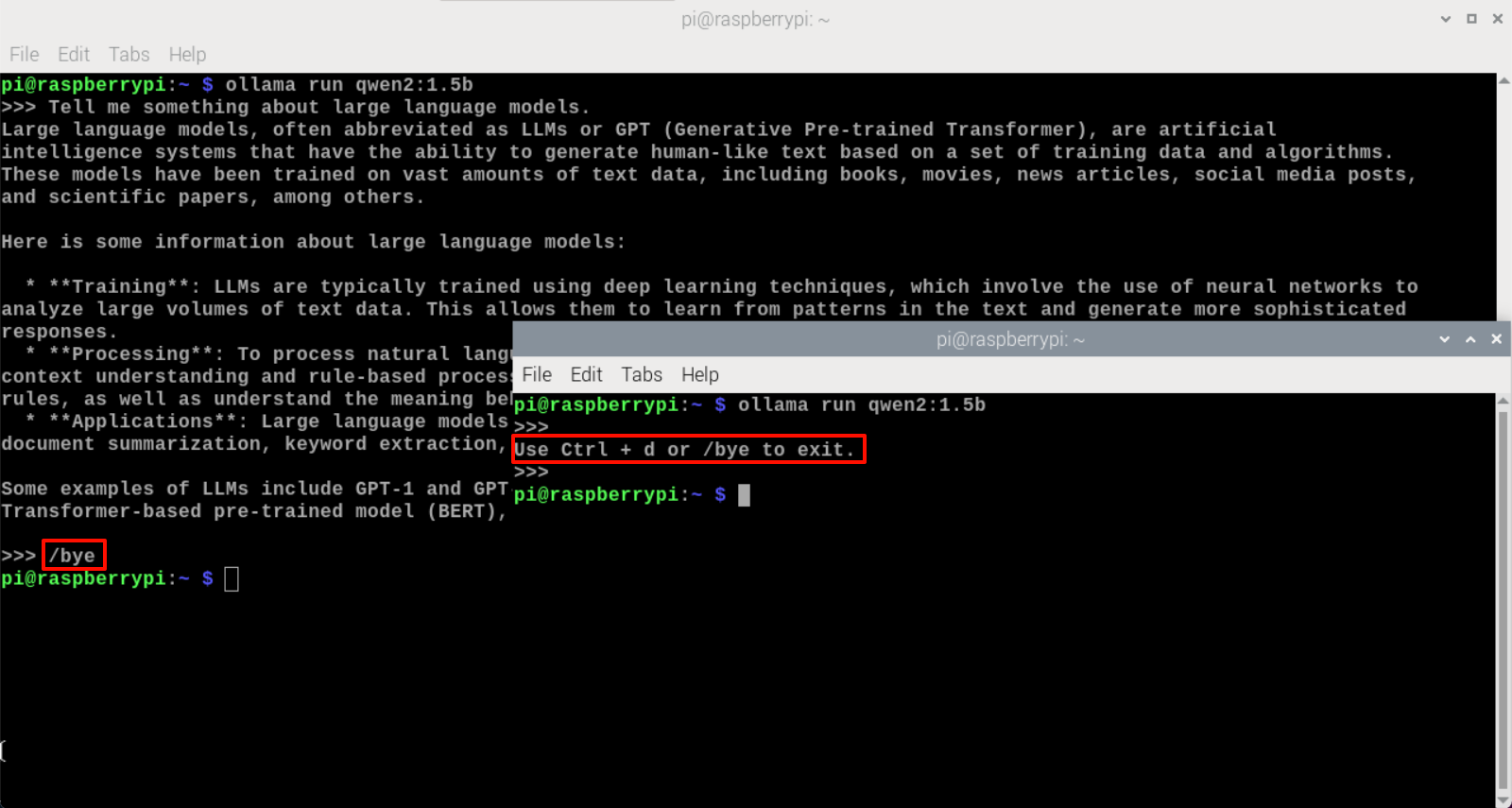
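Besides the interactive `ollama run` session, Ollama exposes a local REST API, by default at `http://localhost:11434`. The sketch below sends a single prompt to its `/api/generate` endpoint with streaming turned off and returns the reply text. It assumes the Ollama server is running (the Linux installer normally sets it up as a background service; otherwise start `ollama serve`) and that the model has already been pulled; the function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """POST request for /api/generate with streaming disabled (one JSON reply)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    return urllib.request.Request(
        OLLAMA_URL + "/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 600.0) -> str:
    """Send one prompt and block until the whole reply has been generated.

    The generous timeout allows for the model being loaded on the first call.
    """
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

For example, `generate("qwen2:1.5b", "Tell me something about large language models.")` returns the same kind of answer the interactive session prints.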

Dialogue

Raspberry Pi 5B (8G RAM)

`Tell me something about large language models.`

Response time depends on the hardware configuration, so please be patient.

Raspberry Pi 5B (4G RAM)

`Tell me something about large language models.`

Response time depends on the hardware configuration, so please be patient.

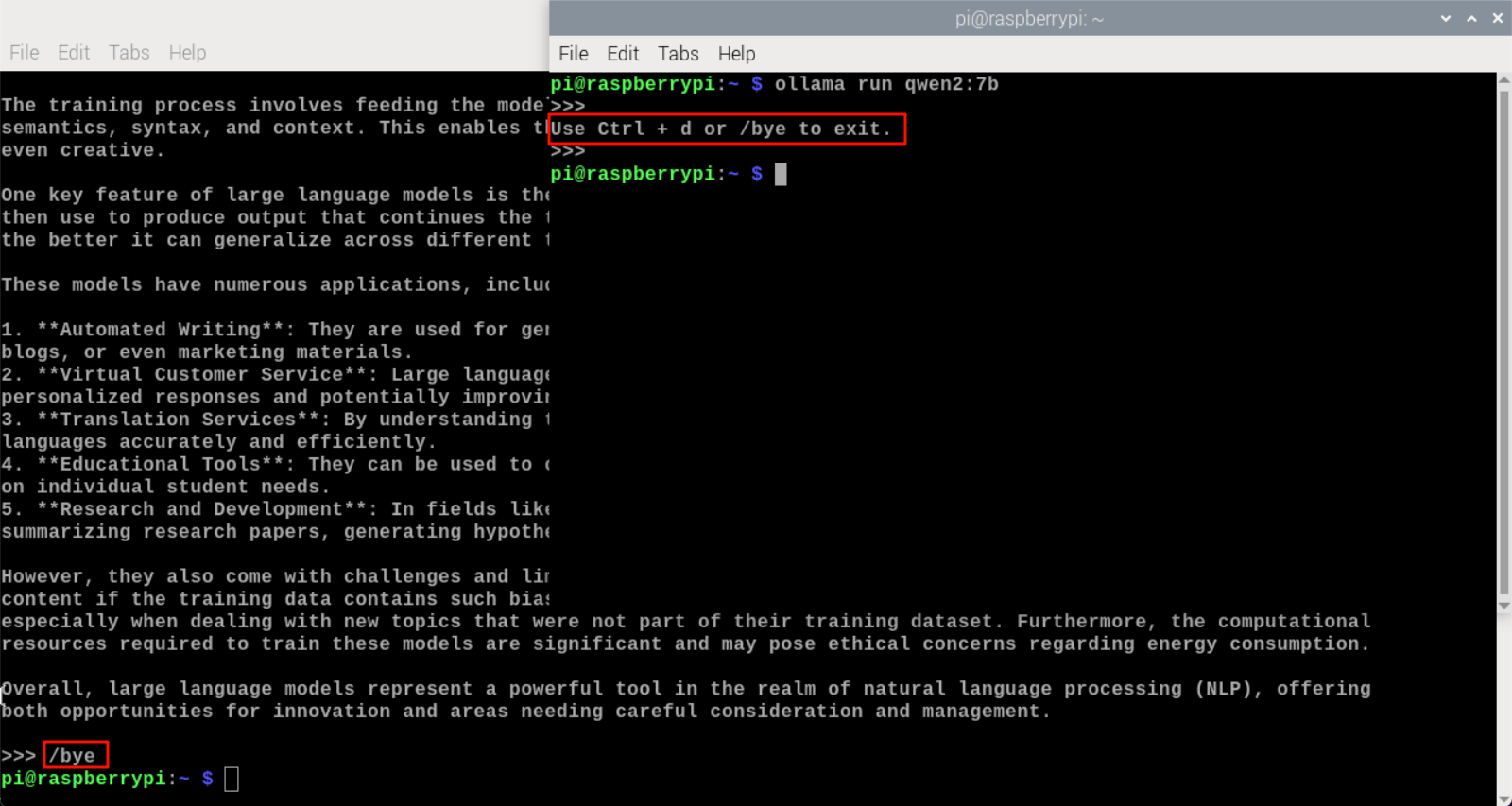
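Because replies on the Raspberry Pi can take a while, it helps to print text as it is generated. The sketch below keeps a conversation history and calls Ollama's `/api/chat` endpoint in streaming mode, where the server sends one JSON object per line until an object with `"done": true` arrives. It assumes a running Ollama server at the default address `http://localhost:11434` and an already pulled model; the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Dict, Iterable, Iterator, List

CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default address (assumed)


def iter_chunks(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield assistant text pieces from Ollama's newline-delimited JSON stream."""
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines between objects
        obj = json.loads(line)
        piece = obj.get("message", {}).get("content", "")
        if piece:
            yield piece
        if obj.get("done"):
            break  # the final object carries timing stats rather than more text


def chat(model: str, history: List[Dict[str, str]], user_text: str) -> str:
    """Send one user turn, print the reply as it streams, and update history."""
    history.append({"role": "user", "content": user_text})
    body = json.dumps({"model": model, "messages": history, "stream": True}).encode()
    req = urllib.request.Request(
        CHAT_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    parts = []
    with urllib.request.urlopen(req, timeout=600) as resp:
        for piece in iter_chunks(resp):  # the response object iterates line by line
            print(piece, end="", flush=True)
            parts.append(piece)
    print()
    reply = "".join(parts)
    history.append({"role": "assistant", "content": reply})
    return reply
```

Start with `history = []` and call `chat("qwen2:1.5b", history, "Tell me something about large language models.")`; follow-up questions reuse the same list so the model sees the earlier turns.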

End conversation

To end the conversation, press Ctrl+D or type `/bye`.

Raspberry Pi 5B (8G RAM)

Raspberry Pi 5B (4G RAM)

Reference material

Ollama

Website: https://ollama.com/

GitHub: https://github.com/ollama/ollama

Qwen2

GitHub: https://github.com/QwenLM/Qwen2

Ollama model: https://ollama.com/library/qwen2