Garbage sorting

1. Garbage sorting instructions

The file path for robot arm position calibration is ~/jetcobot_ws/src/jetcobot_color_identify/scripts/XYT_config.txt.

After calibration, restart the program and click the calibration mode button. The program then reads the saved calibration from this file automatically, so you do not need to repeat the calibration.

2. About code

Code paths:

~/jetcobot_ws/src/jetcobot_garbage_yolov5/Garbage_sorting.ipynb

~/jetcobot_ws/src/jetcobot_utils/src/jetcobot_utils/grasp_controller.py

Because the block position detected by the camera may deviate from the block's actual position, offset parameters are used to correct where the robotic arm reaches within the recognition area.

Both garbage sorting and garbage stacking use the type "garbage", so change the offset values in the type == "garbage" branch. The X offset shifts the arm forward and backward, and the Y offset shifts it left and right.

    # Get the XY offset according to the task type
    def grasp_get_offset_xy(self, task, type):
        offset_x = -0.012
        offset_y = 0.0005
        if type == "garbage":
            offset_x = -0.012
            offset_y = 0.002
        elif type == "apriltag":
            offset_x = -0.012
            offset_y = 0.0005
        elif type == "color":
            offset_x = -0.012
            offset_y = 0.0005
        return offset_x, offset_y
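To illustrate how such an offset pair might be used, here is a minimal standalone sketch. It is not the code in grasp_controller.py: `get_offset_xy` restates the values above as a plain function, while `apply_offset` and the sample position are hypothetical. The assumption that the offsets are simply added to the detected X and Y is made for illustration only.

```python
# Standalone sketch; not the actual grasp_controller.py implementation.

# Offset values copied from grasp_get_offset_xy above, keyed by task type.
OFFSETS = {
    "garbage": (-0.012, 0.002),
    "apriltag": (-0.012, 0.0005),
    "color": (-0.012, 0.0005),
}
DEFAULT_OFFSET = (-0.012, 0.0005)


def get_offset_xy(task_type):
    """Return (offset_x, offset_y) for a task type, falling back to the default."""
    return OFFSETS.get(task_type, DEFAULT_OFFSET)


def apply_offset(x, y, task_type):
    """Hypothetical helper: assume the offsets are added to the detected position."""
    offset_x, offset_y = get_offset_xy(task_type)
    return x + offset_x, y + offset_y


if __name__ == "__main__":
    # Sample detected position (made-up values, in the same units as the offsets).
    print(apply_offset(0.200, 0.010, "garbage"))
```

Under that assumption, making offset_x more negative or more positive moves the grasp point along the front-back axis, and changing offset_y moves it along the left-right axis. Which sign corresponds to which direction should be checked on the robot.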

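Coordinates of the garbage areas

If the arm does not place blocks accurately in a garbage area, adjust the corresponding coordinates. The third value in each coordinate list increases by 40 for every additional stacking layer.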

    # Recyclable garbage location
    def goRecyclablePose(self, layer=1):
        layer = self.limit_garbage_layer(layer)
        coords = [-50, -230, 110 + int(layer-1)*40, -180, -2, -43]
        self.go_coords(coords, 3)

    # Hazardous waste location
    def goHazardousWastePose(self, layer=1):
        layer = self.limit_garbage_layer(layer)
        coords = [20, -230, 110 + int(layer-1)*40, -180, -2, -43]
        self.go_coords(coords, 3)

    # Food waste location
    def goFoodWastePose(self, layer=1):
        layer = self.limit_garbage_layer(layer)
        coords = [80, -230, 110 + int(layer-1)*40, -180, -2, -43]
        self.go_coords(coords, 3)

    # Other garbage location
    def goResidualWastePose(self, layer=1):
        layer = self.limit_garbage_layer(layer)
        coords = [145, -230, 120 + int(layer-1)*40, -180, -2, -43]
        self.go_coords(coords, 3)
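The four methods above differ only in their base coordinates, so the layer arithmetic can be previewed without moving the arm. The following is a rough sketch under stated assumptions: the dictionary keys and `drop_coords` are hypothetical names, the six values are assumed to be ordered [x, y, z, rx, ry, rz], and the clamping done by `limit_garbage_layer` is not reproduced because its limits are not shown here.

```python
# Standalone sketch of the drop-off coordinates above; it does not move the arm.

# Layer-1 coordinates copied from the four methods above.
# Assumed order: [x, y, z, rx, ry, rz].
BASE_POSES = {
    "recyclable": [-50, -230, 110, -180, -2, -43],
    "hazardous": [20, -230, 110, -180, -2, -43],
    "food": [80, -230, 110, -180, -2, -43],
    "residual": [145, -230, 120, -180, -2, -43],
}
LAYER_STEP = 40  # increase of the third value per layer, from "(layer-1)*40"


def drop_coords(category, layer=1):
    """Return the target coordinates for a category at a given stack layer."""
    coords = list(BASE_POSES[category])  # copy so the table is not mutated
    coords[2] += (int(layer) - 1) * LAYER_STEP
    return coords


if __name__ == "__main__":
    for layer in (1, 2, 3):
        print(layer, drop_coords("recyclable", layer))
```

For example, layer 2 of the recyclable area gives a third value of 150 (110 + 40). Printing the values this way can help when deciding how far to shift a base coordinate.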

3. Running the program

Start roscore

- If you are using a Jetson Orin NX or Jetson Orin Nano board, first enter the Docker environment with the following command, then run roscore.

      sh ~/start_docker.sh
      roscore

- If you are using a Jetson Nano board, run the following command directly.

      roscore
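Before running the notebook, it can help to confirm that roscore is actually up. The sketch below assumes the ROS master listens on its default port 11311 on localhost, and that the check is run in the same environment as roscore (for example, inside the Docker container). `master_reachable` is a hypothetical helper and is not part of the JetCobot code.

```python
import socket


def master_reachable(host="localhost", port=11311, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    if master_reachable():
        print("Port 11311 is open; roscore appears to be running.")
    else:
        print("Nothing is listening on port 11311; start roscore first.")
```

This only checks that something accepts connections on the port. It does not verify that the listener is a ROS master.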

Start the program

Open the JupyterLab webpage and find the corresponding .ipynb program file (Garbage_sorting.ipynb).

Click the Run button to run the entire code.

4. Experimental operation and results

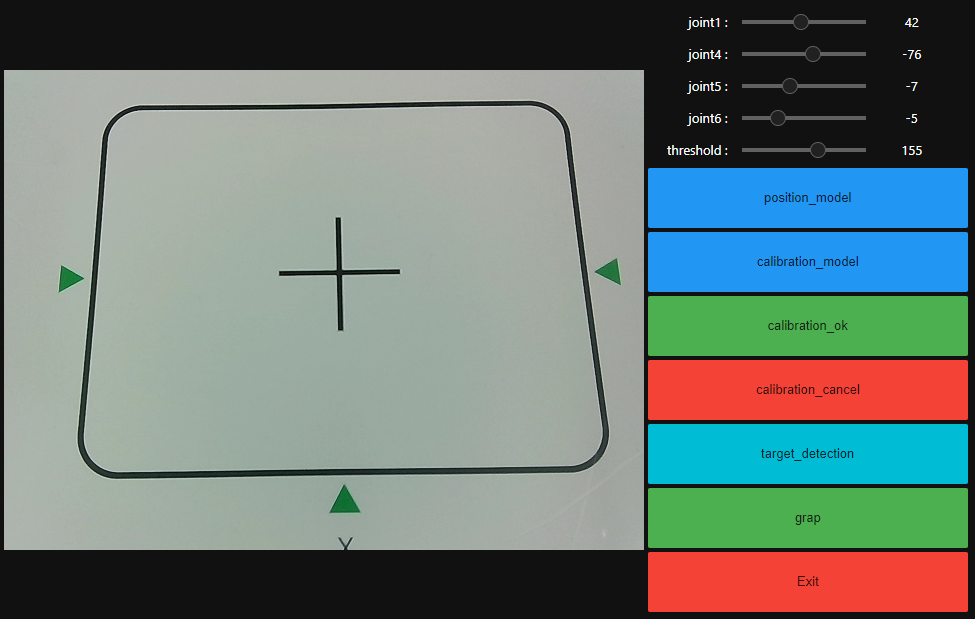

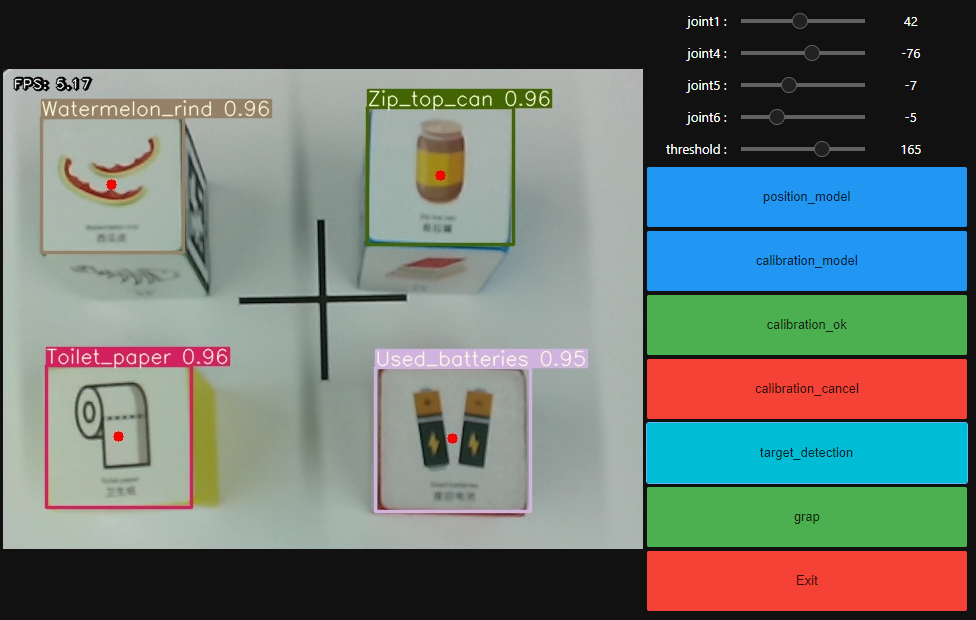

After the program runs, the JupyterLab webpage displays the controls: the camera image is on the left and the related buttons are on the right.

Click the 【position_model】 button, then drag the joint angle sliders above to move the robot arm until the recognition area is in the middle of the image.

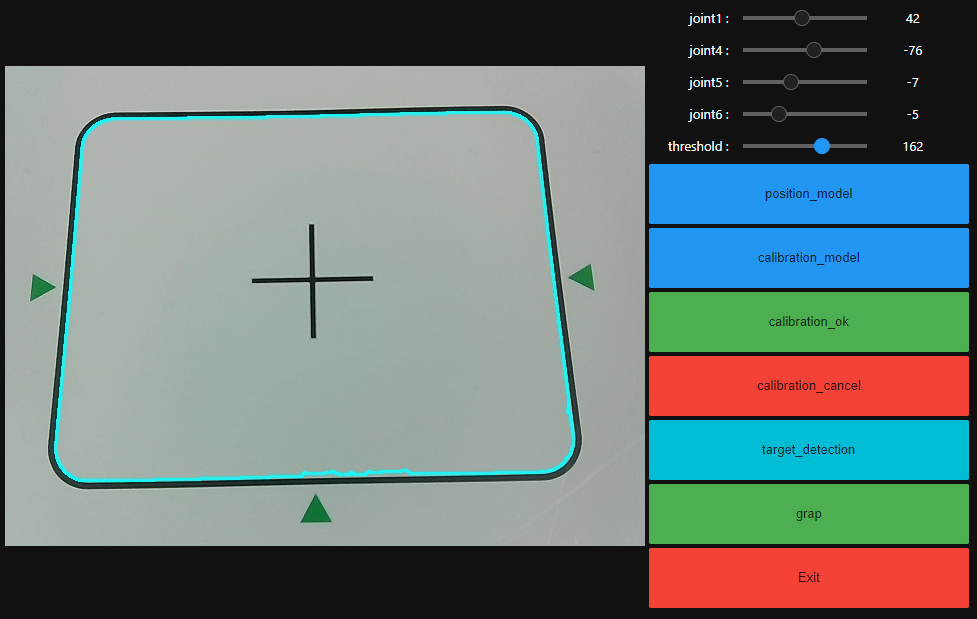

Then click 【calibration_model】 to enter calibration mode, and adjust the robot arm joint sliders and the threshold slider above until the displayed blue line overlaps the black line of the recognition area.

Click 【calibration_ok】 to confirm the calibration.

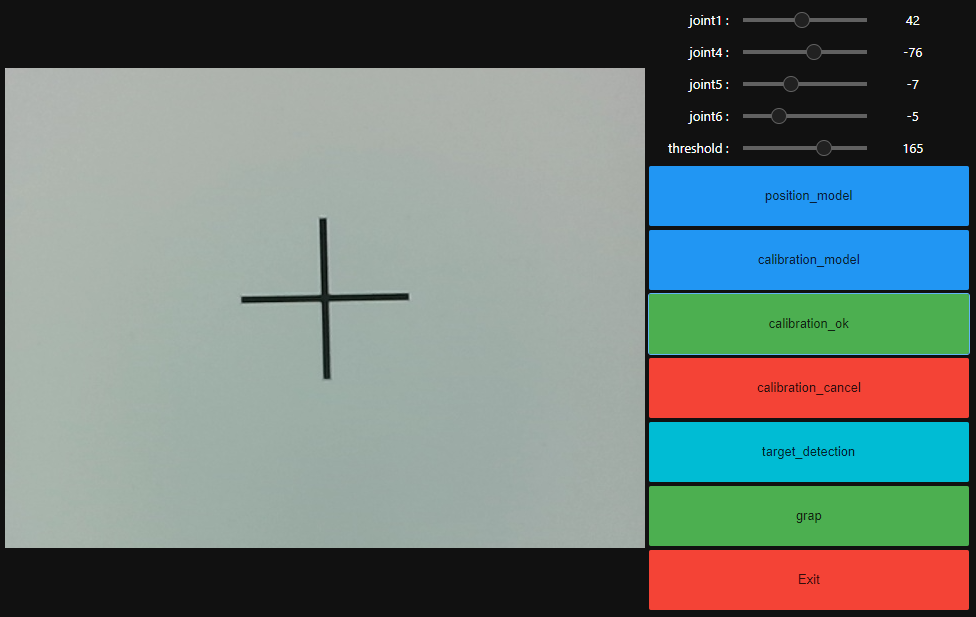

The camera screen will switch to the recognition area perspective.

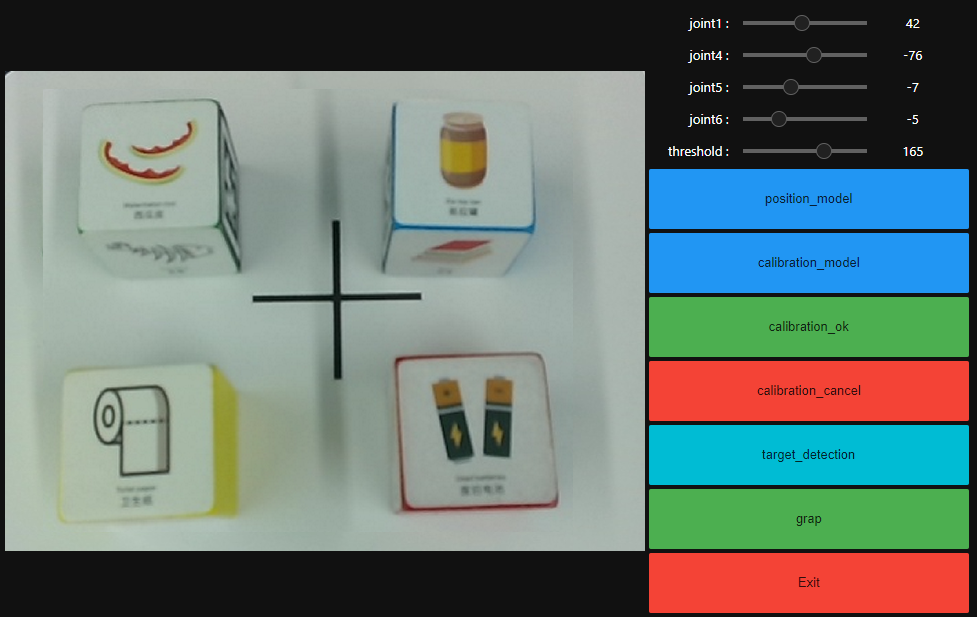

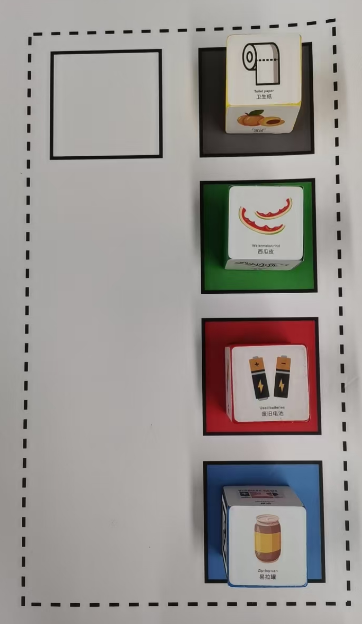

Place the block in the recognition area with the garbage category picture facing up.

Note: The garbage picture must appear the right way up in the camera view, not upside down.

Click 【target_detection】 and wait for the model file to load. Once it has loaded, the program starts identifying garbage names.

Then click the 【grap】 button to start sorting.

The system identifies the garbage name and moves each building block to the garbage area that matches its category.

To exit the program, click the 【Exit】 button.