3. Basic use of PyTorch

The Raspberry Pi board series does not currently support the PyTorch functions.

3.1. About PyTorch

3.1.1. Introduction

PyTorch is an open-source machine learning library for Python, based on Torch, used for applications such as natural language processing and computer vision.

3.1.2. Features

1) Powerful GPU-accelerated tensor computation

2) Deep neural networks built on an automatic differentiation system

3) Dynamic computation graph mechanism
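As a rough illustration of automatic differentiation and the dynamic graph, the minimal sketch below differentiates a small expression. The function y = x^2 + 2x and the input value 3.0 are chosen only for this example.

```python
import torch

# requires_grad=True asks PyTorch to record the operations applied to x.
x = torch.tensor(3.0, requires_grad=True)

# The computation graph is built dynamically as this line executes.
y = x ** 2 + 2 * x

# Backpropagate: computes dy/dx = 2*x + 2 and stores it in x.grad.
y.backward()

print(y.item())       # 15.0
print(x.grad.item())  # 8.0
```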

3.2. Tensors in PyTorch

3.2.1. Tensors

Tensors are the basic computing unit in PyTorch. Like NumPy's ndarray, a tensor represents a multi-dimensional array. The biggest difference is that a PyTorch tensor can run on a GPU, while a NumPy ndarray can only run on the CPU. Running on a GPU can greatly speed up computation.
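The relationship between an ndarray and a tensor can be sketched as follows. This is only an illustration: the array values are made up, and the code falls back to the CPU when no CUDA device is available.

```python
import numpy as np
import torch

arr = np.array([[1.0, 2.0], [3.0, 4.0]], dtype=np.float32)

# from_numpy wraps the ndarray as a CPU tensor (the two share memory).
t = torch.from_numpy(arr)

# Pick the GPU when one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
t_dev = t.to(device)

# The same arithmetic runs on whichever device holds the tensor.
result = (t_dev * 2).cpu().numpy()
print(device, result)
```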

3.2.2. Create tensors

1) There are many ways to create tensors. Calling different APIs creates different types of tensors:

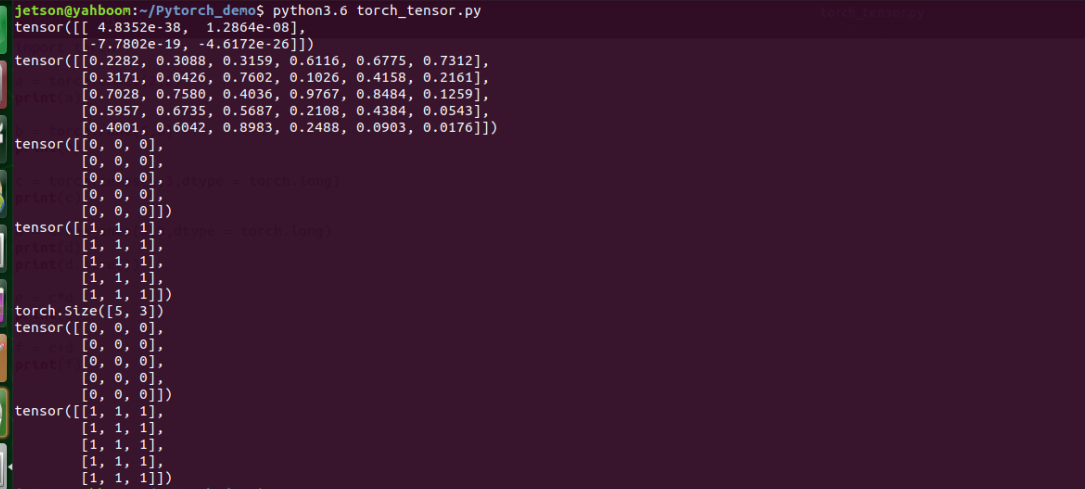

a = torch.empty(2, 2): create an uninitialized 2x2 tensor

b = torch.rand(5, 6): create a 5x6 tensor whose elements are drawn from a uniform distribution on [0, 1)

c = torch.zeros(5, 5, dtype=torch.long): create an all-zero 5x5 tensor and set the element type to long (64-bit integer)

d = c.new_ones(5, 3, dtype=torch.double): create a new all-ones 5x3 tensor d from the existing tensor c; d inherits the properties of c (such as dtype and device) unless they are overridden, as dtype is here

d.size(): get the shape of tensor d
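Put together as a script, the calls listed above might look like the sketch below. The printed shapes and dtypes follow from the arguments, while the values of a and b differ from run to run.

```python
import torch

a = torch.empty(2, 2)                      # uninitialized values
b = torch.rand(5, 6)                       # uniform samples in [0, 1)
c = torch.zeros(5, 5, dtype=torch.long)    # all zeros, int64
d = c.new_ones(5, 3, dtype=torch.double)   # all ones, float64

print(a.shape, b.shape)
print(c.dtype, d.dtype)   # torch.int64 torch.float64
print(d.size())           # torch.Size([5, 3])
```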

2) Operations between tensors

Operations between tensors work like operations between matrices of the same shape. Thanks to the dynamic graph mechanism, mathematical calculations can be performed directly on tensors, for example:

- Add two tensors:

```python
import torch

c = torch.zeros(5, 3, dtype=torch.long)
d = torch.ones(5, 3, dtype=torch.long)
e = c + d   # element-wise sum
print(e)
```

- Multiply two tensors (element by element):

```python
c = torch.zeros(5, 3, dtype=torch.long)
d = torch.ones(5, 3, dtype=torch.long)
e = c * d   # element-wise product, not a matrix product
print(e)
```

For this part of the code, please refer to: /home/yahboom/YBAMR-COBOT-EDU-00001/src/yahboom_navrobo_other/Pytorch/torch_tensor.py

Run the code:

```bash
python torch_tensor.py
```

3.3. Introduction to the torchvision package

3.3.1. torchvision is a library in the PyTorch ecosystem dedicated to image processing. It contains four major modules:

1) torchvision.datasets: loads datasets. PyTorch provides many datasets, such as CIFAR and MNIST, and this module is used to load them. Usage:

```python
cifar_train_data = torchvision.datasets.CIFAR10(root='./data', train=True, download=False, transform=transform)
```

2) torchvision.models: provides well-known models such as VGG and ResNet, optionally with pretrained weights (called without arguments, as below, the model is created with randomly initialized weights). Usage:

```python
import torchvision.models as models
resnet18 = models.resnet18()
```

3) torchvision.transforms: classes for image transformation operations. Usage:

```python
transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
```

4) torchvision.utils: arranges images into a grid. Usage (in newer torchvision releases the range argument is named value_range):

```python
torchvision.utils.make_grid(tensor, nrow=8, padding=2, normalize=False, range=None, scale_each=False, pad_value=0)
```

For more information about the torchvision package, refer to the official documentation: https://pytorch.org/vision/0.8/datasets.html

3.4. Convolutional Neural Network

3.4.1. Neural Network

1) Difference between neural networks and traditional machine learning

Neural networks and traditional machine learning methods are both used for classification tasks. Compared with traditional methods, a neural network extracts features more efficiently, needs less data preprocessing, and requires fewer hand-tuned parameters. In detail:

- Efficiency: the efficiency of a neural network lies in feature extraction. Unlike the hand-designed features of traditional machine learning, its features are learned and "self-corrected" during training. We only need to feed data into the network, and it keeps updating the features.

- Data simplicity: in traditional machine learning, the data must be processed before it is fed in, for example by normalization and format conversion. A neural network needs much less of this processing.

- Fewer parameters to tune: in traditional machine learning, we adjust values such as the penalty factor and slack variables to get the best result. In a neural network, each neuron only has weights w and a bias term b. These values are corrected continuously during training and adjusted toward the values that minimize the error of the model.
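How a weight w and a bias b get corrected during training can be sketched with a single neuron. The toy data (points on the line y = 2x + 1), the learning rate, and the number of steps are assumptions made for this illustration only.

```python
import torch

# Toy data generated from y = 2x + 1 (an assumption made for this sketch).
x = torch.linspace(-1, 1, 20).unsqueeze(1)
y = 2 * x + 1

# One weight and one bias, both starting at zero.
w = torch.zeros(1, requires_grad=True)
b = torch.zeros(1, requires_grad=True)
optimizer = torch.optim.SGD([w, b], lr=0.1)

for step in range(200):
    optimizer.zero_grad()
    loss = ((x * w + b - y) ** 2).mean()  # mean squared error
    loss.backward()                       # gradients of the loss w.r.t. w and b
    optimizer.step()                      # nudge w and b to reduce the error

print(w.item(), b.item())  # approaches 2.0 and 1.0
```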

3.4.2. Convolutional Neural Network

1) Convolution kernel

A convolution kernel can be understood as a feature extractor, or as a filter in the digital signal processing sense. A neural network consists of an input layer, hidden layers, and an output layer. In a convolutional layer, the neurons share the same convolution kernels, which makes it practical to process high-dimensional data such as images. We only need to choose the size, number, and stride (sliding step) of the kernels, and the network learns the kernel values itself during training.
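The kernel size and stride determine the size of each layer's output. The sketch below applies the standard output-size formula, floor((size + 2 * padding - kernel) / stride) + 1, to a 32x32 input with the 5x5 kernels and 2x2 pooling used by the LeNet in section 3.5. The helper name conv_output_size is hypothetical and not part of PyTorch.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling window slides over the input."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32                                      # CIFAR-10 images are 32x32
size = conv_output_size(size, kernel=5)        # conv1, 5x5 kernel -> 28
size = conv_output_size(size, 2, stride=2)     # 2x2 max pooling   -> 14
size = conv_output_size(size, kernel=5)        # conv2, 5x5 kernel -> 10
size = conv_output_size(size, 2, stride=2)     # 2x2 max pooling   -> 5

flattened = 16 * size * size                   # 16 output channels from conv2
print(size, flattened)  # 5 400
```

This is where the 16*5*5 input size of the first fully connected layer in section 3.5 comes from.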

2) Three basic layers of a convolutional neural network:

- Convolution layer

Performs the convolution operation: the kernel slides over the input and, at each position, takes the inner product of the kernel and the kernel-sized patch beneath it, that is, values at the same positions are multiplied and the products are summed. Convolution layers close to the input layer use a small number of kernels, and later layers use more.

- Pooling layer

Compresses the image and reduces the number of parameters by downsampling while keeping the most important features. There are two common pooling methods: MaxPooling (taking the largest value in the sliding window) and AveragePooling (taking the average of all values in the sliding window).

- Flatten layer & Fully Connected layer

This layer mainly flattens and stacks the data. After the pooling layer has compressed the image, the Flatten layer turns the feature maps into a one-dimensional vector. That vector is fed into the Fully Connected layer, and the result is classified using softmax.
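The three layer types can be tried on a tiny input. The following sketch uses a 4x4 single-channel "image" holding the values 0 to 15 and a 3x3 kernel of ones; both are illustrative choices, picked so the results are easy to check by hand.

```python
import torch
import torch.nn.functional as F

# One 4x4 single-channel "image" with values 0..15, shaped (batch, channel, H, W).
x = torch.arange(16, dtype=torch.float32).reshape(1, 1, 4, 4)

# Convolution: a 3x3 kernel of ones sums each 3x3 patch.
kernel = torch.ones(1, 1, 3, 3)
conv = F.conv2d(x, kernel)
print(conv.squeeze().tolist())        # [[45.0, 54.0], [81.0, 90.0]]

# Pooling with a 2x2 window (stride defaults to 2) halves each spatial dimension.
max_pooled = F.max_pool2d(x, 2)
avg_pooled = F.avg_pool2d(x, 2)
print(max_pooled.squeeze().tolist())  # [[5.0, 7.0], [13.0, 15.0]]
print(avg_pooled.squeeze().tolist())  # [[2.5, 4.5], [10.5, 12.5]]

# Flatten, then softmax turns the scores into probabilities that sum to 1.
flat = max_pooled.flatten(start_dim=1)  # shape (1, 4)
probs = F.softmax(flat, dim=1)
print(flat.shape, probs.sum().item())
```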

3.5. Build a LeNet neural network and train it on a data set

3.5.1. Preparation before implementation

1) Environment

The ROSMASTER-jetson development board series all come with the development environment for this project preinstalled, including:

- python 3.6+

- torch 1.8.0

- torchvision 0.9.0

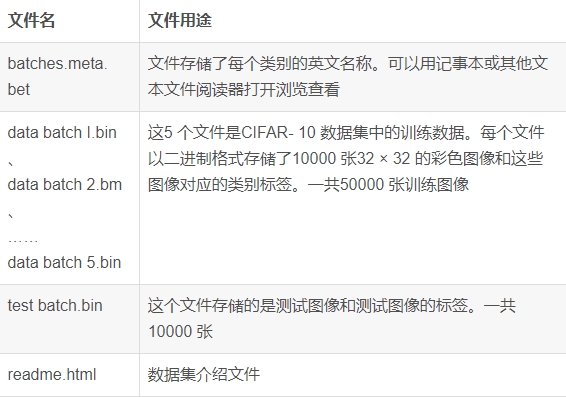

2) Dataset

CIFAR-10: 50,000 training images and 10,000 test images, each 32*32 pixels

Note: The data set is saved in the ~/Pytorch_demo/data/cifar-10-batches-py directory.

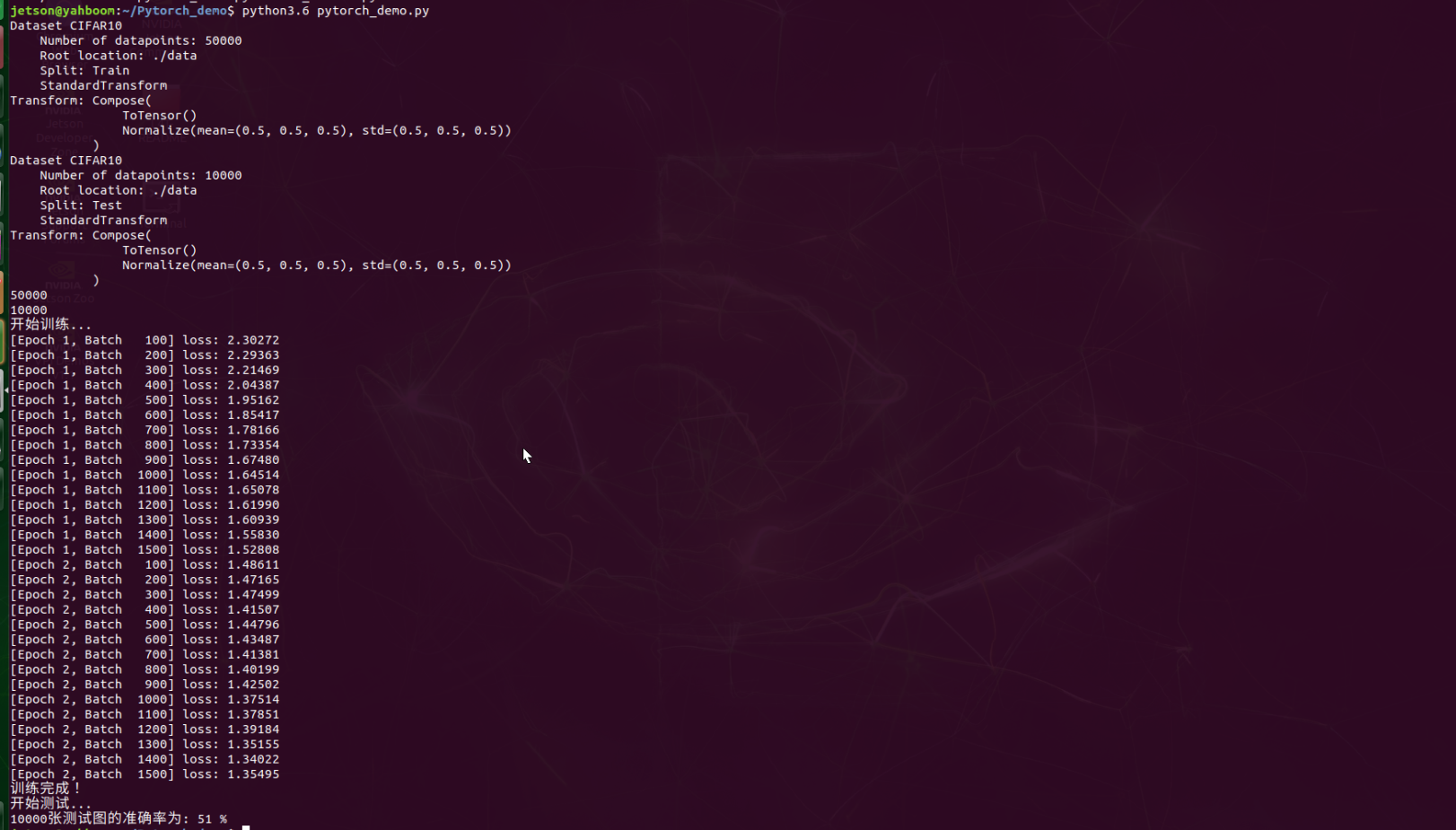

3.5.2. Implementation process

1) Import related modules

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision
import torchvision.transforms as transforms
import torch.optim as optim
```

2) Load the data set

```python
# transform is the transforms.Compose pipeline shown in section 3.3
cifar_train_data = torchvision.datasets.CIFAR10(root='./data', train=True, download=False, transform=transform)
cifar_test_data = torchvision.datasets.CIFAR10(root='./data', train=False, transform=transform)
```

3) Encapsulate the data set

```python
train_data_loader = torch.utils.data.DataLoader(cifar_train_data, batch_size=32, shuffle=True)
test_data_loader = torch.utils.data.DataLoader(cifar_test_data, batch_size=32, shuffle=True)
```

4) Build a convolutional neural network

```python
class LeNet(nn.Module):
    # Define the operators required by the network, such as convolution and fully connected operators
    def __init__(self):
        super(LeNet, self).__init__()
        # Conv2d parameters: number of input channels, number of output channels, kernel size
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16*5*5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)
        self.pool = nn.MaxPool2d(2, 2)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = self.pool(x)
        x = F.relu(self.conv2(x))
        x = self.pool(x)
        x = x.view(-1, 16*5*5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x
```

5) Configure the loss function and optimizer for training

```python
net = LeNet()  # instantiate the network defined above
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.005, momentum=0.9)
```

6) Start training and testing
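The training and testing code itself is in pytorch_demo.py and is not reproduced here. The following is only a minimal sketch of what such a loop commonly looks like, not the contents of that file. It assumes random tensors in place of CIFAR-10 so that it runs without the data set, and the names epochs, train_loader, and test_loader are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset


class LeNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


# ASSUMPTION: random tensors stand in for CIFAR-10 so the sketch runs anywhere.
torch.manual_seed(0)
train_set = TensorDataset(torch.randn(128, 3, 32, 32), torch.randint(0, 10, (128,)))
test_set = TensorDataset(torch.randn(64, 3, 32, 32), torch.randint(0, 10, (64,)))
train_loader = DataLoader(train_set, batch_size=32, shuffle=True)
test_loader = DataLoader(test_set, batch_size=32, shuffle=False)

net = LeNet()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.005, momentum=0.9)

epochs = 2  # hypothetical name for the number of passes over the training data
for epoch in range(epochs):
    net.train()
    running_loss = 0.0
    for inputs, labels in train_loader:
        optimizer.zero_grad()              # clear gradients from the last step
        loss = criterion(net(inputs), labels)
        loss.backward()                    # backpropagate the error
        optimizer.step()                   # update weights and biases
        running_loss += loss.item()
    print(f"epoch {epoch + 1}: mean loss {running_loss / len(train_loader):.3f}")

# Testing: no gradients are needed, just count correct predictions.
net.eval()
correct = 0
with torch.no_grad():
    for inputs, labels in test_loader:
        predicted = net(inputs).argmax(dim=1)
        correct += (predicted == labels).sum().item()
accuracy = correct / len(test_set)
print(f"test accuracy: {accuracy:.2%}")
```

With random labels the accuracy stays near chance (about 10%). On the real CIFAR-10 loaders from step 3, the same loop structure would be expected to learn.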

3.5.3. Run the program

1) Reference code path

```bash
/home/yahboom/YBAMR-COBOT-EDU-00001/src/yahboom_navrobo_other/Pytorch/pytorch_demo.py
```

2) Run the program

```bash
cd /home/yahboom/YBAMR-COBOT-EDU-00001/src/yahboom_navrobo_other/Pytorch
python pytorch_demo.py
```

Only two training epochs are run here. You can change the epoch value to change the number of training passes. In general, more epochs give higher accuracy, up to the point where the model stops improving or starts to overfit.