Ollama


Demonstration environment

Development board: Jetson Orin series motherboard

SSD: 128 GB

Tutorial scope

Development board model: Jetson series, Raspberry Pi 5

Ollama is an open-source tool that simplifies deploying and running large language models, so that users can run high-quality language models in a local environment.

Large Language Model (LLM)

A large language model (LLM) is an advanced text generation system based on artificial intelligence. Its main feature is that it learns the patterns of human language from large-scale training data, which lets it generate natural, fluent text.

Ollama installation

This tutorial demonstrates installing Ollama with the install script on a Jetson Orin series motherboard.

Script installation

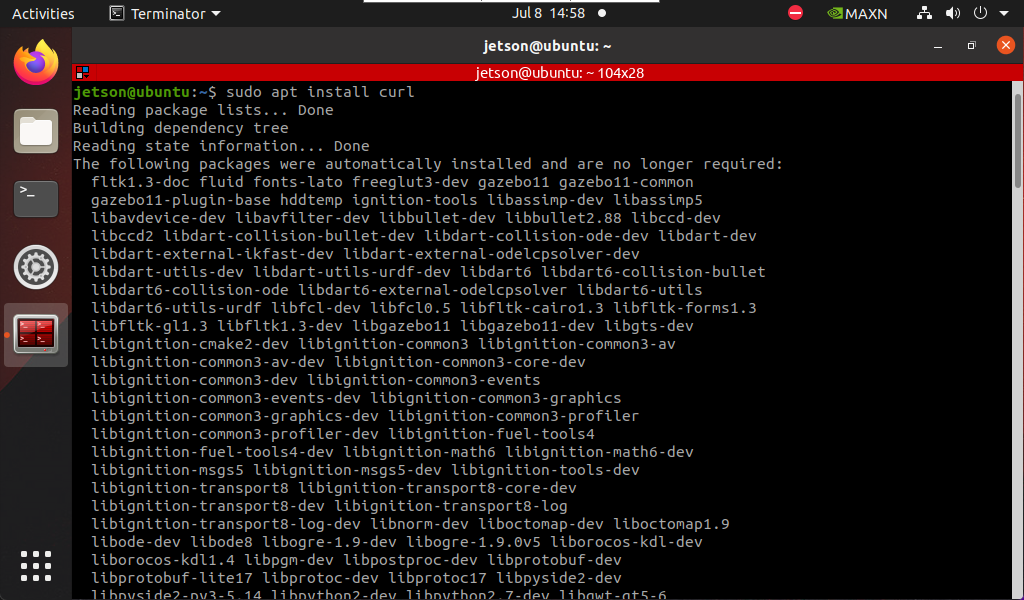

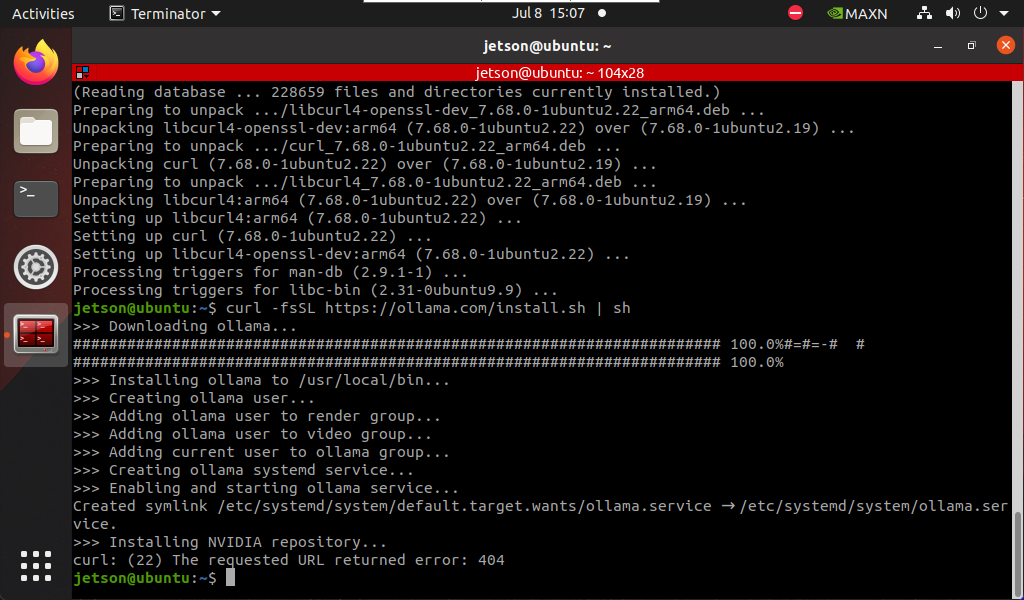

    sudo apt install curl
    curl -fsSL https://ollama.com/install.sh | sh

The entire installation process takes a long time; please wait patiently.

If the message "curl: (22) The requested URL returned error: 404" appears during installation, it can be ignored.

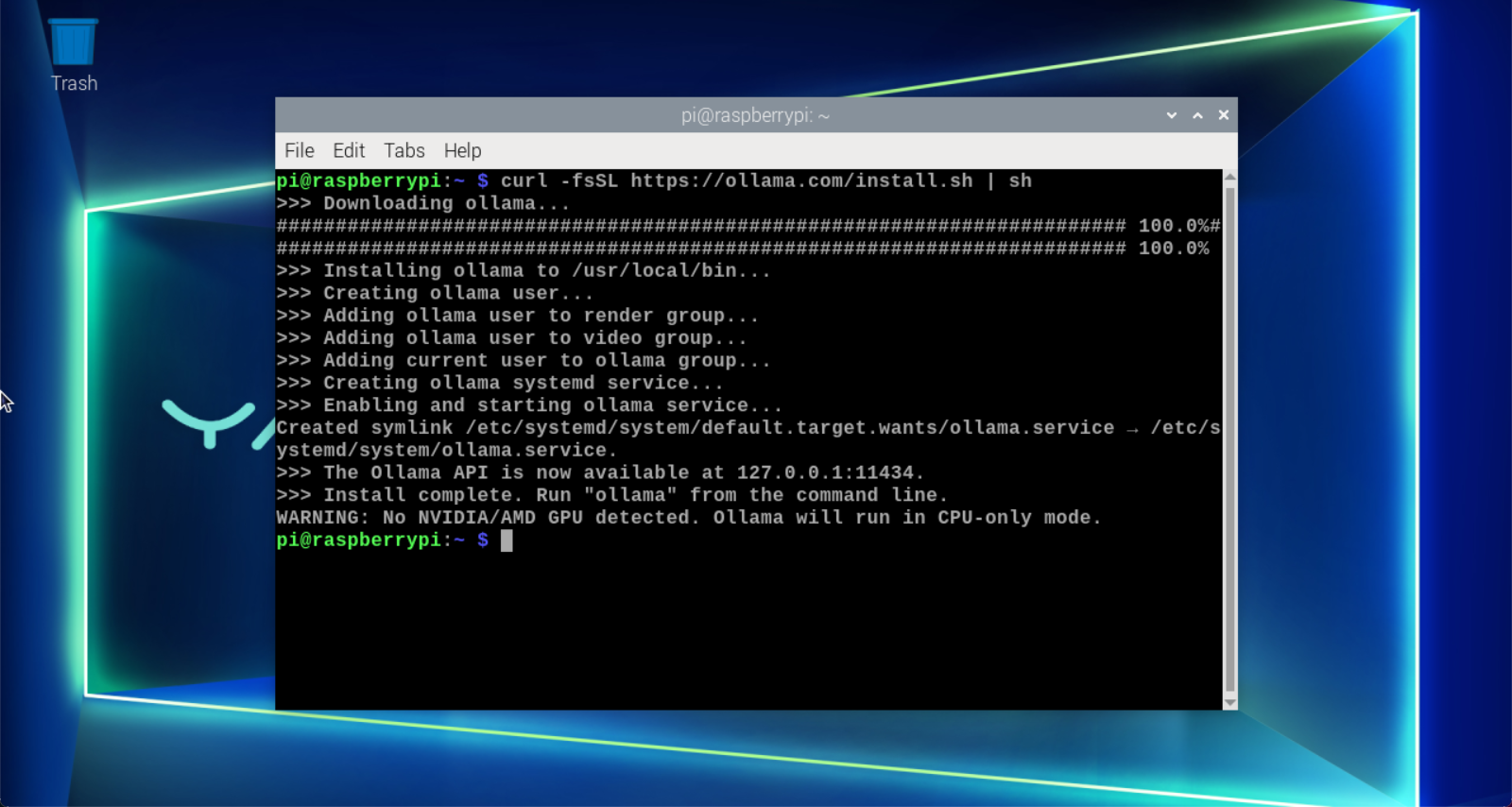

The installation steps on a Raspberry Pi 5 motherboard are the same.

When the Raspberry Pi terminal displays "Install complete", Ollama has been installed successfully.

The warning means that no NVIDIA/AMD GPU was detected, so Ollama will run in CPU-only mode. It can safely be ignored.
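To confirm that the installation worked, a quick check along the following lines may help. This is a minimal sketch, not part of the original tutorial: `have_cmd` is a hypothetical helper name, and the address 127.0.0.1:11434 assumes the server is running with Ollama's default settings.

```shell
# Return success if the named command is available on PATH.
# (have_cmd is a helper defined here for illustration.)
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd ollama; then
    ollama -v    # print the installed version
else
    echo "ollama not found on PATH"
fi

# Assumption: the server listens on the default address 127.0.0.1:11434.
if have_cmd curl && curl -fsS --max-time 5 http://127.0.0.1:11434/ >/dev/null 2>&1; then
    echo "Ollama server is reachable"
else
    echo "Ollama server is not reachable (try: sudo systemctl status ollama)"
fi
```

If the server is not reachable, the service may simply not have started yet; checking its status with systemctl is a reasonable first step.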

Ollama Usage

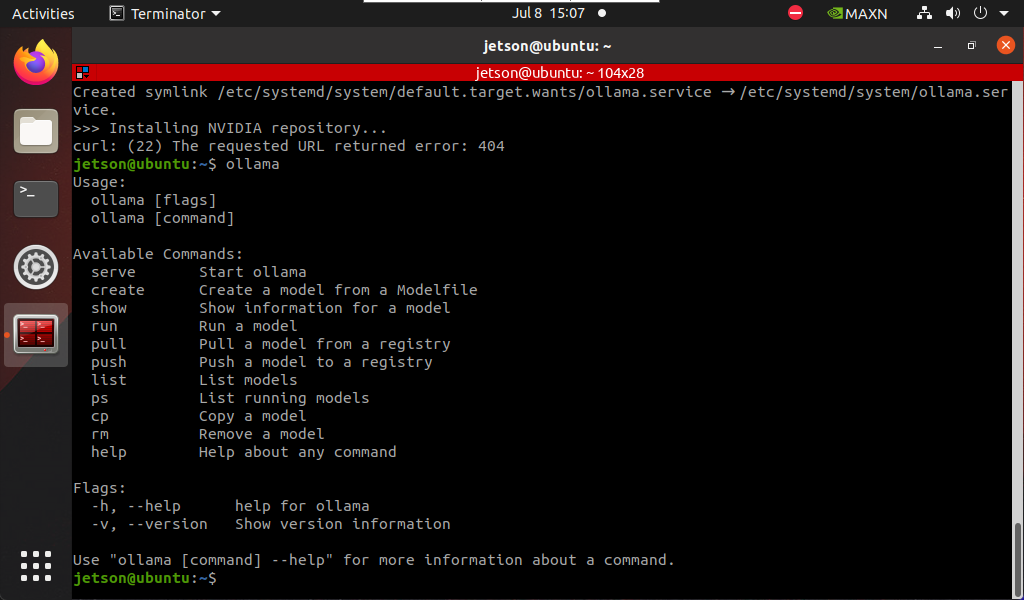

Type ollama in the terminal to display the usage help:

| Command | Function |
|---|---|
| ollama serve | Start Ollama |
| ollama create | Create a model from a model file |
| ollama show | Display model information |
| ollama run | Run the model |
| ollama pull | Pull the model from the registry |
| ollama push | Push the model to the registry |
| ollama list | List the models |
| ollama ps | List the running models |
| ollama cp | Copy the model |
| ollama rm | Delete the model |
| ollama help | Get help information about any command |
| ollama -v | View the Ollama version number |
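The commands in the table are typically combined into a pull, run, and clean-up cycle. The sketch below illustrates one such sequence under stated assumptions: the model name `llama3.2` is only a placeholder (substitute any model that fits the board's memory), and `run` and `workflow` are hypothetical helper names introduced here, not part of Ollama.

```shell
# Placeholder model name (an assumption); choose one suited to your board.
MODEL="${MODEL:-llama3.2}"

# Print each command; execute it only when DRY_RUN is unset or empty.
run() {
    echo "+ $*"
    if [ -z "${DRY_RUN:-}" ]; then
        "$@"
    fi
}

workflow() {
    run ollama pull "$MODEL"                              # download the model from the registry
    run ollama list                                       # confirm it is stored locally
    run ollama run "$MODEL" "Say hello in one sentence."  # send a one-shot prompt
    run ollama ps                                         # show the models currently loaded
    run ollama rm "$MODEL"                                # delete the model to free disk space
}
```

Calling `DRY_RUN=1 workflow` only prints the commands, which is a cheap way to preview the sequence; calling `workflow` on its own executes them and downloads the model.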

Ollama uninstall

- Stop, disable, and delete the service

      sudo systemctl stop ollama
      sudo systemctl disable ollama
      sudo rm /etc/systemd/system/ollama.service

- Delete the Ollama executable file

      sudo rm $(which ollama)

- Delete the models, the service user, and the group

      sudo rm -r /usr/share/ollama
      sudo userdel ollama
      sudo groupdel ollama
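After the uninstall steps, it can be useful to check that nothing was left behind. The following is a minimal sketch; `leftovers` is a hypothetical helper name, and the two paths are the ones removed in the steps above.

```shell
# Print every path in the argument list that still exists;
# return non-zero if at least one was found.
leftovers() {
    rc=0
    for f in "$@"; do
        if [ -e "$f" ]; then
            echo "still present: $f"
            rc=1
        fi
    done
    return "$rc"
}

if leftovers /etc/systemd/system/ollama.service /usr/share/ollama; then
    echo "no Ollama files left behind"
fi

# The service user should be gone as well.
if id -u ollama >/dev/null 2>&1; then
    echo "user 'ollama' still exists"
fi
```

Any path reported as still present can be removed by repeating the corresponding step.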

References

Ollama

Official website: https://ollama.com/

GitHub: https://github.com/ollama/ollama