Object Detection


This section uses Python to demonstrate Ultralytics object detection on images, videos, and real-time camera streams.

1. Model Introduction

Object detection is a task that involves identifying the location and category of objects in an image or video stream.

An object detector outputs a set of bounding boxes that enclose the objects in an image, along with a class label and a confidence score for each box. Object detection is a good choice when you need to identify objects of interest in a scene and know roughly where they are, but do not need their exact shape.

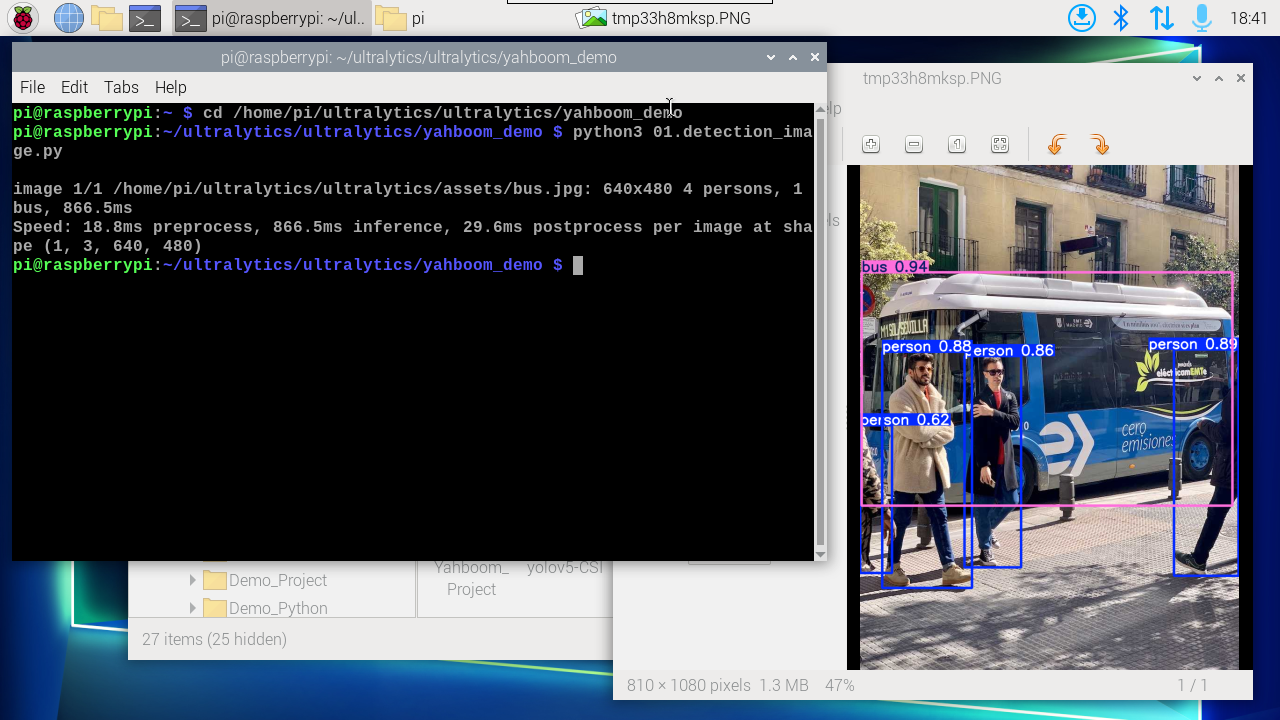
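
To make the shape of that output concrete, the sketch below flattens one detection result into plain Python dictionaries. It assumes an Ultralytics-style `Results` object, where `result.boxes` exposes `xyxy`, `conf`, and `cls` (each with a `.tolist()` method) and `result.names` maps class ids to labels. The helper name `summarize_detections` and its `min_conf` threshold are illustrative and not part of the library.

```python
def summarize_detections(result, min_conf=0.25):
    """Return one dict per detection found in an Ultralytics-style Results object.

    Assumption: result.boxes.xyxy / .conf / .cls support .tolist(), and
    result.names maps integer class ids to label strings.
    """
    boxes = result.boxes
    detections = []
    for xyxy, conf, cls_id in zip(
        boxes.xyxy.tolist(), boxes.conf.tolist(), boxes.cls.tolist()
    ):
        if conf < min_conf:
            continue  # skip boxes below the (hypothetical) confidence threshold
        detections.append(
            {
                "label": result.names[int(cls_id)],  # class label
                "confidence": round(conf, 3),  # confidence score
                "box_xyxy": [round(v, 1) for v in xyxy],  # x1, y1, x2, y2 in pixels
            }
        )
    return detections
```

With a loaded model, each item returned by `model(...)` could be passed to this helper, for example `summarize_detections(results[0])`.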

2. Target Prediction: Image

Use yolo11n.pt to run detection on a sample image that ships with the Ultralytics project.

Enter the code folder:

cd /home/pi/ultralytics/ultralytics/yahboom_demo

Run the code:

python3 01.detection_image.py

Effect preview

YOLO saves the annotated image to: /home/pi/ultralytics/ultralytics/output/

Sample code:

    from ultralytics import YOLO

    # Load a model
    model = YOLO("/home/pi/ultralytics/ultralytics/yolo11n.pt")

    # Run inference on a single image; the call returns a list of Results objects
    results = model("/home/pi/ultralytics/ultralytics/assets/bus.jpg")

    # Process results list
    for result in results:
        boxes = result.boxes  # Boxes object for bounding box outputs
        # masks = result.masks  # Masks object for segmentation masks outputs
        # keypoints = result.keypoints  # Keypoints object for pose outputs
        # probs = result.probs  # Probs object for classification outputs
        # obb = result.obb  # Oriented boxes object for OBB outputs
        result.show()  # display to screen
        result.save(filename="/home/pi/ultralytics/ultralytics/output/bus_output.jpg")  # save to disk

3. Target Prediction: Video

Use yolo11n.pt to run detection on a video stored in the ultralytics project folder (this video does not ship with Ultralytics).

Enter the code folder:

cd /home/pi/ultralytics/ultralytics/yahboom_demo

Run the code:

python3 01.detection_video.py

Effect preview

YOLO saves the annotated video to: /home/pi/ultralytics/ultralytics/output/
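
OpenCV's `VideoWriter` does not create missing folders, so if this output directory does not exist the writer may fail to open the file without raising an exception. A minimal sketch of a guard follows, assuming a hypothetical helper `prepare_output_path` that is not part of the demo scripts:

```python
from pathlib import Path


def prepare_output_path(output_dir, filename):
    """Create output_dir (and any parents) if needed, then return the full file path."""
    directory = Path(output_dir)
    directory.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    return str(directory / filename)
```

In the video script, the result could replace the hard-coded string, for example `output_path = prepare_output_path("/home/pi/ultralytics/ultralytics/output", "01.people_animals_output.mp4")`.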

Sample code:

    import cv2
    from ultralytics import YOLO

    # Load the YOLO model
    model = YOLO("/home/pi/ultralytics/ultralytics/yolo11n.pt")

    # Open the video file
    video_path = "/home/pi/ultralytics/ultralytics/videos/people_animals.mp4"
    cap = cv2.VideoCapture(video_path)

    # Get the video frame size and frame rate
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fps = int(cap.get(cv2.CAP_PROP_FPS))

    # Define the codec and create a VideoWriter object to output the processed video
    output_path = "/home/pi/ultralytics/ultralytics/output/01.people_animals_output.mp4"
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')  # You can use 'XVID' or 'mp4v' depending on your platform
    out = cv2.VideoWriter(output_path, fourcc, fps, (frame_width, frame_height))

    # Loop through the video frames
    while cap.isOpened():
        # Read a frame from the video
        success, frame = cap.read()

        if success:
            # Run YOLO inference on the frame
            results = model(frame)

            # Visualize the results on the frame
            annotated_frame = results[0].plot()

            # Write the annotated frame to the output video file
            out.write(annotated_frame)

            # Display the annotated frame
            cv2.imshow("YOLO Inference", annotated_frame)

            # Break the loop if 'q' is pressed
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
        else:
            # Break the loop if the end of the video is reached
            break

    # Release the video capture and writer objects, and close the display window
    cap.release()
    out.release()
    cv2.destroyAllWindows()

4. Target Prediction: Real-Time Detection
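
How close to real time the detection runs depends on how long each frame takes to process. The sketch below is one way to measure that, assuming a hypothetical `FpsMeter` class (not part of Ultralytics or OpenCV) that keeps a rolling window of frame timestamps. The optional `now` argument exists only so the class can be exercised without a camera.

```python
import time
from collections import deque


class FpsMeter:
    """Rolling frames-per-second estimate over the last `window` frames."""

    def __init__(self, window=30):
        # Only the most recent `window` timestamps are kept
        self.timestamps = deque(maxlen=window)

    def tick(self, now=None):
        """Record one processed frame and return the current FPS estimate."""
        self.timestamps.append(time.perf_counter() if now is None else now)
        if len(self.timestamps) < 2:
            return 0.0  # not enough samples yet
        elapsed = self.timestamps[-1] - self.timestamps[0]
        if elapsed <= 0:
            return 0.0
        # N timestamps span N - 1 frame intervals
        return (len(self.timestamps) - 1) / elapsed
```

In the camera loops of this section, calling `meter.tick()` once per iteration after `model(frame)` would give the current estimate, which could then be printed or drawn on the frame with `cv2.putText`.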

4.1. USB camera

Use yolo11n.pt to run detection on the live feed from a USB camera.

Enter the code folder:

cd /home/pi/ultralytics/ultralytics/yahboom_demo

Run the code. To stop the program, click the preview window and press the q key.

python3 01.detection_camera_usb.py

Effect preview

YOLO saves the annotated video to: /home/pi/ultralytics/ultralytics/output/

Sample code:

    import cv2
    from ultralytics import YOLO

    # Load the YOLO model
    model = YOLO("/home/pi/ultralytics/ultralytics/yolo11n.pt")

    # Open the camera (device index 0)
    cap = cv2.VideoCapture(0)

    # Get the camera frame size and frame rate
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fps = int(cap.get(cv2.CAP_PROP_FPS))
    if fps <= 0:
        fps = 30  # assumed fallback: some cameras do not report a frame rate

    # Define the codec and create a VideoWriter object to output the processed video
    output_path = "/home/pi/ultralytics/ultralytics/output/01.detection_camera_usb.mp4"
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')  # You can use 'XVID' or 'mp4v' depending on your platform
    out = cv2.VideoWriter(output_path, fourcc, fps, (frame_width, frame_height))

    # Loop through the camera frames
    while cap.isOpened():
        # Read a frame from the camera
        success, frame = cap.read()

        if success:
            # Run YOLO inference on the frame
            results = model(frame)

            # Visualize the results on the frame
            annotated_frame = results[0].plot()

            # Write the annotated frame to the output video file
            out.write(annotated_frame)

            # Display the annotated frame
            cv2.imshow("YOLO Inference", annotated_frame)

            # Break the loop if 'q' is pressed
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
        else:
            # Break the loop if no frame could be read from the camera
            break

    # Release the video capture and writer objects, and close the display window
    cap.release()
    out.release()
    cv2.destroyAllWindows()

4.2. CSI camera

Use yolo11n.pt to run detection on the live feed from a CSI camera.

Enter the code folder:

cd /home/pi/ultralytics/ultralytics/yahboom_demo

Run the code. To stop the program, click the preview window and press the q key.

python3 01.detection_camera_csi.py

Effect preview

YOLO saves the annotated video to: /home/pi/ultralytics/ultralytics/output/

Sample code:

    import cv2
    from picamera2 import Picamera2
    from ultralytics import YOLO

    # Initialize the Picamera2
    picam2 = Picamera2()
    picam2.preview_configuration.main.size = (640, 480)
    picam2.preview_configuration.main.format = "RGB888"
    picam2.preview_configuration.align()
    picam2.configure("preview")
    picam2.start()

    # Load the YOLO11 model
    model = YOLO("/home/pi/ultralytics/ultralytics/yolo11n.pt")

    # Set up video output
    output_path = "/home/pi/ultralytics/ultralytics/output/01.detection_camera_csi.mp4"
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    out = cv2.VideoWriter(output_path, fourcc, 30, (640, 480))

    while True:
        # Capture frame-by-frame
        frame = picam2.capture_array()

        # Run YOLO11 inference on the frame
        results = model(frame)

        # Visualize the results on the frame
        annotated_frame = results[0].plot()

        # Write the frame to the video file
        out.write(annotated_frame)

        # Display the resulting frame
        cv2.imshow("Camera", annotated_frame)

        # Break the loop if 'q' is pressed
        if cv2.waitKey(1) == ord("q"):
            break

    # Release resources and close windows
    picam2.close()
    out.release()
    cv2.destroyAllWindows()