11. MediaPipe gesture recognition to control car movement


11.1. Using

Once the function is enabled, the camera captures images and recognizes hand gestures, which are then used to control the car's movement.

Note: The [R2] button on the remote controller pauses and resumes this function.

Start command (robot side)

```shell
# Enter the robot's Docker container first (you may need to perform this step more than once)
# If running the script to enter Docker fails, refer to 07.Docker-orin/05, Enter the robot's docker container
~/run_docker.sh
roslaunch arm_mediapipe mediaArm.launch
```

Start command (virtual machine)

```shell
rosrun arm_mediapipe HandCtrl.py
```

After the program starts, press the R2 button on the controller to enable the function, then place your hand in front of the camera. The screen draws the outline of your fingers. Once the program recognizes a gesture, it sends a velocity command to the chassis, which moves the car.

Gesture number 1: the car moves left

Gesture number 2: the car moves right

Gesture number 3: the car rotates left

Gesture number 4: the car rotates right

Gesture number 5: the car moves forward

Gesture fist: the car moves backward

Gesture rock (the index finger and the little finger are straight, the others are bent): the buzzer sounds
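The gesture table above can be read as a lookup from finger states to velocity commands. The following is a minimal sketch under stated assumptions, not the logic in HandCtrl.py. It assumes that `fingers` is the 5-element list `[thumb, index, middle, ring, pinky]` produced by `fingersUp` (1 = straight), and that "number N" means N straightened fingers. The speeds `LINEAR` and `ANGULAR` and the names `classify`, `COMMANDS` and `gesture_to_velocity` are hypothetical. The `(x, y, z)` triple follows the usual ROS base-frame convention: x is forward speed, y is leftward speed, and z is yaw rate, with positive values turning left.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical speed magnitudes; the values used by HandCtrl.py are not given here.
LINEAR = 0.2   # m/s
ANGULAR = 0.5  # rad/s

# (x, y, z): x = forward speed, y = leftward speed, z = yaw rate (positive = turn left).
COMMANDS: Dict[str, Tuple[float, float, float]] = {
    "1": (0.0, LINEAR, 0.0),       # move left
    "2": (0.0, -LINEAR, 0.0),      # move right
    "3": (0.0, 0.0, ANGULAR),      # rotate left
    "4": (0.0, 0.0, -ANGULAR),     # rotate right
    "5": (LINEAR, 0.0, 0.0),       # move forward
    "fist": (-LINEAR, 0.0, 0.0),   # move backward
}
STOP = (0.0, 0.0, 0.0)


def classify(fingers: List[int]) -> Optional[str]:
    """Map [thumb, index, middle, ring, pinky] (1 = straight) to a gesture name."""
    # Rock (index and little finger straight, others bent) also has two fingers up,
    # so it is checked before counting, otherwise it would be read as "2".
    if fingers == [0, 1, 0, 0, 1]:
        return "rock"
    n = sum(fingers)
    if n == 0:
        return "fist"
    if 1 <= n <= 5:
        return str(n)
    return None


def gesture_to_velocity(fingers: List[int]) -> Tuple[Optional[str], Tuple[float, float, float]]:
    """Return the gesture name and the (x, y, z) velocity; unknown gestures stop the car."""
    gesture = classify(fingers)
    velocity = COMMANDS.get(gesture, STOP) if gesture is not None else STOP
    return gesture, velocity


if __name__ == "__main__":
    print(gesture_to_velocity([0, 1, 1, 1, 0]))  # ('3', (0.0, 0.0, 0.5))
```

In this sketch the rock gesture maps to a zero velocity. Sounding the buzzer would be handled by a separate action.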

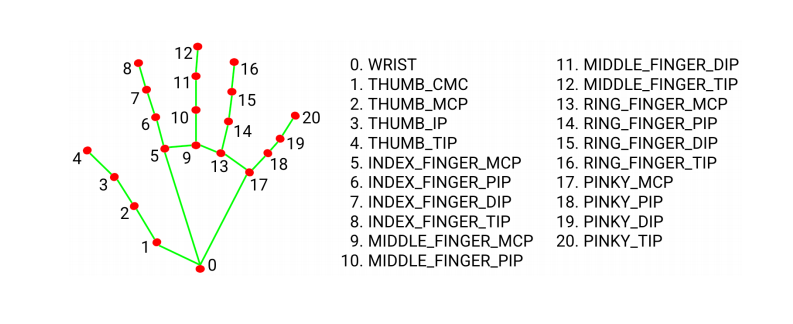

MediaPipe Hands infers the 3D coordinates of 21 hand landmarks (joints) from a single frame.
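For reference when reading the finger-detection code, here is the indexing that MediaPipe Hands uses for its 21 landmarks, with joint names following the MediaPipe hand landmark model. The `LANDMARKS` dictionary and the `TIP_IDS` name are illustrative only and are not part of media_library.

```python
# Index layout of the 21 MediaPipe Hands landmarks.
# 0 is the wrist; each finger then has 4 points ordered from base to tip.
LANDMARKS = {
    "wrist": [0],
    "thumb": [1, 2, 3, 4],        # CMC, MCP, IP, TIP
    "index": [5, 6, 7, 8],        # MCP, PIP, DIP, TIP
    "middle": [9, 10, 11, 12],    # MCP, PIP, DIP, TIP
    "ring": [13, 14, 15, 16],     # MCP, PIP, DIP, TIP
    "pinky": [17, 18, 19, 20],    # MCP, PIP, DIP, TIP
}

# Fingertip indices in the order thumb, index, middle, ring, pinky.
TIP_IDS = [LANDMARKS[f][-1] for f in ("thumb", "index", "middle", "ring", "pinky")]

if __name__ == "__main__":
    print(TIP_IDS)  # [4, 8, 12, 16, 20]
```

Because each finger's joints are consecutive, a fingertip's PIP joint (or the thumb's MCP joint) sits two indices below the tip.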

11.2. Core code analysis HandCtrl.py

Code reference path: ~/yahboomcar_ws/src/arm_mediapipe/scripts

Import key libraries

```python
from media_library import *  # This library contains functions to detect hands, get gestures, etc.
```

Get finger data

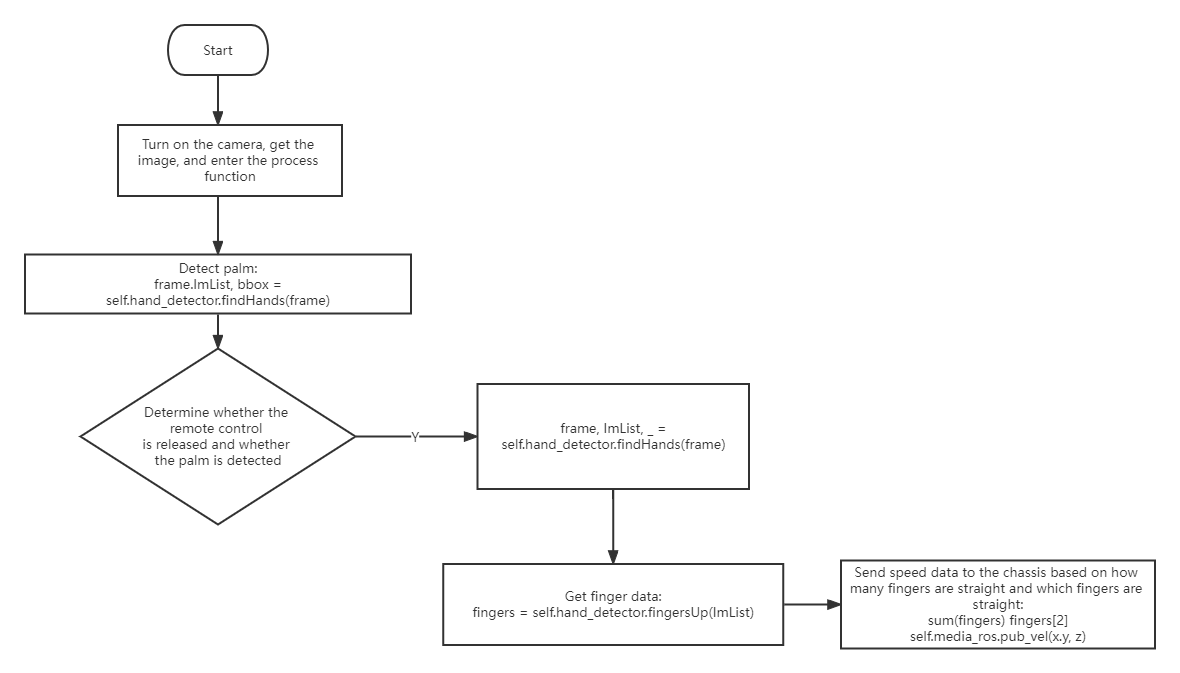

```python
frame, lmList, _ = self.hand_detector.findHands(frame)
fingers = self.hand_detector.fingersUp(lmList)
sum(fingers)   # number of straightened fingers
fingers[1]     # state of a single finger, e.g. fingers[1] is the index finger
```

The hand is detected first to obtain lmList, which is then passed to the fingersUp function. fingersUp determines which fingers are straightened: each straightened finger has the value 1. Its implementation, with detailed comments, is in media_library.py. It essentially compares the x/y coordinates of the finger joints to decide whether each finger is straight. sum(fingers) counts the straightened fingers, and fingers[i] reads the state of an individual finger; for example, fingers[1] is the index finger.
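To make the joint comparison concrete, here is a minimal, hypothetical sketch of a fingersUp-style check. It is not the code in media_library.py. It assumes each `lmList` entry is `[id, x, y]` in image pixels, with y increasing downward as in OpenCV. It uses a simple heuristic: a non-thumb finger counts as straight when its tip lies above its PIP joint. The thumb counts as straight when its tip is farther horizontally from the pinky base (landmark 17) than its IP joint (landmark 3) is. The function name `fingers_up` is illustrative.

```python
from typing import List, Sequence

# Fingertip landmark indices: thumb, index, middle, ring, pinky.
TIP_IDS = [4, 8, 12, 16, 20]


def fingers_up(lm_list: Sequence[Sequence[float]]) -> List[int]:
    """Return [thumb, index, middle, ring, pinky] with 1 = straight, 0 = bent.

    Hypothetical sketch: lm_list[i] is assumed to be [id, x, y] in image pixels,
    with y increasing downward (OpenCV image convention).
    """
    fingers = []

    # Thumb heuristic: straight if its tip is farther (horizontally) from the
    # pinky base (landmark 17) than the thumb IP joint (landmark 3) is.
    pinky_base_x = lm_list[17][1]
    tip_x, ip_x = lm_list[4][1], lm_list[3][1]
    fingers.append(1 if abs(tip_x - pinky_base_x) > abs(ip_x - pinky_base_x) else 0)

    # Other fingers: straight if the tip is above (smaller y than) the PIP joint,
    # which sits two indices below the tip.
    for tip in TIP_IDS[1:]:
        fingers.append(1 if lm_list[tip][2] < lm_list[tip - 2][2] else 0)

    return fingers
```

A purely vertical test like this assumes the hand is held roughly upright; a production version might compare joint distances or angles instead.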

Publish velocity to the chassis

```python
self.media_ros.pub_vel(x, y, z)  # This function is also in media_library.py
```

11.3. Flowchart