7、Cartographer graph building algorithm

7、Cartographer graph building algorithm7.1、Introduction7.2、use7.2.1、pre-use configuration7.2.2、specific use7.3、node parsing7.3.1、display the computational graph7.3.2、cartographer_node details7.3.3、TF transform

Cartographer:https://google-cartographer.readthedocs.io/en/latest/

Cartographer ROS2:https://github.com/ros2/cartographer_ros

The operating environment and software and hardware reference configurations are as follows:

REFERENCE MODEL: ROSMASTER X3

Robot hardware configuration: Arm series main control, Silan A1 lidar, AstraPro Plus depth camera

Robot system: Ubuntu (version not required) + docker (version 20.10.21 and above)

PC Virtual Machine: Ubuntu (22.04) + ROS2 (Humble)

Usage scenario: Use on a relatively clean 2D plane

7.1、Introduction

Cartographer

Cartographer is a 2D and 3D SLAM (simultaneous localization and mapping) library supported by Google's open source ROS system. Method graph construction algorithm based on graph optimization (multi-threaded backend optimization, problem optimization built by CERE). Data from multiple sensors, such as LIDAR, IMUs, and cameras, can be combined to simultaneously calculate the sensor's position and map the environment around the sensor.

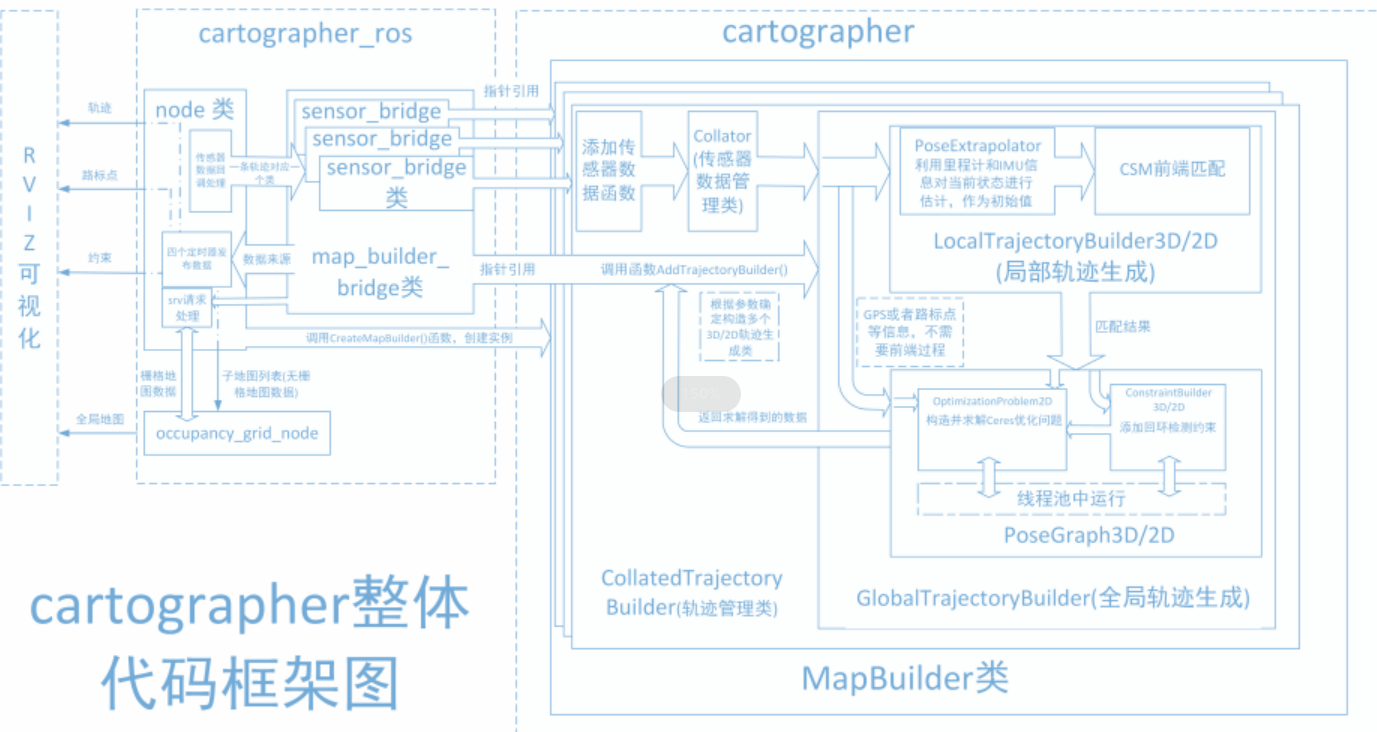

The source code of Cartographer mainly includes three parts: Cartographer, cartographer_ros, and Cers-Solver (back-end optimization).

Cartographer adopts the mainstream SLAM framework, that is, the three-stage of feature extraction, closed-loop detection, and back-end optimization. A certain number of LaserScan forms a submap submap, and a series of submap submaps make up the global map. The cumulative error of the short-term process of building a submap with LaserScan is not large, but the long-term process of building a global map with a submap will have a large cumulative error, so it is necessary to use closed-loop detection to correct the position of these submaps, the basic unit of closed-loop detection is submap, and closed-loop detection adopts a scan_match strategy. The focus of cartographer is the creation of submap submaps that fuse multi-sensor data (odometry, IMU, LaserScan, etc.) and the implementation of scan_match strategies for closed-loop inspection.

cartographer_ros

cartographer_ros is running under ROS and can accept various sensor data in the form of ROS messages

After processing, it is published in the form of a message for easy debugging and visualization.

7.2、use

7.2.1、pre-use configuration

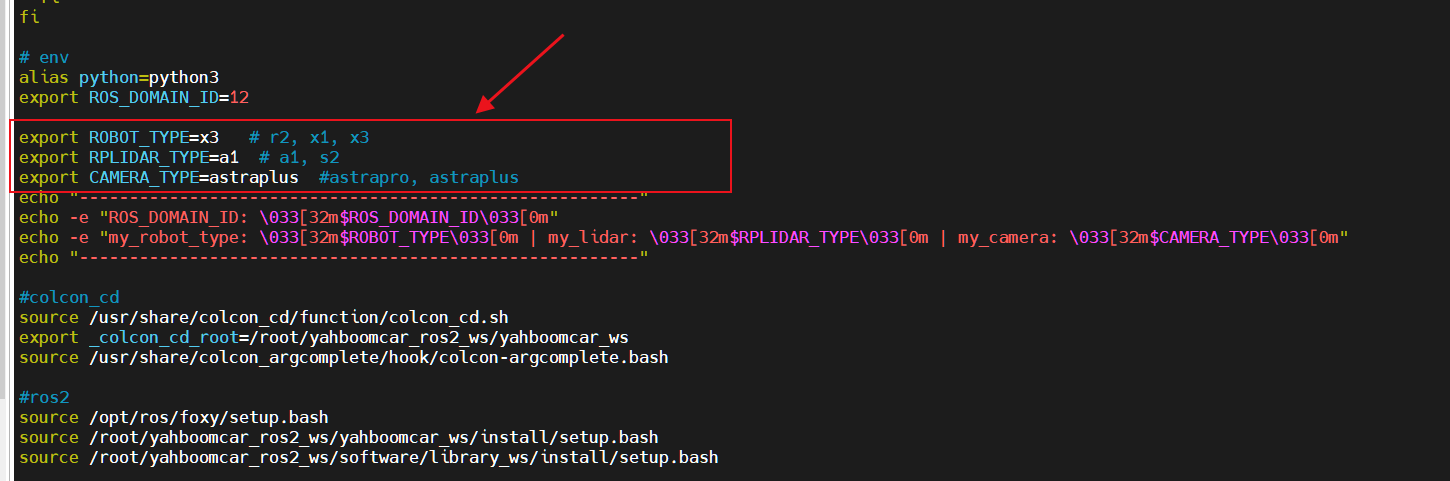

Note: Since ROSMASTER series robots are divided into multiple robots and multiple devices, the factory system has been configured with routines for multiple devices, but because the product cannot be automatically identified, it is necessary to manually set the machine type and radar model.

Raspberry Pi PI5 master control needs to enter the docker container first, Orin master control does not need to enter,

according to the model of the car, the type of radar and the type of camera, make the following modifications:

root@ubuntu:/# cdroot@ubuntu:~# vim .bashrc

After the modification is complete, save and exit vim, and then execute:

xxxxxxxxxxroot@ubuntu:~# source .bashrc--------------------------------------------------------ROS_DOMAIN_ID: 12my_robot_type: x3 | my_lidar: a1 | my_camera: astraplus--------------------------------------------------------root@ubuntu:~#You can see the model of the currently modified car, the type of radar and the type of camera.

7.2.2、specific use

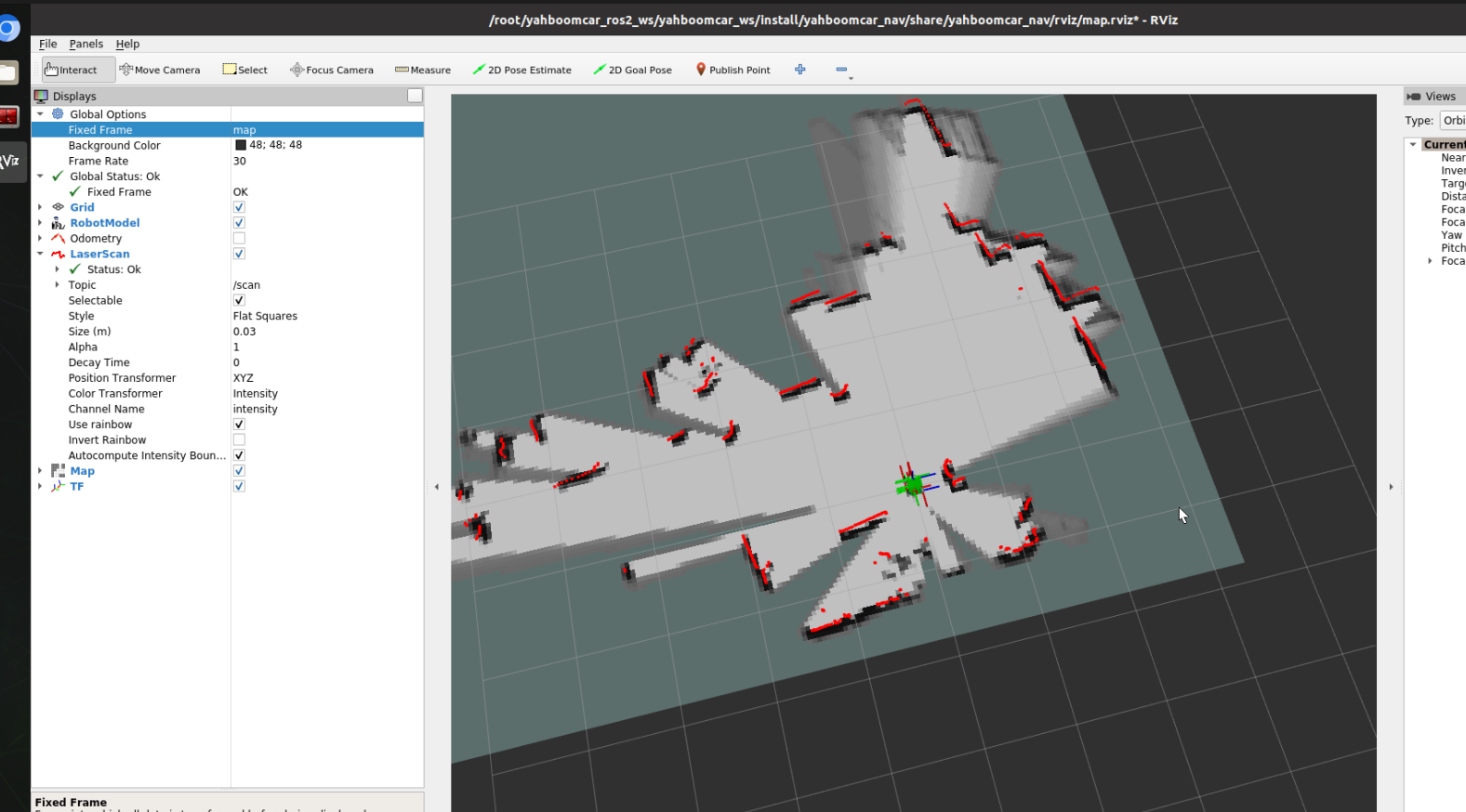

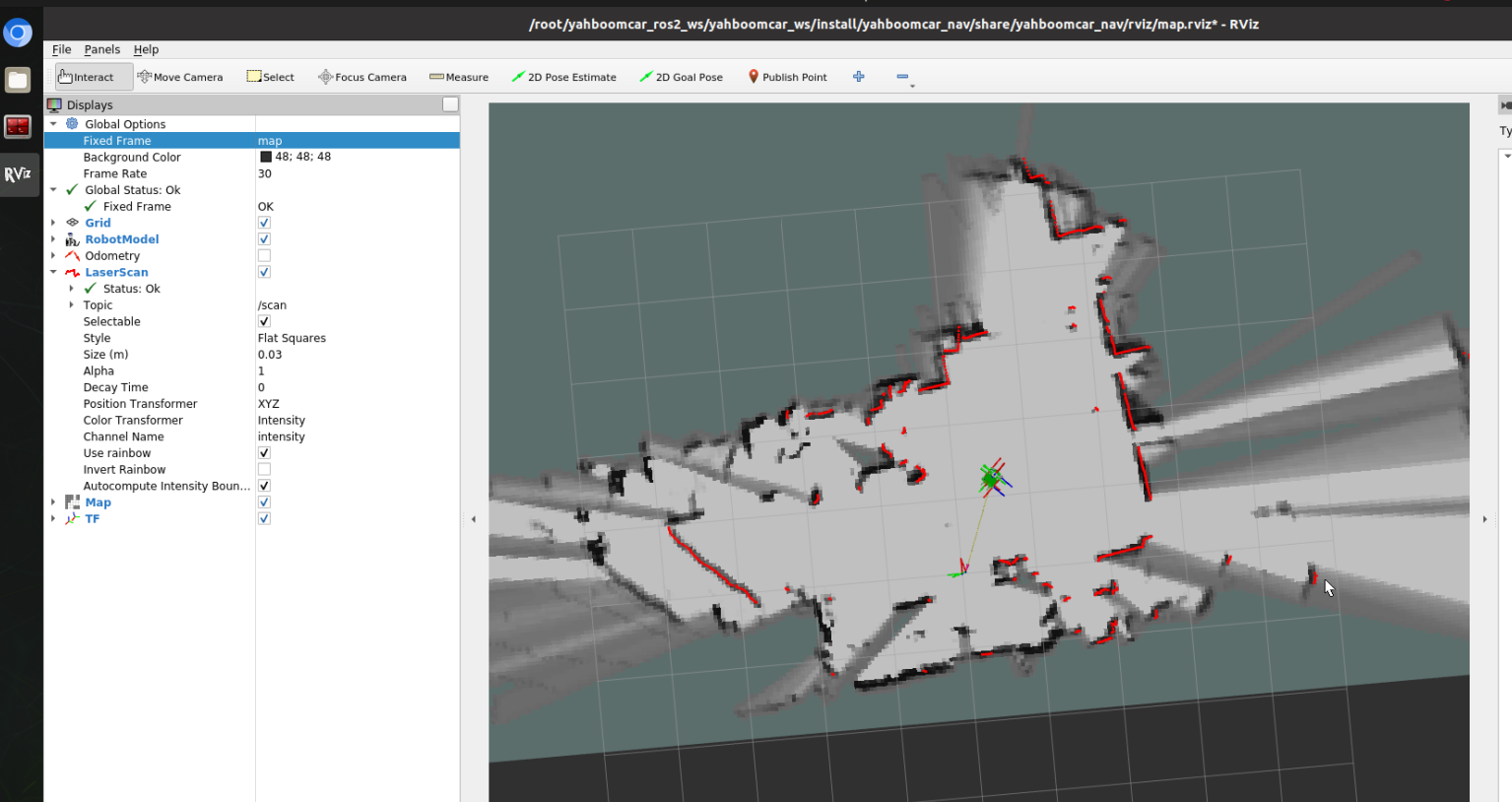

Note: When building the image, the slower the effect, the better (note if the rotation speed is slower), too fast the effect, the effect will be poor.

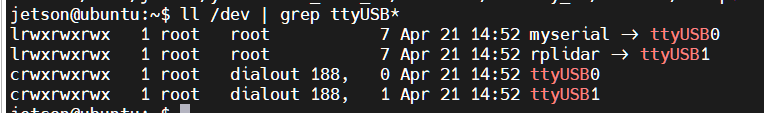

First of all, you need to do port binding operations in the host [that is, on the jetson of the car] [see the port binding tutorial chapter], which mainly uses two devices: radar and serial port;

Then check whether the radar and serial device are in the port binding state: on the host [that is, the jetson of the car], refer to the following command to view, and the successful binding is the following state:

If the radar or serial device is not bound to the display, you can plug and unplug the USB cable to view it again

Raspberry Pi PI5 master needs to enter the docker container first, Orin master does not need to enter,

Enter the docker container (for steps, please refer to [docker course chapter ----- 5. Enter the robot's docker container]),

and execute the following launch file in the terminal:

Start mapping

xxxxxxxxxxros2 launch yahboomcar_nav map_cartographer_launch.py

Start rviz to display the map, this step is recommended to be executed in the virtual machine, and multi-machine communication needs to be configured in the virtual machine

xxxxxxxxxxros2 launch yahboomcar_nav display_map_launch.py

Start the keyboard control node, this step is recommended to be executed in the virtual machine, and multi-machine communication needs to be configured in the virtual machine

Or use the remote control [Move the trolley slowly] to start building the map until the complete map is built

xxxxxxxxxxros2 run yahboomcar_ctrl yahboom_keyboard

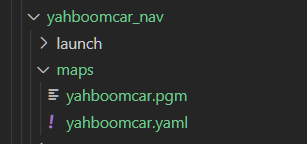

Save the map, the path is as follows:

xxxxxxxxxxros2 launch yahboomcar_nav save_map_launch.py

The save path is as follows:

xxxxxxxxxx~/yahboomcar_ros2_ws/yahboomcar_ws/src/yahboomcar_nav/maps/

A PGM image, a YAML file yahboomcar.yaml

xxxxxxxxxximage: yahboomcar.pgmmode: trinaryresolution: 0.05origin: [-10, -10, 0]negate: 0occupied_thresh: 0.65free_thresh: 0.25

Parameter parsing:

image: The path to the map file, which can be absolute or relative

mode: This attribute can be one of trinary, scale, or raw, depending on the mode selected, trinary mode is the default mode

Resolution: The resolution of the map, meters per pixel

origin: 2D pose (x,y,yaw) in the lower left corner of the map, where yaw is rotated counterclockwise (yaw=0 means no rotation). Many parts of the system today ignore the YAW value.

negate: whether to reverse the meaning of white/black, free/occupy (the interpretation of the threshold is not affected)

occupied_thresh: Pixels with a probability of occupancy greater than this threshold are considered fully occupied.

free_thresh: Pixels with a probability of occupancy less than this threshold are considered completely free.

7.3、node parsing

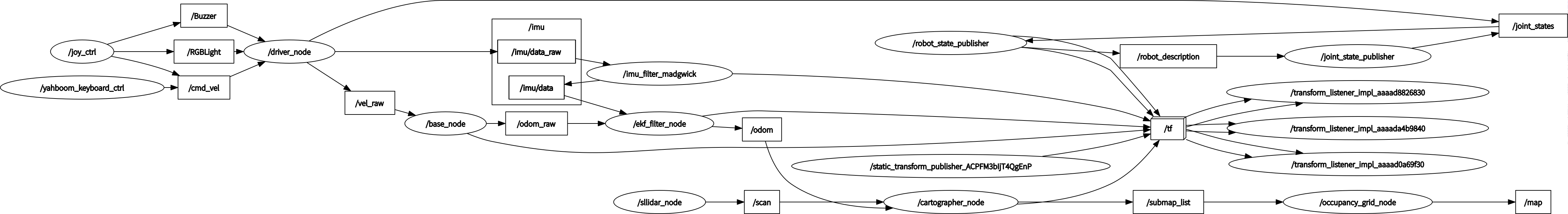

7.3.1、display the computational graph

xxxxxxxxxxrqt_graph

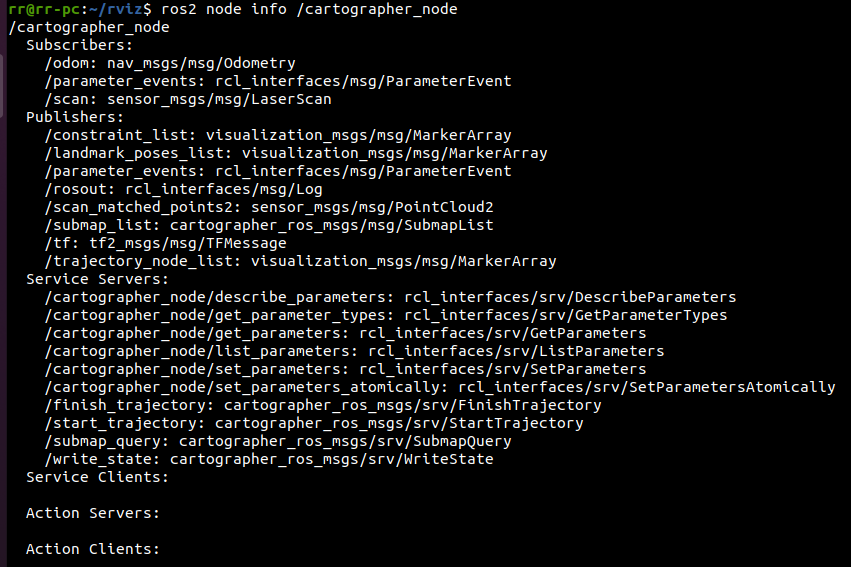

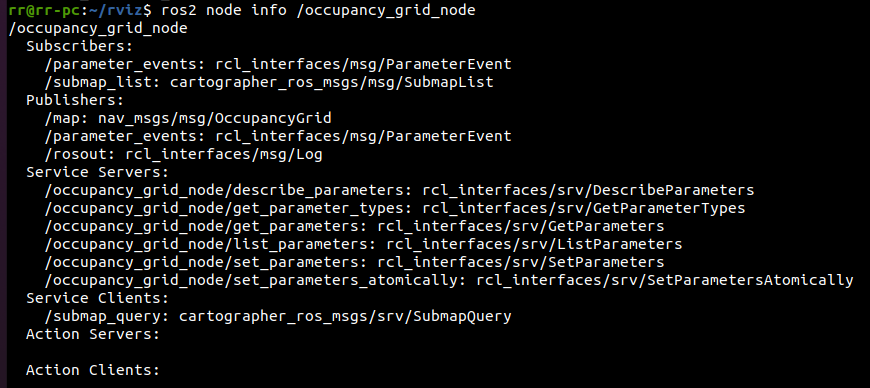

7.3.2、cartographer_node details

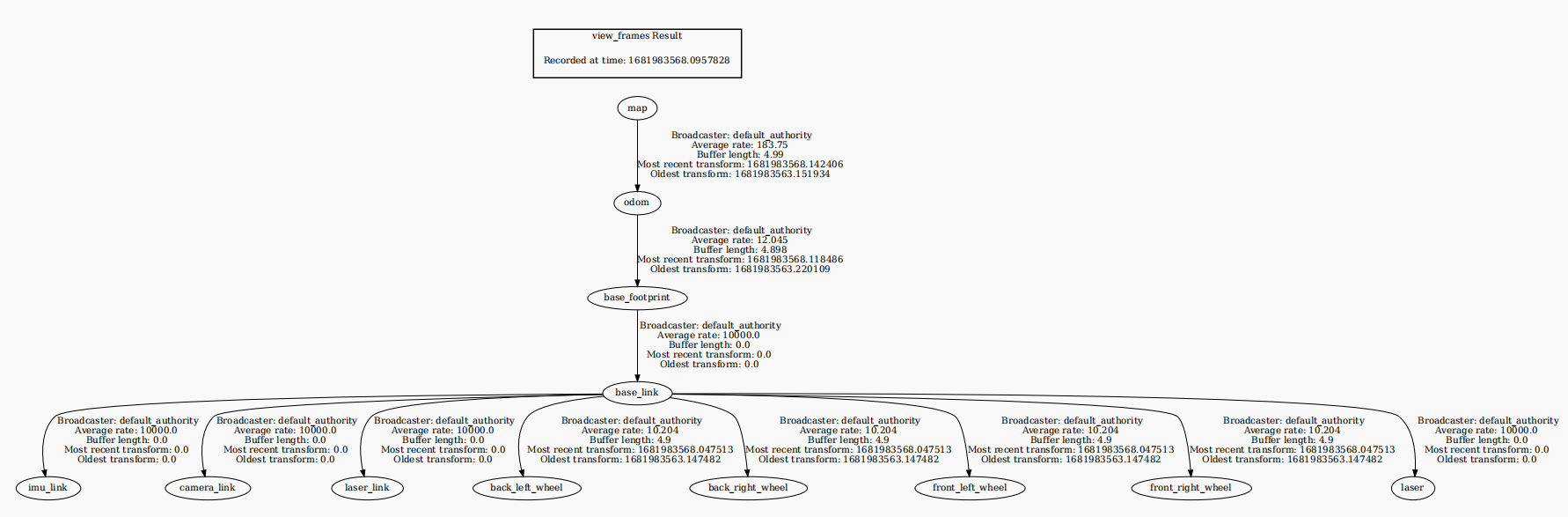

7.3.3、TF transform

xxxxxxxxxxros2 run tf2_tools view_frames.py